If you have been keeping up with post-quantum cryptography (PQC), the latest release from the U.S. National Institute of Standards and Technology (NIST) is worth noting. NIST has published a draft Cybersecurity White Paper titled “Considerations for Achieving Crypto-Agility”, which outlines the practical challenges, trade-offs, and strategies that organizations must consider when transitioning cryptographic systems for the post-quantum era.

The goal of this draft is to build a shared understanding of the challenges and to identify existing approaches related to crypto agility based on discussions NIST has held with various organizations and individuals. It also serves as a pre-read for an upcoming NIST-hosted virtual workshop, where the cryptographic community will further explore these issues and help shape the final version of the paper.

You might be wondering who really needs to focus on this. The answer is simple: pretty much everyone involved in cybersecurity. Whether you are designing protocols, managing IT systems, developing software or standards, building hardware, or shaping policy, the insights in this white paper are directly relevant to your role in ensuring secure and agile cryptographic systems for the future.

What is Crypto-Agility?

As defined by NIST, Cryptographic Agility refers to the capabilities needed to replace and adapt cryptographic algorithms in protocols, applications, software, hardware, and infrastructures without interrupting the flow of a running system in order to achieve resiliency.

In simpler terms, crypto agility is the ability to switch quickly and seamlessly to stronger cryptographic algorithms when existing ones become vulnerable. It matters now because a sufficiently powerful quantum computer could break widely used public-key algorithms such as RSA and elliptic-curve cryptography, making it necessary to move to quantum-resistant algorithms. Crypto agility ensures that systems can make this switch efficiently without rebuilding entire applications or infrastructures.

This flexibility allows systems to adopt stronger algorithms and retire weak ones with minimal changes to applications or infrastructure. Achieving crypto agility often involves updating APIs, software libraries, or hardware, and maintaining protocol interoperability when new cipher suites are introduced.

More than just a technical goal, crypto agility is now a strategic necessity. It requires coordinated efforts from developers, IT administrators, policymakers, and security professionals to ensure systems remain secure, resilient, and future-ready in the face of evolving threats.

Why Do These Cryptographic Transitions Take So Long?

You might wonder: if we know a cryptographic algorithm is outdated, why not just replace it right away? In reality, it’s rarely that simple. Triple DES is a good example. It was meant to be a temporary patch for the aging DES algorithm. However, even after the more secure AES standard was published in 2001, Triple DES remained in use and was not disallowed for encryption until 2024. That is a 23-year transition for something that was meant to be temporary.

The reason transitions like this take decades is that many systems were not built with flexibility in mind. This makes transitioning to stronger algorithms slow, complex, and expensive.

- Hardcoded Algorithms

In many older systems, cryptographic algorithms are hardcoded directly into the application’s source code, so swapping them out often requires rewriting and retesting the entire application. That makes updates time-consuming and risky.

- Backward Compatibility and Interoperability Challenges

Another major challenge during cryptographic transitions is the need to maintain backward compatibility. A good example is SHA-1, a widely used hash function that was once considered secure. Even though its weaknesses were discovered as early as 2005, SHA-1 continued to be used for years because many systems relied on it for digital signatures, authentication, and key derivation.

Even after NIST urged agencies to stop using SHA-1 by 2010, support for it had to remain in certain protocols like TLS to preserve interoperability. As a result, known weak algorithms were kept in use far longer than recommended because not all systems could adapt in time.

This example illustrates a key challenge: when applications lack crypto-agility and cannot adapt quickly, outdated algorithms end up being used longer than necessary just to maintain compatibility with older systems.

- Constant Need for Transition

Cryptographic security is not static. It needs to evolve as computing power increases. Take RSA, for example. When RSA was first approved for digital signatures in 2000, a 1024-bit modulus was required to provide at least 80 bits of security. However, due to advances in computing power and cryptanalysis, by 2013, the minimum recommended modulus size was increased to 2048 bits to maintain a security level of at least 112 bits.

These transitions often need to happen during a device’s lifetime. If systems are not designed to support larger key sizes or stronger algorithms, they may become obsolete and require replacement. That is why planning for upgrades from the start is both practical and cost-effective.

Since 2005, NIST SP 800-57 Part 1 has projected the need to transition to 128-bit security by 2031. In 2024, NIST Internal Report (IR) 8547 stated that 112-bit security for current public-key algorithms would be deprecated by 2031, enabling a direct transition to post-quantum cryptography.
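The moving target can be made concrete with a small lookup. The minimal Python sketch below maps RSA modulus sizes to the security strengths listed in NIST SP 800-57 Part 1 and checks a key against a policy minimum. The function names are hypothetical, and rounding an untabulated size down to the nearest listed one is a simplifying assumption.

```python
# Security strengths (in bits) for RSA modulus sizes, per NIST SP 800-57 Part 1.
RSA_STRENGTH_BITS = {1024: 80, 2048: 112, 3072: 128, 7680: 192, 15360: 256}


def rsa_security_strength(modulus_bits: int) -> int:
    """Strength of the largest tabulated modulus not exceeding modulus_bits
    (a conservative simplification for sizes such as 4096)."""
    eligible = [size for size in RSA_STRENGTH_BITS if size <= modulus_bits]
    if not eligible:
        return 0  # smaller than every tabulated size: no meaningful security
    return RSA_STRENGTH_BITS[max(eligible)]


def meets_minimum(modulus_bits: int, required_strength: int) -> bool:
    """Check a key size against a policy's minimum security strength."""
    return rsa_security_strength(modulus_bits) >= required_strength


# Minimums from the RSA history above: 80 bits in 2000, 112 bits by 2013.
print(meets_minimum(1024, 80))    # True
print(meets_minimum(1024, 112))   # False
print(meets_minimum(2048, 112))   # True
print(meets_minimum(2048, 128))   # False
```

Keeping the required strength as a parameter rather than a constant means the same check still works when policy moves from 80 to 112 bits, and later to 128 bits.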

- Resource and Performance Challenges

Cryptographic transitions often come with performance trade-offs, especially when moving to post-quantum algorithms. Many of these newer algorithms require larger public keys, signatures, or ciphertexts. For instance, an RSA signature at the 128-bit security level uses a 3072-bit modulus, so each signature is 384 bytes, while the smallest ML-DSA signature defined in FIPS 204 (ML-DSA-44) is 2420 bytes, more than six times the size.

This increase in size affects not just storage and processing but also network bandwidth, slowing down communication and putting pressure on constrained environments like IoT devices or embedded systems. As a result, transitions can be slower than expected, adding yet another layer of complexity.
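The size gap is easy to quantify. This sketch compares RSA-3072 with the three ML-DSA parameter sets from FIPS 204 and estimates the bytes a certificate chain would spend on keys and signatures. The RSA public key is counted as the modulus only, and the chain estimate is a rough assumption that counts one public key and one signature per certificate while ignoring every other field.

```python
# Sizes in bytes. RSA-3072 counts the modulus only; ML-DSA values are the
# public key and signature sizes of the FIPS 204 parameter sets.
SIZES = {
    "RSA-3072":  {"public_key": 384,  "signature": 384},
    "ML-DSA-44": {"public_key": 1312, "signature": 2420},
    "ML-DSA-65": {"public_key": 1952, "signature": 3309},
    "ML-DSA-87": {"public_key": 2592, "signature": 4627},
}


def size_ratio(new: str, old: str, field: str) -> float:
    """How many times larger the new algorithm's field is than the old one's."""
    return SIZES[new][field] / SIZES[old][field]


def chain_overhead(alg: str, certificates: int) -> int:
    """Rough bytes for one public key plus one signature per certificate
    (names, extensions, and all other certificate fields are ignored)."""
    entry = SIZES[alg]
    return certificates * (entry["public_key"] + entry["signature"])


print(round(size_ratio("ML-DSA-44", "RSA-3072", "signature"), 2))  # 6.3
print(chain_overhead("RSA-3072", 3), chain_overhead("ML-DSA-44", 3))  # 2304 11196
```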

That is why crypto agility is essential. It provides a framework for building systems that can adapt to new algorithms more smoothly, even when those changes demand more from the underlying infrastructure.

Making Security Protocols Crypto-Agile

Security protocols rely on cryptographic algorithms to deliver key protections like confidentiality, integrity, authentication, and non-repudiation. For these protocols to work correctly, communicating parties must agree on a common set of cryptographic algorithms known as a cipher suite.

Crypto agility in this context means the ability to easily switch from one cipher suite to another, more secure one, without breaking compatibility. To support this, protocols should be designed modularly, allowing new algorithms to be added and old ones phased out smoothly.

In this section, we will explore the challenges and recommended practices for achieving crypto agility in security protocols.

- Clear Algorithm Identifiers

Protocols should use unambiguous, versioned identifiers for algorithms or cipher suites to support crypto agility. For example, TLS 1.3 uses cipher suite identifiers that encapsulate combinations of algorithms, while Internet Key Exchange Protocol version 2 (IKEv2) negotiates each algorithm separately using distinct identifiers.

Reusing one name across variants or key sizes, such as labelling both AES-128 and AES-256 simply as “AES”, can create confusion and increase the risk of misconfiguration during transitions. Well-defined identifiers enable phased rollouts, support fallback mechanisms, and improve troubleshooting as systems evolve.
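As an illustration of unambiguous identifiers, the following Python sketch decodes a list of TLS 1.3 cipher suite code points as registered in RFC 8446. Each value names one exact combination of AEAD cipher, key size, and hash, so there is no room for an ambiguous “AES”. The helper name is hypothetical, and skipping unknown values mirrors the TLS rule that peers ignore cipher suites they do not recognize.

```python
# TLS 1.3 cipher suite code points from RFC 8446. Each identifier fixes the
# AEAD algorithm, its key size, and the hash used by the key schedule.
TLS13_CIPHER_SUITES = {
    0x1301: "TLS_AES_128_GCM_SHA256",
    0x1302: "TLS_AES_256_GCM_SHA384",
    0x1303: "TLS_CHACHA20_POLY1305_SHA256",
}


def parse_offer(wire: bytes) -> list[str]:
    """Decode 2-byte big-endian identifiers, skipping values this side does
    not recognize instead of guessing what they mean."""
    if len(wire) % 2:
        raise ValueError("cipher suite list must contain 2-byte entries")
    codes = (int.from_bytes(wire[i:i + 2], "big") for i in range(0, len(wire), 2))
    return [TLS13_CIPHER_SUITES[c] for c in codes if c in TLS13_CIPHER_SUITES]


# 0xABCD is an unassigned value and is ignored.
print(parse_offer(bytes([0x13, 0x02, 0xAB, 0xCD, 0x13, 0x01])))
# ['TLS_AES_256_GCM_SHA384', 'TLS_AES_128_GCM_SHA256']
```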

- Timely Updates

Standards Developing Organizations (SDOs) must be able to update mandatory or recommended algorithms before advances in cryptanalysis or computing weaken them, without having to change the entire security protocol. One way to achieve this is to separate the core protocol specification from a companion document that lists the supported algorithms, so the algorithm list can be updated without altering the protocol itself.

It is important for SDOs to introduce new algorithms before the current ones become too weak and to provide a smooth transition. A delay in updating algorithms could lead to the prolonged use of outdated or insecure cryptographic methods.

- Strict Deadlines

Legacy algorithms must be retired on clear and enforceable schedules, and organizations should not let those timelines slip. At the same time, standards bodies such as the Internet Engineering Task Force (IETF) and NIST should coordinate across systems to reduce fragmentation and ensure smooth interoperability during transitions.
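The companion-document idea and enforceable deadlines can be combined in one small table. In this sketch, protocol code consults a hypothetical algorithm list with last-permitted dates instead of hardcoding algorithms. The Triple DES date reflects the end-of-2023 deadline for Triple DES encryption; treating the RSA-2048 deprecation mentioned earlier as a hard cutoff at the end of 2030 is an illustrative policy choice, not a NIST rule.

```python
from datetime import date
from typing import Optional

# Hypothetical companion algorithm list, maintained separately from the protocol.
# None means no retirement date has been set yet.
LAST_PERMITTED: dict[str, Optional[date]] = {
    "AES-256-GCM": None,
    "ML-DSA-65": None,
    "RSA-2048": date(2030, 12, 31),      # illustrative policy cutoff
    "3DES-encrypt": date(2023, 12, 31),  # Triple DES encryption deadline
}


def is_permitted(algorithm: str, on: date) -> bool:
    """Permit only listed algorithms whose last permitted date has not passed."""
    if algorithm not in LAST_PERMITTED:
        return False  # default deny: unlisted algorithms are rejected
    cutoff = LAST_PERMITTED[algorithm]
    return cutoff is None or on <= cutoff


print(is_permitted("3DES-encrypt", date(2023, 6, 1)))  # True
print(is_permitted("3DES-encrypt", date(2024, 1, 1)))  # False
print(is_permitted("RSA-2048", date(2031, 1, 1)))      # False
print(is_permitted("AES-256-GCM", date(2040, 1, 1)))   # True
```

Updating a deadline or adding an algorithm changes only the table, which is the separation the companion-document approach is meant to provide.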

- Hybrid Cryptography

Hybrid cryptography combines traditional algorithms like ECDSA with post-quantum algorithms such as ML-DSA. This approach supports crypto agility by enabling a gradual transition from classical cryptographic systems to quantum-resistant algorithms. Hybrid schemes are commonly used for signatures and key establishment, where the traditional and PQC public keys are certified either in a single certificate or in separate certificates, providing both compatibility with existing systems and protection against future quantum attacks.

While hybrid schemes are essential for validating crypto agility in real-world deployments, they introduce challenges such as increased protocol complexity, larger payloads, layered encapsulation, and performance trade-offs, which need careful consideration, especially in resource-constrained environments.
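For hybrid key establishment, the two shared secrets are typically fed together into a key derivation function, with the goal that the session key stays secret as long as either component algorithm remains unbroken. The sketch below implements HKDF (RFC 5869) with Python's standard library and applies it to the concatenated secrets. It is a simplified combiner, not the exact construction of any particular protocol; random bytes stand in for the ECDH and ML-KEM outputs, and both secrets are assumed to have a fixed length so the concatenation is unambiguous.

```python
import hashlib
import hmac
import os


def hkdf_sha256(ikm: bytes, salt: bytes, info: bytes, length: int = 32) -> bytes:
    """HKDF with SHA-256 as specified in RFC 5869 (extract, then expand)."""
    if length > 255 * hashlib.sha256().digest_size:
        raise ValueError("requested length too large for HKDF-SHA256")
    # Extract: an empty salt is replaced by HashLen zero bytes, per RFC 5869.
    prk = hmac.new(salt or bytes(32), ikm, hashlib.sha256).digest()
    # Expand: T(i) = HMAC(PRK, T(i-1) || info || i), concatenated until long enough.
    okm, block, counter = b"", b"", 1
    while len(okm) < length:
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]


def combine_hybrid(classical_secret: bytes, pqc_secret: bytes, transcript: bytes) -> bytes:
    """Derive one session key from both fixed-length shared secrets."""
    return hkdf_sha256(classical_secret + pqc_secret, salt=b"",
                       info=b"hybrid-sketch|" + transcript)


classical = os.urandom(32)  # placeholder for an ECDH shared secret
pqc = os.urandom(32)        # placeholder for an ML-KEM shared secret
session_key = combine_hybrid(classical, pqc, transcript=b"handshake-hash")
print(len(session_key))  # 32
```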

- Balancing Security and Simplicity

Cipher suites should maintain consistent strength across all components. Mixing weak and strong primitives in one suite undermines overall security. Overly complex negotiation logic also increases the risk of bugs and downgrade attacks. Streamlined suites improve analysis, simplify testing, and support more reliable cryptographic transitions.
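Consistent strength can be checked mechanically, because a suite is only as strong as its weakest component. This sketch uses security strengths from the comparison tables in NIST SP 800-57 Part 1 (collision resistance for the hash functions) and flags unbalanced suites. The component list and the zero-bit default tolerance are assumptions for illustration.

```python
# Security strengths in bits, per NIST SP 800-57 Part 1
# (hash values are for collision resistance).
STRENGTH = {
    "AES-128": 128, "AES-256": 256,
    "SHA-256": 128, "SHA-384": 192,
    "P-256": 128, "P-384": 192,
    "RSA-2048": 112, "RSA-3072": 128,
}


def suite_strength(components: list[str]) -> int:
    """A suite is only as strong as its weakest component."""
    return min(STRENGTH[c] for c in components)


def is_balanced(components: list[str], tolerance: int = 0) -> bool:
    """True if component strengths differ by no more than `tolerance` bits."""
    levels = [STRENGTH[c] for c in components]
    return max(levels) - min(levels) <= tolerance


suite = ["AES-256", "SHA-384", "RSA-2048"]
print(suite_strength(suite), is_balanced(suite))     # 112 False
print(is_balanced(["AES-128", "SHA-256", "P-256"]))  # True
```

In the first suite, AES-256 buys nothing beyond the 112 bits that RSA-2048 provides, which is the kind of mismatch that makes a suite harder to analyze.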

Building Crypto-Agility for Applications

Crypto APIs separate application logic from cryptographic implementations, allowing applications to perform encryption, signing, hashing, or key management without embedding cryptographic code directly. These operations are handled by dedicated libraries or providers. This abstraction makes it easier to switch between algorithms, such as AES-CCM and AES-GCM, without major changes to the application. Crypto APIs also enable seamless integration with protocols like TLS and IPsec, which rely on these interfaces for cryptographic operations.

To support crypto agility, system designers must streamline the replacement of algorithms across software, hardware, and infrastructure. The cryptographic interface must be user-friendly and well-documented to reduce errors and support long-term security.
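The following Python sketch shows the pattern. Because the standard library has no AES-GCM or AES-CCM, it swaps hash functions behind an HMAC interface instead; the idea of selecting the algorithm from configuration rather than from application code is the same. `CryptoProvider` and its configuration keys are hypothetical, not a real library API.

```python
import hashlib
import hmac


class CryptoProvider:
    """Hypothetical thin crypto API: the application asks for "a MAC" and the
    provider decides, from configuration, which hash function backs it."""

    def __init__(self, config: dict):
        self.algorithm = config["mac_algorithm"]
        self.allowed = set(config["allowed"])
        hashlib.new(self.algorithm)  # fail fast if this platform lacks the algorithm

    def mac(self, key: bytes, message: bytes) -> tuple[str, bytes]:
        # Return the algorithm name with the tag so verifiers know what was used.
        return self.algorithm, hmac.new(key, message, self.algorithm).digest()

    def verify(self, key: bytes, message: bytes, algorithm: str, tag: bytes) -> bool:
        # Only accept algorithms the current policy still allows.
        if algorithm not in self.allowed:
            return False
        expected = hmac.new(key, message, algorithm).digest()
        return hmac.compare_digest(expected, tag)


# Switching algorithms is a configuration change; application code is untouched.
policy = ["sha256", "sha3_256"]
old_app = CryptoProvider({"mac_algorithm": "sha256", "allowed": policy})
new_app = CryptoProvider({"mac_algorithm": "sha3_256", "allowed": policy})

key = b"k" * 32
alg, tag = old_app.mac(key, b"hello")
print(alg, len(tag))                            # sha256 32
print(new_app.verify(key, b"hello", alg, tag))  # True
print(new_app.mac(key, b"hello")[0])            # sha3_256
```

Returning the algorithm name with each tag lets old and new tags coexist during a transition, while the allowed set lets policy retire an algorithm without touching callers.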

NIST also examines several use cases for crypto APIs in detail, including:

- Using an API in a Crypto Library Application

A Cryptographic Service Provider (CSP) delivers algorithm implementations through a crypto API and may also manage protected key storage. Enterprise policies set by the Chief Information Security Officer (CISO) define which algorithms are allowed, and CSPs can enforce these rules at runtime. For instance, a policy might forbid encryption with Triple DES while still allowing decryption for backward compatibility. Application developers must ensure that cryptographic libraries can be updated efficiently to support the rapid adoption of newer, more secure primitives.

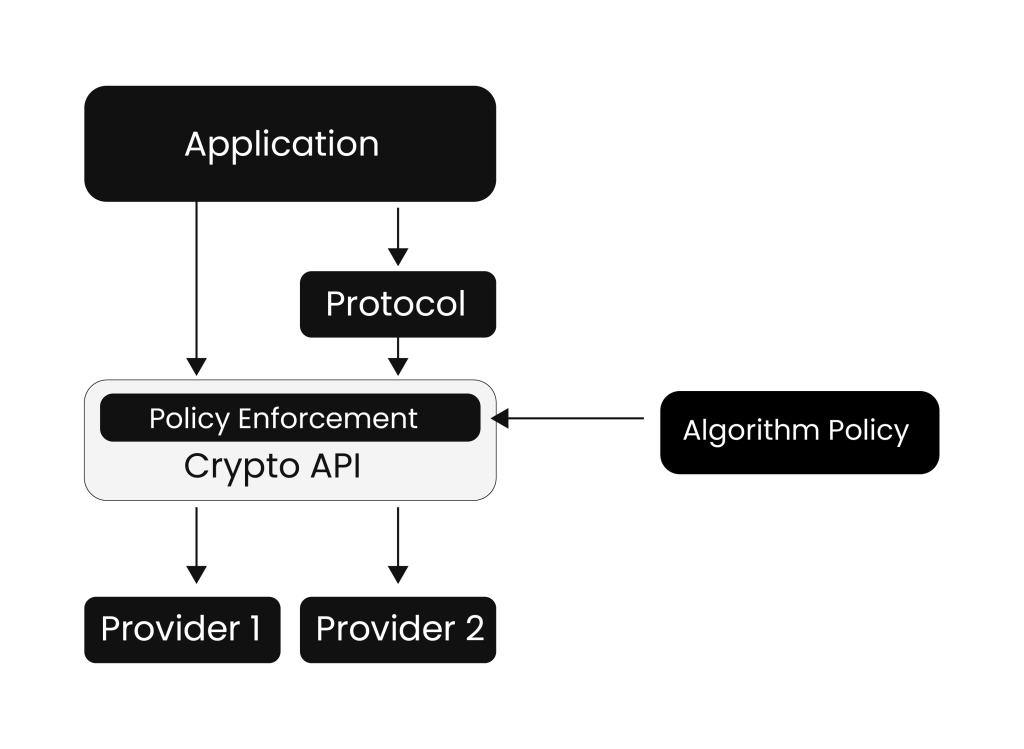

Applications using Crypto API

An application refers to the end-user or process that executes cryptographic functions. The protocol defines the rules for communication and data transfer, ensuring that data is exchanged securely and consistently. Policy enforcement is typically handled through a cryptographic API, which implements the policies set by the Chief Information Security Officer (CISO) to determine which cryptographic algorithms are allowed. Providers are cryptographic service providers (CSPs) that offer supported algorithms through software libraries, hardware modules, or cloud-based services, based on the organizational requirements.
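The Triple DES rule above translates into a per-operation policy check that a provider could run before dispatching to any implementation. This is a minimal sketch with a hypothetical policy table; unlisted algorithm and operation pairs are denied by default.

```python
# Hypothetical enterprise policy, keyed by (algorithm, operation).
POLICY = {
    ("AES-256-GCM", "encrypt"): True,
    ("AES-256-GCM", "decrypt"): True,
    ("3DES", "encrypt"): False,  # no new data may be protected with Triple DES
    ("3DES", "decrypt"): True,   # legacy data can still be read
}


class PolicyViolation(Exception):
    pass


def enforce(algorithm: str, operation: str) -> None:
    """Raise unless the policy explicitly allows this algorithm/operation pair."""
    if not POLICY.get((algorithm, operation), False):  # default deny
        raise PolicyViolation(f"{operation} with {algorithm} is not permitted")


def run(algorithm: str, operation: str) -> str:
    try:
        enforce(algorithm, operation)
        return "allowed"
    except PolicyViolation as err:
        return f"blocked: {err}"


for alg, op in [("3DES", "decrypt"), ("3DES", "encrypt"), ("RC4", "encrypt")]:
    print(run(alg, op))
# allowed
# blocked: encrypt with 3DES is not permitted
# blocked: encrypt with RC4 is not permitted
```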

- Using APIs in the Operating System Kernel

Some security protocols, such as IPsec and disk encryption, need to operate in the operating system kernel, the core of the operating system that is loaded early in the boot process and manages all system resources. To support crypto agility, the kernel must have access to a crypto API, but typically only a subset of cryptographic functionality is available, depending on the operations the kernel needs.

Since supported algorithms are often determined at build time, adding new ones post-build (e.g., via plugins) can be difficult. As a result, crypto agility is more limited at the kernel level compared to user-space applications. Some systems also perform self-tests during boot to ensure cryptographic functions work as expected, but long-term crypto agility depends on sound initial decisions aligned with evolving cryptographic standards.

- Hardware Considerations for Crypto Agility

Hardware-based cryptography may use dedicated chips like Trusted Platform Modules (TPMs), Hardware Security Modules (HSMs), or personal crypto tokens that securely store private keys and handle cryptographic operations. These devices offer strong security but are much harder to update than software. Some CPUs offer built-in instructions to accelerate specific crypto functions, improving efficiency but not necessarily agility.

Achieving agility at this layer requires selecting strong, future-proof algorithms and close collaboration between developers and cryptographers to anticipate long-term needs.

Key Trade-Offs and Areas for Improvement

NIST emphasizes that crypto agility is a shared responsibility between cryptographers, developers, implementers, and security practitioners. To be actionable, crypto-agility requirements must be customized to fit the specific needs and limitations of each system. This section outlines core challenges, key trade-offs, and areas that need further improvement.

- Resource Limitations

Resource limitations are one of the most difficult challenges for achieving crypto agility. Protocols often need to support multiple cryptographic algorithms, but newer algorithms, like post-quantum ones, often require much larger keys, signatures, or ciphertexts than those they replace. These larger sizes can overwhelm existing protocol limits or hardware capabilities. Protocol designers must anticipate these demands to avoid shortsighted design decisions that hinder future transitions.

Hardware limitations pose another challenge, as a single hardware platform may not have enough capacity to support multiple algorithms. Algorithm designers should therefore favor reusability across algorithms, so that common subroutines such as hash functions can be shared to save hardware resources, rather than relying on unique components that are rarely used.

There is also a growing need to design algorithms based on diverse assumptions so that if one fails, others still provide security.

- Agility-Aware Design

Crypto APIs provide a practical means to substitute cryptographic algorithms without requiring a complete rewrite of application logic. This flexibility is essential when algorithms are deprecated due to emerging threats. However, achieving crypto agility at the kernel level is more challenging, as cryptographic functions are often hardcoded during kernel compilation, making post-deployment updates difficult.

To address this, NIST recommends designing APIs and interfaces that do not assume fixed algorithms or key sizes. Protocols that support negotiation mechanisms, such as TLS cipher suites, should be used to allow flexibility in selecting cryptographic methods. Additionally, it is beneficial for standards to include a “Crypto Agility Considerations” section to guide developers in creating systems that can easily adapt to evolving cryptographic requirements over time. These practices help ensure that systems remain secure and adaptable as cryptographic needs change.
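One way to avoid assuming fixed algorithms or key sizes is to carry an algorithm identifier and an explicit length with every value. In this sketch the wire format (2-byte identifier, 4-byte length) and the identifier values are hypothetical. A buffer sized for a 256-byte RSA-2048 signature would have to change to hold either of the signatures below, while this encoding carries both unchanged.

```python
import struct

# Hypothetical wire format: 2-byte algorithm identifier, 4-byte length, then the value.
HEADER = struct.Struct(">HI")


def encode(alg_id: int, value: bytes) -> bytes:
    """Prefix a value with its algorithm identifier and exact length."""
    return HEADER.pack(alg_id, len(value)) + value


def decode(buf: bytes) -> tuple[int, bytes]:
    """Inverse of encode(); rejects truncated input instead of guessing."""
    alg_id, length = HEADER.unpack_from(buf)
    value = buf[HEADER.size:HEADER.size + length]
    if len(value) != length:
        raise ValueError("truncated value")
    return alg_id, value


# Zero bytes stand in for real signatures: RSA-3072 (384 B) and ML-DSA-44 (2420 B).
samples = [(0x0001, bytes(384)), (0x0002, bytes(2420))]
for alg_id, sig in samples:
    decoded_id, decoded_sig = decode(encode(alg_id, sig))
    print(decoded_id, len(decoded_sig))
# 1 384
# 2 2420
```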

- Complexity and Security Risks

While crypto agility increases flexibility, it also introduces new complexity and risk. Supporting multiple algorithm options can lead to configuration errors, security bugs, and a broader attack surface. For example, if cipher suite negotiation is not protected, attackers can force a downgrade to weaker algorithms. Similarly, exposing too many cryptographic options in libraries or APIs can increase the risk of security flaws. Enterprise IT administrators must also keep configurations up to date as security requirements change.
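Negotiation only helps if it cannot be silently steered. The sketch below picks the client's most preferred mutually supported suite and then has each side authenticate the offer it saw, similar in spirit to the Finished messages in TLS 1.3, so a stripped offer is detected. The suite names are hypothetical, and the shared key is a placeholder; the check is only meaningful if that key comes from a key exchange the attacker cannot break.

```python
import hashlib
import hmac

SERVER_SUPPORTED = ["PQ_HYBRID_SUITE", "LEGACY_SUITE"]  # hypothetical suite names


def negotiate(client_offer: list[str], server_supported: list[str]) -> str:
    """Return the client's most-preferred suite that the server also supports."""
    for suite in client_offer:
        if suite in server_supported:
            return suite
    raise ValueError("no common cipher suite")


def transcript_mac(key: bytes, offer: list[str], chosen: str) -> bytes:
    """MAC over everything that was offered and chosen."""
    transcript = ("|".join(offer) + "->" + chosen).encode()
    return hmac.new(key, transcript, hashlib.sha256).digest()


key = bytes([1]) * 32  # placeholder for a key derived from the key exchange
client_offer = ["PQ_HYBRID_SUITE", "LEGACY_SUITE"]
seen_by_server = ["LEGACY_SUITE"]  # an on-path attacker stripped the stronger option

chosen = negotiate(seen_by_server, SERVER_SUPPORTED)
client_tag = transcript_mac(key, client_offer, chosen)    # client's view
server_tag = transcript_mac(key, seen_by_server, chosen)  # server's view
print(chosen, hmac.compare_digest(client_tag, server_tag))  # LEGACY_SUITE False
```

The mismatch tells both sides that the transcript was altered, so the handshake can be aborted instead of proceeding with the weaker suite.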

The transition from one algorithm to another is itself risky, yet most security evaluations today focus on static configurations rather than on the dynamics of moving between algorithms. Future assessments should explicitly treat cryptographic transitions as part of an organization’s security posture.

- Crypto Agility in Cloud

Cloud environments provide scalability and flexibility for cryptographic operations but can limit crypto agility due to vendor lock-in. Developers often rely on provider-specific cryptographic APIs, hardware, or key management services, which can restrict algorithm or provider changes.

Some cloud service providers offer access to external, application-specific HSMs to avoid lock-in, but this approach adds operational complexity. In addition, confidential computing architectures can prevent cloud providers from accessing sensitive data or keying material by isolating the processing environment. However, the provider may still retain administrative control, including the ability to remove the application entirely. In some cloud environments, the provider can even delete keys from an HSM without having direct access to those keys.

Therefore, to support crypto agility, organizations must carefully evaluate the cryptographic controls, flexibility, and responsibilities in their chosen cloud environment.

- Maturity Assessment for Crypto Agility

To help organizations evaluate and improve their crypto agility, NIST highlights the Crypto Agility Maturity Model (CAMM). This model defines five levels:

- Level 0: Not possible

- Level 1: Possible

- Level 2: Prepared

- Level 3: Practiced

- Level 4: Sophisticated

These levels assess how well a system or organization can handle crypto transitions. For example, a system that supports cryptographic modularity at Level 2 (“Prepared”) can replace individual cryptographic components without disrupting the rest of the system. Although CAMM is mostly descriptive today, it offers a valuable framework for guiding improvements. The concept of crypto agility maturity is still developing, and expanding the model with more precise metrics and broader applicability could further strengthen its value.

- Strategic Planning for Managing Crypto Risk

Crypto agility should be part of an organization’s long-term risk management strategy, not just a one-time effort. NIST recommends a proactive approach that blends governance, automation, and prioritization based on actual cryptographic risk.

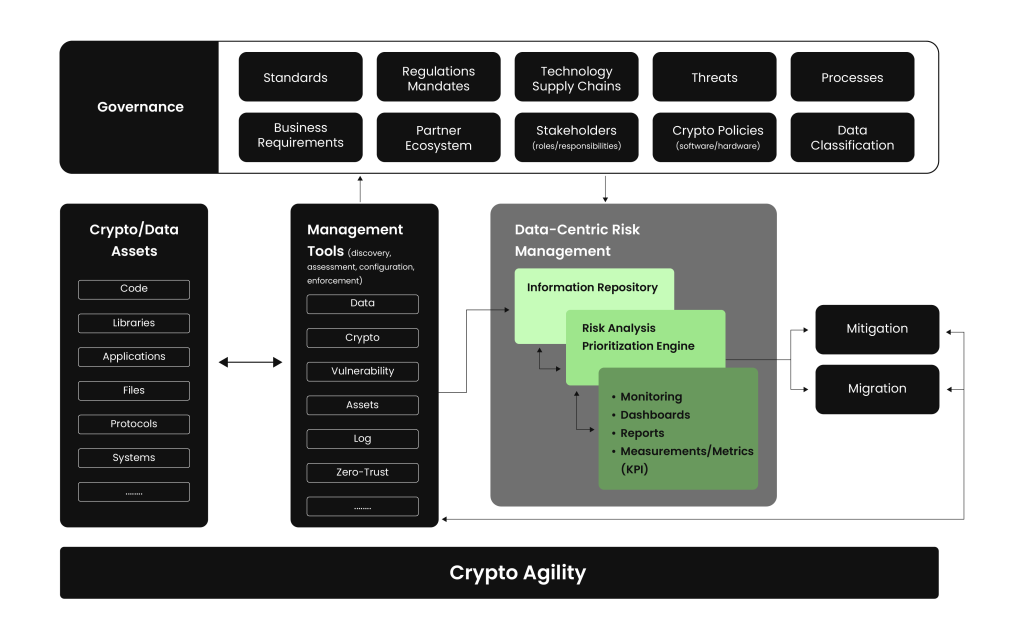

Crypto agility strategic plan for managing organization’s crypto risks

Key steps include integrating crypto agility into governance policies; creating inventories to identify where and how cryptography is used; evaluating enterprise management tools to ensure they support crypto risk monitoring; prioritizing systems based on exposure to weak cryptography; and taking action to either migrate to stronger algorithms or deploy mitigation techniques like zero-trust controls.

These activities should be cyclical, enabling organizations to evolve with new threats, technologies, and compliance mandates.
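The inventory and prioritization steps can start as simply as a scored list. The scoring below is a toy assumption (weak algorithms first, then internet exposure and how long the data must stay protected), and the asset records and algorithm groupings are hypothetical; a real program would tune the weights to its own risk model and rerun the ranking on each cycle.

```python
from dataclasses import dataclass

# Simplified algorithm groupings used only for this toy scoring.
CLASSICALLY_WEAK = {"3DES", "SHA-1"}
QUANTUM_VULNERABLE = {"RSA-2048", "RSA-3072", "ECDSA-P256", "ECDH-P256"}


@dataclass
class Asset:
    system: str
    algorithm: str
    internet_facing: bool
    data_lifetime_years: int  # how long the protected data must stay protected


def risk_score(asset: Asset) -> int:
    """Toy score: weak algorithms first, then exposure and data lifetime."""
    score = 0
    if asset.algorithm in CLASSICALLY_WEAK:
        score += 100
    elif asset.algorithm in QUANTUM_VULNERABLE:
        score += 50
    if asset.internet_facing:
        score += 20
    return score + min(asset.data_lifetime_years, 30)


inventory = [
    Asset("payroll-db", "AES-256-GCM", False, 7),
    Asset("vpn-gateway", "ECDH-P256", True, 10),
    Asset("legacy-billing", "3DES", False, 15),
]
for asset in sorted(inventory, key=risk_score, reverse=True):
    print(asset.system, risk_score(asset))
# legacy-billing 115
# vpn-gateway 80
# payroll-db 7
```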

- Standards, Regulations, and Compliance

Standards and regulations often drive crypto-agility efforts by mandating the transition away from obsolete algorithms. For example, NIST SP 800-131A disallowed encryption with Triple DES after the end of 2023.

Compliance with these standards is crucial. However, successful transitions also require practical strategies for handling legacy data, such as securely decrypting and re-encrypting, or re-signing, data that was protected with deprecated algorithms. Protocols and software must also be updated to reflect algorithm transitions.
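Re-protecting legacy data follows a verify-then-upgrade pattern. This sketch treats HMAC-SHA-1 tags as the algorithm being retired under a hypothetical policy and re-tags verified records with HMAC-SHA-256; it stands in for re-signing, since Python's standard library has no signature algorithms. The record format and function names are assumptions.

```python
import hmac

# Hypothetical policy: HMAC-SHA-1 tags are being retired in favor of HMAC-SHA-256.
LEGACY_ALG, CURRENT_ALG = "sha1", "sha256"


def protect(key: bytes, data: bytes, algorithm: str) -> tuple[str, bytes]:
    """Return (algorithm name, tag) so each record says how it was protected."""
    return algorithm, hmac.new(key, data, algorithm).digest()


def migrate(key: bytes, data: bytes, record: tuple[str, bytes]) -> tuple[str, bytes]:
    """Verify a record under its original algorithm, then re-protect it.
    Records that fail verification are never silently upgraded."""
    algorithm, tag = record
    if algorithm == CURRENT_ALG:
        return record  # already migrated
    expected = hmac.new(key, data, algorithm).digest()
    if not hmac.compare_digest(expected, tag):
        raise ValueError("legacy tag does not verify; refusing to re-protect")
    return protect(key, data, CURRENT_ALG)


key = bytes([2]) * 32
data = b"archived invoice #1"
old_record = protect(key, data, LEGACY_ALG)
new_record = migrate(key, data, old_record)
print(new_record[0], len(new_record[1]))  # sha256 32
```

Verifying before re-protecting matters: upgrading a record that was already tampered with would give corrupted data a fresh, stronger tag.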

- Enforcing Crypto Policies

Enforcing crypto-security policies is a critical yet difficult aspect of crypto agility. Organizations need to coordinate the transition from weak to strong algorithms in a way that avoids service disruptions. This requires synchronizing updates across systems, enforcing algorithm restrictions within protocols, and supporting secure configurations through APIs. This process demands close collaboration among cryptographers, developers, IT administrators, and policymakers. Effective crypto agility depends not just on technical solutions but also on shared visibility and communication across all stakeholders to ensure that systems remain secure and responsive in the face of evolving threats and regulatory requirements.

How Can Encryption Consulting’s PQC Advisory Services Help?

Navigating the transition to post-quantum cryptography requires careful planning, risk assessment, and expert guidance. At Encryption Consulting, we provide a structured approach to help organizations seamlessly integrate PQC into their security infrastructure.

We begin by assessing your organization’s current encryption environment and validating the scope of your PQC implementation to ensure it aligns with industry best practices. This initial step helps establish a solid foundation for a secure and efficient transition.

From there, we work with you to develop a comprehensive PQC program framework customized to your needs. This includes projections for external consultants and internal resources needed for a successful migration. As part of this process, we conduct in-depth evaluations of your on-premises, cloud, and SaaS environments, identifying vulnerabilities and providing strategic recommendations to mitigate quantum risks.

To support implementation, our team provides project management estimates, delivers training for your internal teams, and ensures your efforts stay aligned with evolving regulatory standards. Once the new cryptographic solutions are in place, we perform post-deployment validation to confirm that the implementation is both effective and resilient.

Conclusion

Cryptographic agility is not just a technical goal but a critical part of building resilient systems in a world of constant change. It requires collaboration between cryptographers, developers, implementers, and security professionals, all working together to stay ahead of emerging threats. As cryptographic standards shift and new risks appear, organizations must be prepared to adapt quickly and securely. By making agility a core part of system design and security planning, we can build a future where strong protection keeps pace with innovation.