Introduction

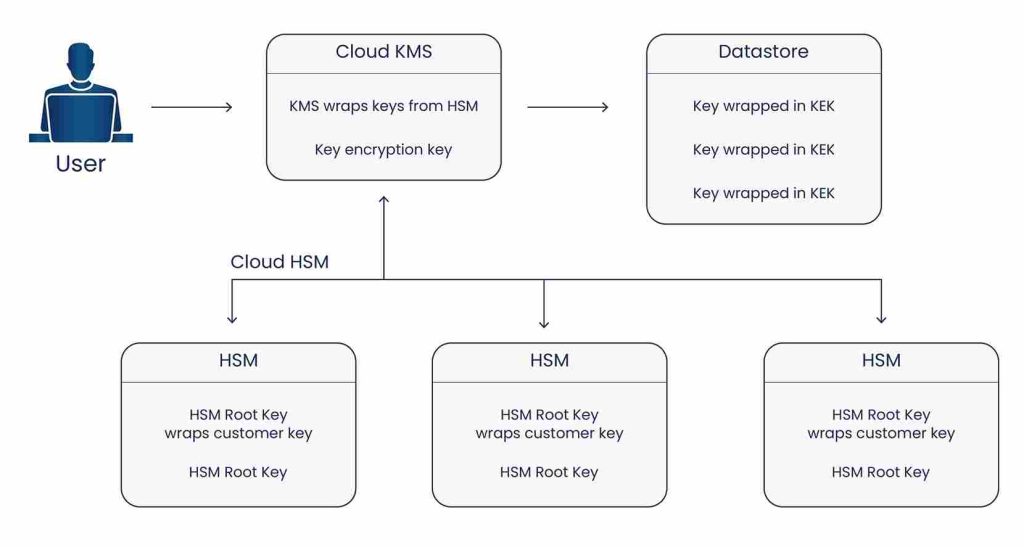

Google’s Cloud HSM service provides hardware-backed keys to Cloud KMS (Key Management Service). Customers can manage and use their cryptographic keys while those keys are protected by fully managed Hardware Security Modules (HSMs). The Cloud HSM service is highly available and scales horizontally without manual intervention. Keys are bound to the KMS region in which their key ring is defined: with Cloud HSM, the keys that users create and use cannot be materialized outside the cluster of HSMs belonging to the region specified at the time of key creation.

Using Cloud HSM, users can verifiably attest that their cryptographic keys are created and used exclusively within a hardware device. No application changes are required for existing Cloud KMS customers to use Cloud HSM. The Cloud HSM service is accessed using the same API and client libraries as the Cloud KMS software backend.
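Because Cloud HSM is reached through the same Cloud KMS API, the difference between a software key and an HSM key shows up mainly in the protection level requested at creation. The following is a minimal sketch that builds, but does not send, the REST request for creating a symmetric key. It assumes the public Cloud KMS v1 REST shape (`cryptoKeys` create with a `versionTemplate.protectionLevel` field); the helper name `create_key_request` and the project, location, and key ring values are hypothetical, and the field names should be checked against the current API reference.

```python
from urllib.parse import urlencode

KMS_ENDPOINT = "https://cloudkms.googleapis.com/v1"


def create_key_request(project, location, key_ring, key_id, protection_level):
    """Return (url, json_body) for a cryptoKeys.create call. Nothing is sent."""
    # The location in the parent path is the region the key is bound to.
    parent = f"projects/{project}/locations/{location}/keyRings/{key_ring}"
    url = f"{KMS_ENDPOINT}/{parent}/cryptoKeys?{urlencode({'cryptoKeyId': key_id})}"
    body = {
        "purpose": "ENCRYPT_DECRYPT",
        "versionTemplate": {
            "algorithm": "GOOGLE_SYMMETRIC_ENCRYPTION",
            # "SOFTWARE" for the software backend, "HSM" for Cloud HSM.
            "protectionLevel": protection_level,
        },
    }
    return url, body


# Hypothetical resource names, for illustration only.
software = create_key_request("my-project", "us-east1", "my-ring", "k1", "SOFTWARE")
hardware = create_key_request("my-project", "us-east1", "my-ring", "k1", "HSM")
```

In this sketch the two requests share the same URL and differ only in `protectionLevel`, which is consistent with the claim that existing Cloud KMS callers need no application changes.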

The Cloud HSM service uses HSMs that are FIPS 140-2 Level 3 validated and always run in FIPS mode. The FIPS standard specifies the cryptographic algorithms and random number generation used by the HSMs.

Provisioning and Handling of HSMs

Provisioning of HSMs is carried out in a lab equipped with numerous physical and logical safeguards, including multi-party authorization controls to help prevent single-actor compromise.

The following are Cloud HSM system-level invariants:

- Customer keys cannot be extracted as plaintext.

- Customer keys cannot be moved outside the region of origin.

- All configuration changes to provisioned HSMs are guarded by multiple security safeguards.

- Administrative operations are logged, adhering to separation of duties between Cloud HSM administrators and logging administrators.

- HSMs are designed to be protected from tampering, such as by the insertion of malicious hardware or software modifications, or unauthorized extraction of secrets, throughout the operational lifecycle.

Vendor-controlled firmware on Cloud HSM

HSM firmware is digitally signed by the HSM vendor. Google cannot create or update the HSM firmware. All firmware from the vendor is signed, including development firmware that is used for testing.

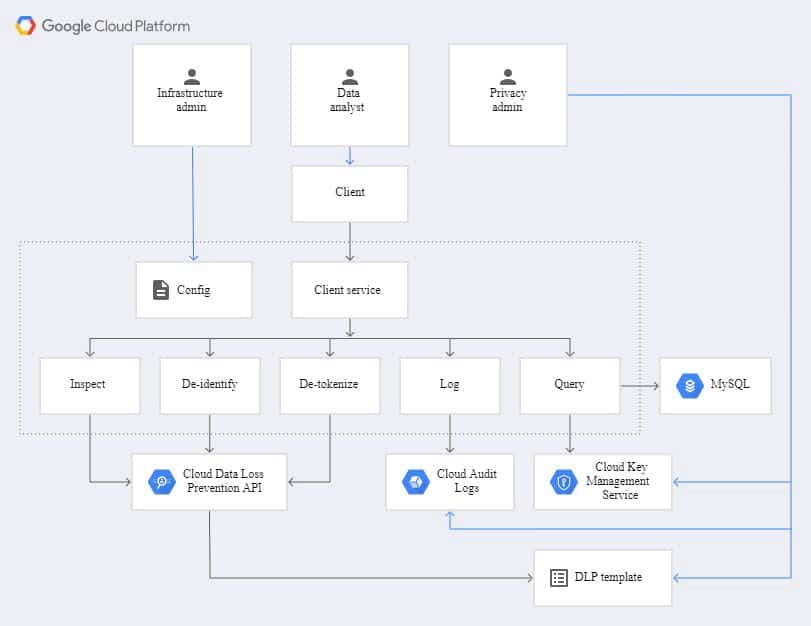

Google Cloud HSM Key Hierarchy

Cloud HSM wraps customer keys, and Cloud KMS keys in turn wrap the HSM keys; the wrapped result is then stored in Google’s datastores.

Cloud HSM has a key that controls the migration of key material within the administrative domain of Cloud HSM.

The root key of Cloud HSM has two primary characteristics:

- The root key is generated on the HSM and, throughout its lifespan, never leaves the well-defined boundaries of the HSM. However, the root key can be cloned, and backups of HSMs are allowed.

- The root key can be used as an encryption key to wrap the customer keys that HSMs use. Wrapped customer keys can be used on the HSM, but the HSM never returns an unwrapped customer key. HSMs use customer keys only to perform operations.
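The wrap-and-use flow described above can be illustrated with a toy model. This is a sketch under loud assumptions: the class `ToyHsm`, its methods, and the HMAC-based wrapping are all hypothetical and chosen only so the example runs with the Python standard library. It is not Google’s design, and a real HSM would use a standardized key-wrap mechanism inside tamper-resistant hardware.

```python
import hashlib
import hmac
import secrets


class ToyHsm:
    """Toy stand-in for an HSM. Illustrative only, not production cryptography."""

    def __init__(self) -> None:
        # The root key is created inside the "device"; no method returns it.
        self._root_key = secrets.token_bytes(32)

    def _pad(self, nonce: bytes) -> bytes:
        # One 32-byte pad per nonce, derived from the root key.
        return hmac.new(self._root_key, b"enc" + nonce, hashlib.sha256).digest()

    def _tag(self, nonce: bytes, ciphertext: bytes) -> bytes:
        # Integrity tag over the wrapped blob, also keyed by the root key.
        return hmac.new(self._root_key, b"mac" + nonce + ciphertext, hashlib.sha256).digest()

    def generate_wrapped_key(self) -> bytes:
        """Create a customer key and return it only in wrapped form."""
        customer_key = secrets.token_bytes(32)
        nonce = secrets.token_bytes(16)
        ciphertext = bytes(a ^ b for a, b in zip(customer_key, self._pad(nonce)))
        return nonce + ciphertext + self._tag(nonce, ciphertext)

    def _unwrap(self, wrapped: bytes) -> bytes:
        if len(wrapped) != 80:
            raise ValueError("unexpected wrapped key length")
        nonce, ciphertext, tag = wrapped[:16], wrapped[16:48], wrapped[48:]
        if not hmac.compare_digest(tag, self._tag(nonce, ciphertext)):
            raise ValueError("wrapped key failed its integrity check")
        return bytes(a ^ b for a, b in zip(ciphertext, self._pad(nonce)))

    def mac(self, wrapped: bytes, message: bytes) -> bytes:
        """Use the customer key inside the device; only the result leaves."""
        return hmac.new(self._unwrap(wrapped), message, hashlib.sha256).digest()


hsm = ToyHsm()
blob = hsm.generate_wrapped_key()  # opaque blob, suitable for external storage
result = hsm.mac(blob, b"hello")   # the key is used, but never returned
```

The public surface of the toy has no method that hands back a plaintext customer key, and a blob wrapped under one root key fails the integrity check on an instance with a different root key.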

Key storage

HSMs are not used as permanent storage for keys; they hold keys only while the keys are in use. Because HSM storage is constrained, HSM keys are encrypted and then stored in the Cloud KMS key datastore.

The Cloud KMS datastore is highly available, durable, and heavily protected. Some of its features are:

- Availability: Cloud KMS uses Google’s internal data storage, which is highly available and also supports a number of Google’s critical systems.

- Durability: Cloud KMS uses authenticated encryption to store customer key material in the datastore. Additionally, all metadata is authenticated using a hash-based message authentication code (HMAC) to ensure it has not been altered or corrupted while at rest. Every hour, a batch job scans all key material and metadata and verifies that the HMACs are valid and that the key material can be decrypted successfully.

  Cloud KMS uses several types of backups for the datastore:

  - By default, the datastore keeps a change history of every row for several hours. In an emergency, this lifetime can be extended to provide more time to remediate issues.

  - Every hour, the datastore records a snapshot. The snapshot can be validated and used for restoration, if needed. These snapshots are kept for four days.

  - Every day, a full backup is copied to disk and tape.

- Residency: Cloud KMS datastore backups reside in their associated Google Cloud region. These backups are all encrypted at rest.

- Protection: At the Cloud KMS application layer, customer key material is encrypted before it is stored. Datastore engineers do not have access to plaintext customer key material. Additionally, the datastore encrypts all data it manages before writing to permanent storage. This means access to underlying storage layers, including disks or tape, would not allow access to even the encrypted Cloud KMS data without access to the datastore encryption keys. These datastore encryption keys are stored in Google’s internal KMS.
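The periodic integrity scan described under Durability can be sketched with Python’s standard `hmac` module. This is a minimal illustration, not Cloud KMS’s implementation: the function names, the JSON serialization of metadata, and the sample rows are all assumptions made for the example.

```python
import hashlib
import hmac
import json


def metadata_tag(mac_key: bytes, metadata: dict) -> bytes:
    """Compute an HMAC-SHA256 tag over a canonical encoding of the metadata."""
    # Sorted keys and fixed separators so equal metadata yields equal bytes.
    encoded = json.dumps(metadata, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hmac.new(mac_key, encoded, hashlib.sha256).digest()


def scan(mac_key: bytes, rows: list) -> list:
    """Return the indexes of rows whose stored tag no longer matches."""
    return [
        index
        for index, (metadata, stored_tag) in enumerate(rows)
        if not hmac.compare_digest(stored_tag, metadata_tag(mac_key, metadata))
    ]


# Hypothetical sample data.
key = b"example-mac-key-for-illustration"
good = {"name": "key-a", "state": "ENABLED"}
bad = {"name": "key-b", "state": "ENABLED"}
rows = [(good, metadata_tag(key, good)), (bad, metadata_tag(key, bad))]

bad["state"] = "DISABLED"  # simulate corruption after the tag was stored
print(scan(key, rows))     # prints [1]
```

A batch job built along these lines would flag any row that was altered at rest, since recomputing the tag requires the MAC key.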

Conclusion

Google Cloud HSM is a cluster of FIPS 140-2 Level 3 validated Hardware Security Modules that lets customers host encryption keys and perform cryptographic operations with them. Although Cloud HSM is very similar to most network HSMs, bringing HSMs to the cloud required Google to make some changes. Nevertheless, Cloud HSM is a strong option on Google Cloud Platform for keeping data secure and private on tamper-resistant HSMs.