Being compliant means meeting the minimum bar by following established rules and passing audits. It shows that your organization can align with frameworks, but it often reflects a snapshot in time rather than ongoing security. Resilience, on the other hand, is about preparing for the unexpected by building systems that can withstand failures, adapt to new threats, and recover quickly without disruption. This gap between compliance and resilience becomes especially important when examining PKI, as it can determine whether your business continues to operate smoothly or comes to a halt in the event of a sudden failure.

If your organization has invested in cybersecurity, you have likely aligned your practices with established frameworks and regulations such as NIST, PCI DSS, HIPAA, and ISO. You’ve rolled out technical controls, implemented robust authentication mechanisms, logged activities, deployed endpoint protection, and maybe even built layered defenses like multi-factor authentication and conditional access policies.

In short, you’ve staffed up, passed audits, and ticked all the right boxes, but there’s a foundational question that often gets overlooked: Is your PKI healthy?

Most organizations assume the answer is yes. After all, certificates are being issued, TLS connections appear secure, users can log in, and everything seems to be functioning as expected. But what looks fine on the surface can hide serious risks. It is important to understand that PKI health is not the same as your overall security posture. Your security posture reflects the strength of your defenses across the organization, while PKI health is specifically about the reliability and proper functioning of your certificate and key infrastructure. Even an organization with a strong security posture can be severely impacted if its PKI fails.

An unhealthy or poorly maintained Public Key Infrastructure (PKI) is one of the most overlooked security risks in modern enterprise environments. When PKI fails, whether due to expired certificates, misconfigured CAs, or broken revocation chains, it doesn’t fail quietly. It disrupts authentication, access, and encryption simultaneously, bringing critical business processes to a halt.

Beyond Compliance: Strengthening PKI Security

Compliance frameworks are designed to set minimum standards, not to guarantee resilience. They define the baseline requirements for key lengths, approved encryption algorithms, certificate validity periods, and audit logging that organizations must satisfy.

Compliance is about proving you’re secure today, while resilience ensures your PKI stays secure and operational tomorrow, no matter what changes, breaks, or evolves. Resilience is the ability of your PKI to maintain secure, reliable operations continuously, even as certificates expire, cryptographic standards evolve, or infrastructure components fail. It includes proactive monitoring, automated lifecycle management, rapid incident response, and the capacity to adapt to both planned changes and unexpected disruptions without service interruptions.

What compliance leaves out are the day-to-day operational challenges of PKI, such as continuous monitoring, automated certificate renewals, detection of shadow or orphan certificates, and readiness for cryptographic shifts like post-quantum migration. A PKI can appear fully compliant on paper while still being fragile in practice, leaving it vulnerable to expired certificates, misconfigured trust chains, or outdated cryptography that lingers in templates.

Think of it like aviation. An aircraft can pass inspections and meet all regulatory requirements, but if it isn’t maintained between checks, small issues can build into catastrophic failures mid-flight. Similarly, a PKI that passes an audit may still be dangerously close to failure if it isn’t actively managed, monitored, and kept agile for future cryptographic shifts.

The risks aren’t theoretical. For instance, in 2020, Microsoft Teams experienced a widespread outage because an authentication certificate expired unexpectedly. Even though the organization met compliance requirements, the expired certificate prevented users from authenticating and accessing services, causing hours of disruption across multiple regions. This incident highlights how even compliant PKI systems can fail operationally if certificates aren’t actively monitored and managed.

What is PKI and Why Should You Care?

Public Key Infrastructure (PKI) is the foundation of digital trust in any modern IT environment. It provides mechanisms that enable secure communication, trusted identity verification, and encrypted data exchange. At its core, PKI enables five essential functions: identity, authentication, confidentiality, data integrity, and access control.

Identity

PKI ensures that every entity in your environment, whether a user, device, server, or application, has a unique, verifiable identity. It provides identity through digital certificates, which are issued by a trusted Certificate Authority (CA). Each certificate contains a unique public key and metadata about the entity it represents, such as a username, device ID, or domain name. The CA acts as a trusted third party, vouching for the authenticity of the entity. This allows you to confirm who is connecting to your systems, detect rogue devices, and prevent unauthorized access from impersonated accounts.

Authentication

Claiming an identity is not enough; it must be proven. PKI enables authentication by using certificates to prove a user or device is who they claim to be. Each certificate has a public key, and only the owner with the matching private key can successfully authenticate. This provides strong cryptographic authentication, ensuring that only verified users and devices can access your systems, mitigating credential theft attacks, and strengthening multi-factor authentication.

Confidentiality

PKI provides the keys and trust model needed to encrypt sensitive communications and data in transit. Using public-private key pairs, data encrypted with a public key can only be decrypted with the corresponding private key. This ensures confidentiality by protecting data from eavesdropping and keeping information unreadable even if communications are intercepted.

Data Integrity

PKI ensures that information has not been altered in transit. The sender signs the data with their private key, creating a digital signature, and the recipient uses the sender’s public key to verify it. If the signature matches, the recipient can trust that the data is authentic and unchanged. This mechanism allows recipients to verify that messages, files, code, or transactions received are exactly as the sender intended, protecting against tampering and unauthorized modifications.
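To make the sign-then-verify flow concrete, here is a minimal sketch using textbook RSA and only the Python standard library. The fixed primes, the missing padding scheme, and the function names `sign` and `verify` are assumptions made for illustration. Production systems use a vetted cryptographic library, randomly generated keys of 2048 bits or more, and a padding scheme such as PSS.

```python
import hashlib

# Toy parameters for illustration only: two well-known Mersenne primes.
# Real keys are generated randomly and are far larger, and real
# signatures add a padding scheme, which is omitted here.
P = 2**61 - 1
Q = 2**89 - 1
N = P * Q                          # public modulus
E = 65537                          # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))  # private exponent (needs Python 3.8+)


def digest_as_int(message: bytes) -> int:
    """Hash the message and reduce the digest into the range of the modulus."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % N


def sign(message: bytes) -> int:
    """Sender: transform the digest with the private exponent."""
    return pow(digest_as_int(message), D, N)


def verify(message: bytes, signature: int) -> bool:
    """Recipient: reverse the transform with the public exponent and compare."""
    return pow(signature, E, N) == digest_as_int(message)


firmware = b"firmware v1.2"
signature = sign(firmware)
unchanged_ok = verify(firmware, signature)
tampered_ok = verify(b"firmware v1.3", signature)
print(unchanged_ok, tampered_ok)
```

The recipient never needs the private exponent `D`. Verification uses only the public values `N` and `E`, which is exactly what a certificate distributes.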

Access Control

Certificates issued through PKI define and enforce who has permission to access specific systems or resources. PKI supports access control by binding certificates to specific roles, policies, or systems, allowing access decisions to be enforced based on certificate attributes and the trust placed in the issuing CA. This ensures that users or machines can only access authorized systems, reduces lateral movement during a breach, and enforces least-privilege access policies.

In practical terms, PKI is what makes it possible to securely log in to systems, access corporate VPNs, encrypt emails, sign software, authenticate devices, and build trust between internal and external systems. Whether it’s a smart card login, a digitally signed firmware update, or a trusted TLS handshake, PKI is working silently in the background to enforce trust.

The importance of PKI becomes crystal clear when something goes wrong because when PKI fails, everything that depends on it can fail as well. This includes your ability to operate securely, authenticate users, and maintain compliance. PKI is not just another IT system. It is a critical part of your core infrastructure. The health of your authentication, encryption, and identity ecosystem depends entirely on how well your PKI is managed.

Ignoring PKI risks does not make them disappear. Unmanaged or neglected PKI is a silent vulnerability that often shows no warning until something critical stops working. So, if your organization relies on secure access, trusted identities, or encrypted communication, which virtually all do, then you absolutely need to care about the state of your PKI.

In the following sections, we will explore the hidden risks of compliant PKI, the business impact of failures, real-world cases that highlight the consequences of neglected PKI, and practical strategies to strengthen the resilience of your PKI infrastructure.


The Hidden PKI Risks of “Compliant” Organizations

Despite rigorous audits, many organizations remain vulnerable due to overlooked operational gaps. A study by DigiCert and Ponemon Institute found that 62% of organizations experienced outages or security incidents caused by digital certificate issues, and 43% of organizations don’t have a complete inventory of the certificates they manage, creating potential blind spots.

Even if your PKI meets all compliance requirements, hidden operational and security risks can still threaten availability and trust. Some of the most common gaps include:

- Point-in-Time vs. Real-Time Security

Audits validate PKI at a specific moment in time, providing a snapshot of compliance. However, PKI risks evolve constantly. Certificates can expire unexpectedly, revocation chains may break, or subordinate CAs may go offline, causing outages weeks or months after the audit ends. Without continuous monitoring and automated alerts, these issues often remain undetected until critical systems fail.

- Cryptographic Agility Gaps

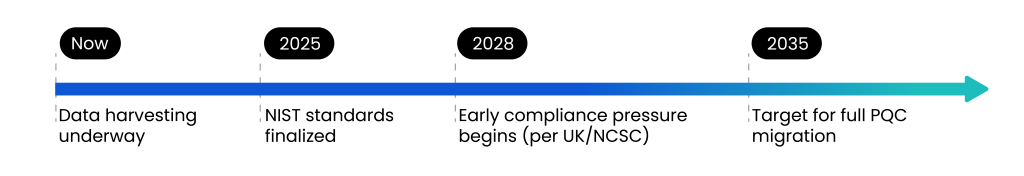

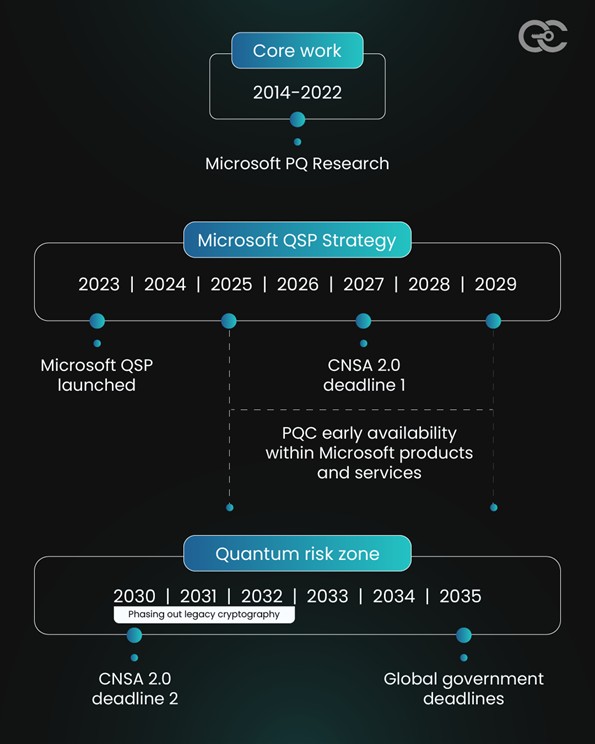

Compliance frameworks often accept algorithms like RSA-2048 or ECC, which are secure today. But the cryptographic landscape is evolving rapidly, with post-quantum cryptography (PQC) on the horizon. A compliant PKI that cannot easily migrate to new algorithms or update certificate templates leaves organizations exposed to future attacks, potentially requiring an emergency, organization-wide re-issuance of keys and certificates.

Preparing for PQC involves not just selecting new algorithms but also validating that applications, servers, network devices, and Hardware Security Modules (HSMs) support them, updating certificate profiles and trust hierarchies, and planning phased key and certificate rollouts to minimize service disruption. Without proactive cryptographic agility, organizations risk unexpected outages, compromised trust chains, and costly remediation efforts when cryptographic standards evolve.

- Shadow and Orphan Certificates

Certificates issued outside central IT management, such as in test labs, developer environments, or legacy systems, often escape audits. These shadow or orphan certificates can be forgotten or left unmanaged. Examples include TLS certificates for internal staging servers, self-signed certificates used in development pipelines, certificates embedded in legacy applications, or device certificates for IoT sensors and network appliances. A single neglected certificate may trigger service outages, break authentication chains, or provide an attack vector for malicious actors, especially if it uses weak cryptography or default configurations.

- Weak Operational Practices

Audits may only require minimum key lengths and validity periods. However, using long-lived certificates, such as two-year or three-year certificates, increases the risk of private key compromise, reliance on outdated algorithms, and challenges in managing the certificate lifecycle. This can create vulnerabilities, extend the window of opportunity for attackers, and lead to service disruptions if manual issuance and renewal processes fail.

- Limited Visibility and Monitoring

While most compliance frameworks ensure baseline PKI requirements are met, they often do not require continuous certificate inventory, real-time monitoring, or automated reporting for certificates and keys. Without these measures, expired, misconfigured, or compromised certificates can silently accumulate, creating blind spots that threaten both system availability and security. Continuous monitoring, alerting, and health checks are essential to prevent outages and maintain trust across internal and external systems.

Even if your PKI meets all compliance requirements, hidden risks can still compromise security and availability. Continuous management, monitoring, and proactive practices are essential to ensure true resilience.
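One way to surface shadow certificates is to reconcile what a network scan actually observes against what the managed inventory says should exist. The sketch below assumes you already have both data sets. The `SeenCert` record, the `find_shadow_certs` helper, and the host names are hypothetical, and placeholder byte strings stand in for real DER-encoded certificates.

```python
import hashlib
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass(frozen=True)
class SeenCert:
    host: str   # where the scanner observed the certificate
    der: bytes  # raw certificate bytes as presented by that host


def fingerprint(der: bytes) -> str:
    """SHA-256 fingerprint, a common way to identify one specific certificate."""
    return hashlib.sha256(der).hexdigest()


def find_shadow_certs(managed: Set[str], scanned: List[SeenCert]) -> Dict[str, List[str]]:
    """Return {fingerprint: [hosts]} for certificates seen on the network
    but missing from the managed inventory."""
    shadow: Dict[str, List[str]] = {}
    for cert in scanned:
        fp = fingerprint(cert.der)
        if fp not in managed:
            shadow.setdefault(fp, []).append(cert.host)
    return shadow


# Placeholder bytes stand in for real DER-encoded certificates.
managed = {fingerprint(b"cert-issued-by-corporate-ca")}
scan = [
    SeenCert("intranet.example.internal", b"cert-issued-by-corporate-ca"),
    SeenCert("staging.example.internal", b"self-signed-dev-cert"),
    SeenCert("sensor-17.example.internal", b"self-signed-dev-cert"),
]
shadow = find_shadow_certs(managed, scan)
for fp, hosts in shadow.items():
    print(fp[:16], hosts)
```

Grouping by fingerprint also shows when the same unmanaged certificate has been copied to several hosts, which is a common pattern with self-signed development certificates.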

The Business Impact of PKI Failures

PKI is deeply embedded in nearly every part of enterprise IT, from securing logins and encrypting traffic to authenticating devices and enabling trusted transactions. When it fails, the impact spreads quickly across business operations, causing disruptions that go far beyond IT.

Here are the most common business impacts of PKI failures:

1. Service Outages and Downtime

A single expired or misconfigured certificate can bring down websites, APIs, or authentication systems, instantly halting business processes. For customer-facing platforms like e-commerce or SaaS services, even brief outages can translate into lost revenue, broken customer experiences, and damage to business continuity. According to a 2024 study, the average cost of a single minute of downtime has increased from $5,600 to around $9,000.

2. Security Breaches and Data Exposure

Weak algorithms, certificates using insecure key sizes like RSA 1024, or unmanaged shadow certificates can be exploited by attackers to impersonate systems, intercept communications, or gain unauthorized access. These lapses create direct pathways for breaches that compromise sensitive customer data, intellectual property, and even critical infrastructure.

3. Regulatory and Compliance Failures

Frameworks such as PCI DSS, HIPAA, and GDPR mandate strong encryption and reliable certificate management. When PKI incidents result in service outages or data exposure, organizations risk failing audits, incurring heavy fines, and facing additional oversight, all of which impact both financial stability and brand credibility.

4. Supply Chain and Partner Disruptions

PKI underpins trust between business partners, vendors, and third-party integrations. An expired signing certificate or a broken trust chain can disrupt vendor APIs, federated identity systems, and software distribution, creating operational delays and eroding trust across the supply chain ecosystem.

For example, if a logistics provider’s SSL/TLS certificate expires, a retailer might not be able to retrieve real-time shipping updates or process new orders, causing shipment delays, missed SLAs, and customer dissatisfaction. In tightly integrated supply chains, even minor certificate failures can have ripple effects across multiple partners.

5. Long-Term Reputation Damage

Customers and partners expect seamless, secure digital interactions. Browser warnings, login failures, or insecure communication caused by PKI lapses erode confidence and trust. Even after technical fixes are applied, reputational damage often lingers, influencing customer loyalty and competitive standing in the market.

PKI failures may start with a single overlooked certificate or weak control, but their impact quickly escalates across business operations. Proactive management and continuous monitoring are the only ways to prevent these technical missteps from becoming enterprise-wide crises.

Real-World Cases from Neglected PKI

Even if your PKI appears compliant, neglecting its management can lead to outages, breaches, and operational chaos. The following real-world incidents show how even minor oversights in certificates or Root CAs can have major consequences.

1. Equifax Data Breach (2017)

One of the most infamous security breaches in history stemmed in part from an expired certificate on a network traffic inspection device. For 10 months, the expired certificate prevented Equifax from inspecting encrypted traffic, leaving attackers free to exploit a known vulnerability in the Apache Struts web framework. The result was catastrophic: personal data of more than 145 million consumers was compromised. This incident demonstrates how a single overlooked certificate can eliminate critical visibility and lead to massive financial and reputational damage.

2. DigiNotar CA Compromise (2011)

Dutch certificate authority DigiNotar was hacked, and attackers issued over 500 fraudulent certificates for domains like Google and Skype. This broke the integrity of the trust chain at its core. Browser vendors removed trust in DigiNotar’s CA certificates, the company went bankrupt, and it became a landmark case of why CA security and monitoring are critical.

3. Twitter Outage (2022)

In 2022, Twitter experienced a major outage caused by an internal systems change. The incident disrupted internal tools and core services across the platform, limiting its ability to function at scale. This highlighted how lapses in PKI management can affect not only external user-facing systems but also internal operational tools that employees rely on daily.

4. ServiceNow Root Certificate Failure (2024)

ServiceNow, a leading enterprise SaaS platform, faced a significant disruption when root certificate mismanagement undermined its services. The failure illustrated how issues at the top of a trust hierarchy can ripple across dependent systems, breaking authentication and trust across thousands of organizations relying on ServiceNow for critical workflows.

5. California COVID-19 Reporting Issue (2020)

During the height of the pandemic, California’s COVID-19 reporting system failed to process thousands of case reports due to an expired certificate. The result was a backlog of unreported cases and delayed public health decisions at a critical time. This incident highlighted that PKI failures are not limited to corporate IT but can directly affect public safety and crisis response.

These incidents highlight that compliance alone cannot prevent failures. Continuous PKI monitoring, automated certificate management, and proactive governance are essential to maintain security and operational resilience.
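Several of the incidents above trace back to an expiry date that nobody was watching. As a minimal sketch of what continuous expiry monitoring boils down to, the function below classifies a certificate by the time left before its `notAfter` date. The 30-day and 7-day thresholds, the `expiry_status` name, and the sample host names and dates are assumptions for illustration. A real monitor would read the dates from your inventory or from live endpoints.

```python
from datetime import datetime, timedelta, timezone


def expiry_status(not_after: datetime, now: datetime,
                  warn_days: int = 30, critical_days: int = 7) -> str:
    """Classify a certificate by how close it is to its notAfter date."""
    remaining = not_after - now
    if remaining <= timedelta(0):
        return "expired"
    if remaining <= timedelta(days=critical_days):
        return "critical"
    if remaining <= timedelta(days=warn_days):
        return "warning"
    return "ok"


# Hypothetical inventory entries: name -> notAfter.
now = datetime(2025, 1, 1, tzinfo=timezone.utc)
certs = {
    "vpn-gateway": datetime(2024, 12, 20, tzinfo=timezone.utc),
    "api-frontend": datetime(2025, 1, 5, tzinfo=timezone.utc),
    "mail-relay": datetime(2025, 1, 25, tzinfo=timezone.utc),
    "build-server": datetime(2025, 6, 1, tzinfo=timezone.utc),
}
report = {name: expiry_status(not_after, now) for name, not_after in certs.items()}
for name, status in report.items():
    print(f"{name}: {status}")
```

Running a check like this on a schedule and routing anything other than `ok` to an alerting channel turns expiry from a surprise into a ticket.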


Public Key Infrastructure often fades into the background once it’s deployed. Many organizations treat PKI as a one-time project; they design it, configure it, pass the audit, and then move on. The result is that PKI becomes invisible until something breaks.

Common signs of this “set-and-forget” approach include:

- Abandoned Certificate Authorities (CAs)

Root or intermediate CAs are still trusted but have no defined owner, no documented key storage policy, or no recovery procedure if a private key is lost or compromised.

- Outdated Cryptography

Legacy certificate templates still issue weak keys (e.g., RSA-1024, ECC with unsupported curves) or deprecated hash functions like SHA-1. These are often left in place for backward compatibility, exposing services to downgrade and collision attacks.

- Broken Revocation Infrastructure

CRL Distribution Points (CDPs) or Online Certificate Status Protocol (OCSP) responders are missing, misconfigured, or unreachable. As a result, clients may incorrectly accept revoked certificates as valid.

- Long-Lived Certificates

Validity periods of two to three years still exist. They widen the attack window if a key is compromised, prolong reliance on outdated algorithms, and make the certificate lifecycle harder to manage.

- Lack of Monitoring Hooks

PKI health is not integrated into SIEMs, certificate lifecycle tools, or uptime monitoring, allowing expired, misused, or rogue certificates to go unnoticed until they cause outages.

The result is a PKI with unknown or poorly documented Certificate Authorities (CAs), deprecated algorithms such as SHA-1 or RSA-1024 still in use, misconfigured or expired certificate templates, broken or missing revocation infrastructure (CRL/OCSP), and root and intermediate CAs without defined ownership or recovery plans.

Most importantly, compliance audits don’t always catch this. Because certificates are being issued and browsers show no errors, many teams assume everything is fine. But under the surface, vulnerabilities are silently stacking up.
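The warning signs listed above can be checked mechanically once template and CA settings are exported into a structured form. The following is a rough sketch of such a lint. The `CertProfile` fields, the 2048-bit RSA floor, and the 398-day validity ceiling are assumptions chosen to mirror the examples in this article, not values taken from any particular framework.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class CertProfile:
    name: str
    key_algorithm: str               # e.g. "RSA" or "ECDSA"
    key_bits: int
    signature_hash: str              # e.g. "SHA-256"
    validity_days: int
    owner: Optional[str] = None
    revocation_urls: List[str] = field(default_factory=list)  # CDP or OCSP URLs


def lint_profile(p: CertProfile, max_validity_days: int = 398) -> List[str]:
    """Return the set-and-forget warning signs found in one profile."""
    findings = []
    if p.owner is None:
        findings.append("no defined owner")
    if p.key_algorithm.upper() == "RSA" and p.key_bits < 2048:
        findings.append(f"weak key: RSA-{p.key_bits}")
    if p.signature_hash.upper().replace("-", "") in {"SHA1", "MD5"}:
        findings.append(f"deprecated hash: {p.signature_hash}")
    if not p.revocation_urls:
        findings.append("no CRL or OCSP endpoint configured")
    if p.validity_days > max_validity_days:
        findings.append(f"long-lived: {p.validity_days} days")
    return findings


# Hypothetical template exports.
legacy = CertProfile("LegacyWebServer", "RSA", 1024, "SHA-1", 1095)
modern = CertProfile(
    "WebServerV2", "ECDSA", 256, "SHA-256", 90,
    owner="pki-team",
    revocation_urls=["http://crl.example.internal/issuing-ca.crl"],
)
legacy_findings = lint_profile(legacy)
modern_findings = lint_profile(modern)
print(legacy_findings)
print(modern_findings)
```

A lint like this is only as good as its input, so the harder part in practice is exporting every template and CA configuration, including the ones nobody remembers creating.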

Building PKI Resilience Beyond Compliance

If compliance isn’t enough, how do you strengthen PKI? The answer is to take proactive steps to strengthen infrastructure, operational practices, and cryptographic agility. Here’s how:

- Maintain a Complete Cryptographic Inventory

A resilient PKI starts with visibility. Track every certificate, key, and cryptographic dependency across on-premises systems, cloud services, and shadow IT environments. Include both production and non-production assets, expired and orphaned certificates, and unmanaged CAs. A comprehensive inventory allows you to identify vulnerabilities, plan renewals, and prevent unexpected outages.

- Implement Continuous Monitoring & Alerts

Audits provide a snapshot in time, but PKI risks evolve daily. Continuous monitoring ensures that certificate expirations, revocations, or misconfigurations are detected in real time. Automated alerts can notify administrators when certificates are approaching their expiry date, CA trust chains are broken, or cryptographic standards are outdated. This reduces the risk of downtime or compromised communications.

- Automate Certificate Lifecycle Management

Manual certificate issuance, renewal, and revocation are prone to human error and operational delays. Modern PKI tools like CertSecure Manager allow organizations to automate the entire lifecycle of certificates, ensuring that keys are rotated on schedule, revoked immediately if compromised, and deployed without service interruption. Automation also enforces policy compliance consistently across all environments. Additionally, integrating certificate management into CI/CD pipelines allows certificates to be automatically renewed and deployed during application updates or infrastructure changes, reducing downtime and eliminating manual intervention in dynamic environments.

-

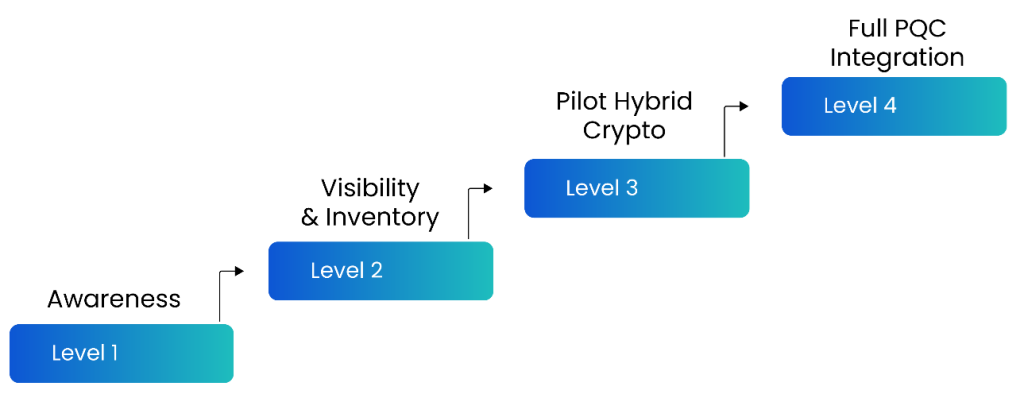

Plan for Cryptographic Agility

Cryptography standards evolve with time, and PKI must be prepared to

adapt. Plan your infrastructure to support algorithm transitions from

RSA/ECC to post-quantum cryptography (PQC), without breaking existing

services. This involves designing flexible CA hierarchies, maintaining

compatible certificate templates, and testing interoperability before

deployment. Cryptographic agility ensures your PKI remains secure against

future threats.

- Adopt Short-Lived Certificates

Short-lived certificates, typically ranging from days to months, minimize the impact of compromised keys and reduce reliance on revocation mechanisms. The industry is increasingly shifting toward 90-day and even 47-day TLS certificates to improve security posture. Moving from traditional 398-day certificates to these shorter lifetimes results in roughly four to eight times as many renewals per year, making automation essential. Automated management of these certificates ensures continuous validity while reducing administrative overhead and human error, increasing both security and availability.

- Conduct Regular PKI Health Checks

Beyond compliance audits, perform internal PKI assessments to verify that all certificates, keys, and CA configurations align with modern cryptographic standards. Health checks should include verification of trust chains, revocation mechanisms, algorithm strengths, and HSM configurations. Regular assessments help identify hidden risks before they impact operations.
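As one small piece of such a health check, the sketch below walks a certificate chain and reports structural problems. It is deliberately simplified: it compares subject and issuer names and expiry dates only, and the `CertRecord` type and `check_chain` function are hypothetical. Full path validation also verifies each signature, name and policy constraints, and revocation status, so treat this as a first-pass sanity check rather than a replacement for a real validator.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import List, Set


@dataclass
class CertRecord:
    subject: str
    issuer: str
    not_after: datetime


def check_chain(chain: List[CertRecord], trusted_roots: Set[str], now: datetime) -> List[str]:
    """Check a chain ordered leaf first, root last. Returns a list of problems."""
    if not chain:
        return ["empty chain"]
    problems = []
    # Any expired link breaks the whole chain.
    for cert in chain:
        if cert.not_after <= now:
            problems.append(f"{cert.subject}: expired")
    # Each certificate must name the next one in the list as its issuer.
    for child, parent in zip(chain, chain[1:]):
        if child.issuer != parent.subject:
            problems.append(f"{child.subject}: issuer does not match {parent.subject}")
    # The chain must end in a self-signed root that is explicitly trusted.
    root = chain[-1]
    if root.subject != root.issuer:
        problems.append("chain does not end in a self-signed root")
    elif root.subject not in trusted_roots:
        problems.append(f"{root.subject}: root is not in the trust store")
    return problems


now = datetime(2025, 1, 1, tzinfo=timezone.utc)
root = CertRecord("Example Root CA", "Example Root CA", datetime(2040, 1, 1, tzinfo=timezone.utc))
issuing = CertRecord("Example Issuing CA", "Example Root CA", datetime(2024, 6, 1, tzinfo=timezone.utc))
leaf = CertRecord("app.example.internal", "Example Issuing CA", datetime(2025, 3, 1, tzinfo=timezone.utc))

expired_ca = check_chain([leaf, issuing, root], {"Example Root CA"}, now)
missing_link = check_chain([leaf, root], {"Example Root CA"}, now)
print(expired_ca)
print(missing_link)
```

The first call flags an issuing CA that expired while its leaf certificates were still valid, which is the kind of failure a leaf-only expiry check would miss.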

Strengthening PKI requires more than meeting compliance standards. Continuous inventory, monitoring, automation, and cryptographic agility are essential to ensure security, availability, and long-term resilience.
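The renewal workload behind shorter certificate lifetimes is simple arithmetic, and a quick calculation shows where the four-to-eight-times figure comes from. The sketch below also computes a renewal point at two-thirds of the validity window. That fraction is an assumption used here for illustration, as are the function names, so pick whatever renewal point your CA and tooling recommend.

```python
from datetime import datetime, timezone


def relative_renewals(old_lifetime_days: int, new_lifetime_days: int) -> float:
    """How many times more often a certificate must be renewed
    when its lifetime shrinks from old to new."""
    return old_lifetime_days / new_lifetime_days


def renew_at(not_before: datetime, not_after: datetime) -> datetime:
    """Point in the validity window where automation should renew,
    assumed here to be two-thirds of the way through."""
    return not_before + (not_after - not_before) * 2 / 3


ratio_90 = relative_renewals(398, 90)
ratio_47 = relative_renewals(398, 47)
print(f"398 -> 90 days: {ratio_90:.1f}x as many renewals")
print(f"398 -> 47 days: {ratio_47:.1f}x as many renewals")

issued = datetime(2025, 1, 1, tzinfo=timezone.utc)
expires = datetime(2025, 4, 1, tzinfo=timezone.utc)  # a 90-day certificate
renewal_point = renew_at(issued, expires)
print(renewal_point.date())
```

The ratios come out at about 4.4 and 8.5. For an estate of a few thousand certificates, that is the difference between renewal being an occasional task and a daily one, which is why manual processes stop scaling.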

How can EC help?

Encryption Consulting has extensive experience delivering end-to-end PKI solutions for enterprise and government clients. We provide both professional services and our automation platform (CertSecure Manager) to ensure your PKI is secure, resilient, and future-ready.

PKI Services

- Project Planning

We assess your cryptographic environment, review PKI configurations, dependencies, and requirements, and consolidate findings into a structured, customer-approved project plan.

- CP/CPS Development

In the next phase, we develop a Certificate Policy (CP) and Certification Practice Statement (CPS) aligned with RFC 3647. These documents are customized to your organization’s regulatory, security, and operational requirements.

- PKI Design and Implementation

We design and deploy resilient PKI infrastructures with an offline Root CA, issuing CAs, NDES servers, and HSM integration, depending on the customer’s needs. Deliverables include a PKI design document, build guides, ceremony scripts, and system configurations. Once deployed, we conduct thorough testing, validation, fine-tuning, and knowledge transfer sessions to empower your team.

- Business Continuity and Disaster Recovery

Following the deployment, we develop and implement business continuity and disaster recovery strategies, conduct failover testing, and document operational workflows for the entire PKI and HSM infrastructure, supported by a comprehensive PKI operations guide.

- Ongoing Support and Maintenance (Optional)

After implementation, we offer a subscription-based yearly support package that provides comprehensive coverage for PKI, CLM, and HSM components. This includes incident response, troubleshooting, system optimization, certificate lifecycle management, CP/CPS updates, key archival, HSM firmware upgrades, audit logging, and patch management.

This approach ensures your PKI infrastructure is not only secure and compliant but also scalable, resilient, and fully aligned with your long-term operational and regulatory goals.

Our Certificate Lifecycle Management Solution: CertSecure Manager

CertSecure Manager by Encryption Consulting is a certificate lifecycle management solution that simplifies and automates the entire lifecycle, allowing you to focus on security rather than renewals.

- Automation for Short-Lived Certificates: With ACME and 90-day/47-day TLS certificates becoming the standard, manual renewal is no longer a practical option. CertSecure Manager automates enrollment, renewal, and deployment to ensure certificates never expire unnoticed.

- Seamless DevOps & Cloud Integration: Certificates can be provisioned directly into web servers and cloud instances. CertSecure Manager also integrates with logging tools like Datadog and Splunk, ITSM tools like ServiceNow, and DevOps tools such as Terraform and Ansible.

- Multi-CA Support: Many organizations utilize multiple CAs (internal Microsoft CA, public CAs such as DigiCert and GlobalSign, etc.). CertSecure Manager integrates across these sources, providing a single pane of glass for issuance and lifecycle management.

- Unified Issuance & Renewal Policies: CertSecure Manager enforces your organization’s key sizes, algorithms, and renewal rules consistently across all certificates. It goes beyond automating renewals across multiple CAs by ensuring that every certificate meets your security standards every time.

- Proactive Monitoring & Renewal Testing: Continuous monitoring, combined with simulated renewal/expiry testing, ensures you identify risks before certificates impact production systems.

- Centralized Visibility & Compliance: One consolidated dashboard displays all certificates, key lengths, strong and weak algorithms, and their expiry dates. Audit trails and policy enforcement simplify compliance with PCI DSS, HIPAA, and other frameworks.

If you’re still wondering where and how to get started with securing your PKI, Encryption Consulting is here to support you with its PKI Support Services. You can count on us as your trusted partner, and we will guide you through every step with clarity, confidence, and real-world expertise.

Conclusion

A compliant PKI is just the starting point. Many organizations assume that passing audits and ticking checkboxes is enough, but without active management, monitoring, and regular updates, hidden risks quietly accumulate. Expired certificates, weak cryptography, orphaned CAs, and misconfigured revocation infrastructure can disrupt operations, expose sensitive data, and create opportunities for attackers. True PKI resilience requires visibility, proactive lifecycle management, and readiness for evolving cryptographic standards.

Don’t wait for an expired certificate to teach you how critical your PKI really is. Treat PKI like the core infrastructure it is. Monitor it, own it, and strengthen it.