IEC 62443 is a series of international standards, developed by the International Electrotechnical Commission (IEC) together with the ISA99 committee of the International Society of Automation (ISA). It provides a structured framework for securing Industrial Automation and Control Systems (IACS) and helps organizations address the cybersecurity risks those systems face. IACS are crucial in industries such as energy and manufacturing, yet many of them were not designed with cybersecurity in mind. Their security has largely depended on physical isolation, which is no longer enough against continuously evolving cybersecurity threats.

The Need for Standards

At Iran’s Natanz nuclear facility, the industrial control systems driving the centrifuges were targeted by the Stuxnet worm. It is believed to have entered the isolated network on infected USB drives, possibly introduced by an insider. In another case, in 2017, attackers targeted the Safety Instrumented System (SIS) of a Saudi Arabian petrochemical plant. Although the SIS was meant to be isolated, the attackers reached it through an engineering workstation connected to the SIS network. Organizations around the world have struggled to secure these systems, and around 40% of IACS worldwide were reported to face malicious activity in the second half of 2022. That is why the need for standards emerged.

International Electrotechnical Commission (IEC)

Founded in 1906, the International Electrotechnical Commission (IEC) was created to address the need for standardized electrical measurements and technology. Over the years, the IEC widened its scope to cover all products and services involving technologies such as electronics, telecommunications, energy generation, and advanced digital systems. Today it is a leading global authority on electrical and electronic standards. The organization has published over 10,000 standard documents covering specifications, best practices, and testing protocols. These documents support compatibility, reliability, and sustainability while also promoting innovation.

IEC 62443 reflects the IEC’s mission by establishing a normative cybersecurity framework for industrial automation and critical infrastructure. The IEC aims to achieve safety, reliability, and global compatibility in electrical and electronic systems. IEC 62443 extends that goal to cyber threats against industrial automation and control systems (IACS). As a horizontal standard, it also applies beyond the industrial sector to healthcare, automotive, and other critical industries, where it promotes secure interoperability and global cybersecurity best practices. Because it offers consistent security provisions across sectors, IEC 62443 strengthens resilience, risk management, and compliance as part of the IEC’s commitment to global safety and security.

The Structure of IEC 62443

The IEC 62443 series is organized into four groups, identified by the suffixes -1, -2, -3, and -4. Each group addresses a different aspect of cybersecurity for industrial automation and control systems.

IEC 62443-1 (General)

IEC 62443-1 lays the foundation for IACS security. It defines the key terms, concepts, and models that give all stakeholders a common understanding (62443-1-1). It provides a master glossary of the terms and abbreviations used throughout the series, so that all documents stay consistent and clear (62443-1-2). It also introduces system security conformance metrics for judging the security posture of an IACS (62443-1-3). Finally, it outlines the IACS security lifecycle along with practical use cases that guide stakeholders in implementing security measures throughout the system’s life (62443-1-4).

IEC 62443-2 (Policies and Procedures)

The IEC 62443-2 series covers the organizational policies and procedures that underpin IACS security. It specifies requirements for establishing and maintaining a security program for industrial systems (62443-2-1). It also provides a methodology for rating the protection of an operating IACS and the maturity of its security program (62443-2-2). The series further covers patch management processes that reduce vulnerabilities within the IACS environment (62443-2-3). Last, it sets security program requirements for IACS service providers, whose privileged access to industrial systems makes them potential attack vectors (62443-2-4).

IEC 62443-3 (System)

The IEC 62443-3 series focuses on security at the system level. It surveys security technologies applicable to industrial systems, such as authentication, firewalls, encryption, malware detection, and monitoring tools (62443-3-1). It offers guidance on conducting security risk assessments and partitioning the system into zones and conduits during system design (62443-3-2). It also specifies the system security requirements and defines the security levels (SL 1-4) that help organizations achieve their desired level of protection (62443-3-3).

IEC 62443-4 (Component)

The IEC 62443-4 series focuses on the security of individual IACS components. It requires a secure product development lifecycle, so that components are built using strong security practices (62443-4-1). It also specifies technical security requirements for individual components such as controllers, sensors, and HMIs, so that they can operate securely within the system (62443-4-2). IEC 62443-4-2 is discussed in more detail later in this post.

Security Levels of IEC 62443

IEC 62443 defines four security levels that protect industrial systems against cyber threats of increasing capability. Each level is defined with a particular class of threat in mind, ranging from accidental incidents to sophisticated, well-resourced operations.

Organizations determine the required security level (SL1-SL4) by performing risk assessments, threat modeling, and asset criticality analysis, and by considering any industry compliance requirements. In practice, a target security level (SL-T) is assigned to each zone and conduit rather than to the organization as a whole. Frameworks such as NIST RMF, ISO 31000, and IEC 62443-3-2 help assess how likely threats are to exploit vulnerabilities and what the resulting operational impact would be. High-risk industries such as energy and healthcare often need SL3 or SL4 for their most critical zones, whereas lower-impact environments may settle for SL1 or SL2.
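The sketch below is a rough illustration of how a risk assessment can feed into SL selection. It scores each zone on a likelihood × consequence matrix and maps the score to a target level. The 1-5 scales, the score thresholds, the `regulatory_floor` field, and the zone names are all hypothetical. IEC 62443-3-2 leaves the actual risk criteria to the asset owner.

```python
from dataclasses import dataclass


@dataclass
class ZoneRisk:
    name: str
    likelihood: int            # 1 (rare) .. 5 (almost certain); hypothetical scale
    consequence: int           # 1 (negligible) .. 5 (catastrophic); hypothetical scale
    regulatory_floor: int = 1  # minimum SL imposed by sector rules, if any (hypothetical)


def target_security_level(zone: ZoneRisk) -> int:
    """Map a likelihood x consequence score to SL-T (1-4) using illustrative thresholds."""
    score = zone.likelihood * zone.consequence  # ranges from 1 to 25
    if score >= 20:
        sl = 4
    elif score >= 12:
        sl = 3
    elif score >= 6:
        sl = 2
    else:
        sl = 1
    # Compliance obligations can raise the risk-based level but never lower it.
    return max(sl, zone.regulatory_floor)


zones = [
    ZoneRisk("safety-instrumented-system", likelihood=4, consequence=5),
    ZoneRisk("packaging-line", likelihood=1, consequence=3),
    ZoneRisk("plant-historian", likelihood=2, consequence=2, regulatory_floor=2),
]
for zone in zones:
    print(f"{zone.name}: SL-T = {target_security_level(zone)}")
```

Here the safety system scores 20 and lands at SL-T 4. The packaging line scores 3 and stays at SL-T 1. The historian also scores low, but a hypothetical regulatory floor lifts it to SL-T 2.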

SL1 Guarding against accidental violations

SL1 protects only against casual or coincidental violations. The emphasis is on countering human errors such as misconfiguration and the unintended deletion of data. Measures include basic user authentication, so that only authorized individuals can access systems, and access controls that keep unauthorized users out.

SL2 Protection from deliberate attacks

SL2 addresses intentional attacks that use simple means, low resources, and generic skills. Typical examples are commodity malware and the exploitation of known, unpatched vulnerabilities. Typical countermeasures include antivirus and antimalware software, timely patching, and stronger user authentication, such as unique identification of every user and enforced password strength.

SL3 Safeguard against sophisticated attacks

SL3 addresses attackers who are more organized and skilled, with IACS-specific knowledge and moderate resources. They can exploit complex vulnerabilities and may use sophisticated tools and methods to breach systems. This level calls for considerably stricter security practices. Examples include multi-factor authentication for access from untrusted networks, stronger physical access controls, and strict user account management. Monitoring is continuous, and multiple layers of security are applied to provide comprehensive protection.

SL4 Safeguard against highly resourced attacks using advanced tools

SL4 is the highest security level. It targets the most advanced attacks, carried out by highly motivated attackers with extensive resources and IACS-specific skills, in high-risk scenarios where a breach could cause devastating losses. Organizations targeting SL4 typically combine several measures:
- multi-factor authentication on all networks and strict access controls
- strong network segmentation and hardened encryption
- real-time anomaly detection, advanced threat intelligence and monitoring
- automated incident response, often within a zero-trust architecture
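The levels above are described as single numbers. IEC 62443-3-3, however, expresses a security level as a vector across its seven foundational requirements:
- identification and authentication control (IAC)
- use control (UC)
- system integrity (SI)
- data confidentiality (DC)
- restricted data flow (RDF)
- timely response to events (TRE)
- resource availability (RA)

It also distinguishes three levels: a zone's target level (SL-T), the capability level a component can deliver (SL-C), and the level actually achieved (SL-A). The sketch below compares SL-T with SL-C for each requirement to find gaps that would need compensating countermeasures. The example vectors are invented for illustration.

```python
from typing import Dict

# The seven foundational requirements of IEC 62443-3-3, in order.
FOUNDATIONAL_REQUIREMENTS = ("IAC", "UC", "SI", "DC", "RDF", "TRE", "RA")


def sl_gaps(target: Dict[str, int], capability: Dict[str, int]) -> Dict[str, int]:
    """Return each FR where the capability falls short of the target, with the shortfall."""
    gaps: Dict[str, int] = {}
    for fr in FOUNDATIONAL_REQUIREMENTS:
        shortfall = target.get(fr, 0) - capability.get(fr, 0)
        if shortfall > 0:
            gaps[fr] = shortfall
    return gaps


# Hypothetical SL-T for a control zone and SL-C for a controller placed in it.
zone_target = {"IAC": 3, "UC": 3, "SI": 3, "DC": 2, "RDF": 3, "TRE": 2, "RA": 2}
controller_capability = {"IAC": 2, "UC": 3, "SI": 3, "DC": 1, "RDF": 3, "TRE": 2, "RA": 3}
print(sl_gaps(zone_target, controller_capability))  # {'IAC': 1, 'DC': 1}
```

A surplus in one requirement (RA here) does not offset a shortfall in another, so the comparison is made requirement by requirement rather than on a single overall number.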

The Concept of Zones and Conduits

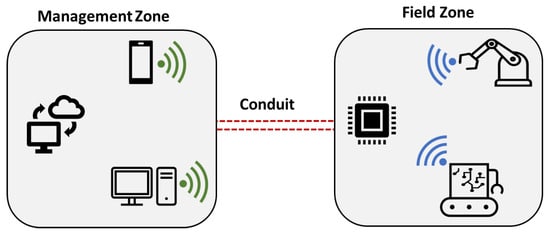

Zones and conduits are fundamental to organizing and governing industrial network security, so understanding them is essential to understanding IEC 62443. The idea is to divide the system into logical segments and to control the data traffic between them, a basic approach to managing risk in complex systems. In IACS, a zone is an area with its own security requirements, based on the activities and risks involved. Zones are interconnected through conduits, which are the communication channels between them.

Zones

A zone is a logical or physical grouping of assets or systems that share common security or operational characteristics. The objective is to separate system components whose requirements differ by function or sensitivity. In a factory, for example, the manufacturing field equipment might sit in one zone, while the IT systems responsible for communication and management sit in another.
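The Python sketch below shows one way to reason about zone boundaries. It assumes each asset has already been assigned the security level it would need on its own (a hypothetical `required_sl` input). It then applies a common simplification rather than a formula from the standard: a zone must be protected to the highest level required by any asset in it. Assets that inherit a stricter level than they need are flagged as candidates for a separate zone. The asset names are made up.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Asset:
    name: str
    zone: str
    required_sl: int  # level the asset would need on its own (1-4); hypothetical input


def zone_targets(assets: List[Asset]) -> Dict[str, int]:
    """Simplified rule: a zone's SL-T is the highest level any of its assets needs."""
    targets: Dict[str, int] = {}
    for asset in assets:
        targets[asset.zone] = max(targets.get(asset.zone, 0), asset.required_sl)
    return targets


def over_protected_assets(assets: List[Asset]) -> Dict[str, List[str]]:
    """Assets held to a stricter level than they need: candidates for their own zone."""
    targets = zone_targets(assets)
    flagged: Dict[str, List[str]] = defaultdict(list)
    for asset in assets:
        if asset.required_sl < targets[asset.zone]:
            flagged[asset.zone].append(asset.name)
    return dict(flagged)


inventory = [
    Asset("PLC-1", "field", 3),
    Asset("HMI-1", "field", 2),
    Asset("ERP-server", "management", 2),
    Asset("mail-server", "management", 1),
]
print(zone_targets(inventory))           # {'field': 3, 'management': 2}
print(over_protected_assets(inventory))  # {'field': ['HMI-1'], 'management': ['mail-server']}
```

Whether to split a zone is a judgment call. A separate zone adds a conduit to manage, while leaving the asset in place means protecting it to the stricter level.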

Conduits

Conduits are the communication pathways that carry data between zones and provide a way to control and secure those interactions. They ensure that only authorized and secure data exchanges take place. Conduits act as checkpoints between zones, enforcing security controls on all communication that crosses the boundary. These controls can include access control lists, firewalls, and intrusion detection systems. In the same factory, for example, a conduit would control the flow of data between the field zone and the management zone, with a firewall restricting the types of traffic allowed to pass.
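A conduit's filtering policy can be modeled as a default-deny allowlist of flows, as in the sketch below. The `FlowRule` and `Conduit` classes are hypothetical. Port 4840 is the registered OPC UA port and 502 is the Modbus/TCP port, but the rule set itself is only an example.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class FlowRule:
    src_zone: str
    dst_zone: str
    protocol: str
    port: int


class Conduit:
    """Hypothetical conduit model: an explicit allowlist of flows between zones."""

    def __init__(self, name: str, allowed: Iterable[FlowRule]):
        self.name = name
        self._allowed = frozenset(allowed)

    def permits(self, src_zone: str, dst_zone: str, protocol: str, port: int) -> bool:
        # Default deny: anything not explicitly listed is blocked.
        return FlowRule(src_zone, dst_zone, protocol, port) in self._allowed


field_to_mgmt = Conduit("field-management", [
    FlowRule("field", "management", "tcp", 4840),  # OPC UA data up to management
])
print(field_to_mgmt.permits("field", "management", "tcp", 4840))  # True
print(field_to_mgmt.permits("management", "field", "tcp", 502))   # False: Modbus/TCP writes blocked
```

The rule is directional: the field zone may publish data to the management zone, but the management zone cannot open a Modbus/TCP session to the field devices. A real firewall would also track connection state so that replies to permitted flows can return. The sketch only models which side may initiate.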

The zones and conduits model in IEC 62443 offers several advantages for the security and manageability of IACS:
- Risk isolation: grouping assets into separate zones limits the impact of a breach.
- Stronger security: conduits strictly control communication between zones, preventing unauthorized access and tampering with the information exchanged.
- Simpler administration: each zone is well defined, with its own clearly stated and consistently applied security policy.
- Scalability: new zones and conduits can be added as the system grows, so protection keeps pace as the environment expands.
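The risk-isolation benefit can be made concrete by treating zones as graph nodes and conduits as directed edges that let one zone initiate connections into another. A breadth-first search from a compromised zone then gives an upper bound on how far an attacker could move laterally through permitted conduits. The zone names and conduit list below are hypothetical. The model ignores compromises of the conduit devices themselves.

```python
from collections import deque
from typing import Dict, Iterable, Set, Tuple


def reachable_zones(conduits: Iterable[Tuple[str, str]], compromised: str) -> Set[str]:
    """Return every zone reachable from `compromised` by following permitted conduits."""
    graph: Dict[str, Set[str]] = {}
    for src, dst in conduits:
        graph.setdefault(src, set()).add(dst)

    seen = {compromised}
    queue = deque([compromised])
    while queue:  # breadth-first search over the conduit graph
        zone = queue.popleft()
        for neighbor in graph.get(zone, set()):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    seen.discard(compromised)
    return seen


# (initiating zone, receiving zone): the enterprise network and the supervisory
# zone only meet in a DMZ; neither can open connections into the other directly.
conduits = [
    ("enterprise", "dmz"),
    ("supervisory", "dmz"),
    ("supervisory", "field"),
]
print(sorted(reachable_zones(conduits, "enterprise")))   # ['dmz']
print(sorted(reachable_zones(conduits, "supervisory")))  # ['dmz', 'field']
```

Adding a single careless conduit such as `("dmz", "supervisory")` would let a compromise of the enterprise network reach the field zone. This is the kind of change the model makes visible.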

Achieving compliance with IEC 62443

An organization that intends to comply with IEC 62443 must first carry out a full risk assessment. The assessment identifies the important risks, vulnerabilities, and critical assets across the entire IACS. Based on it, the organization devises a cybersecurity management system (CSMS) that defines the policies, processes, and responsibilities for securing the IACS, covering both technical and organizational aspects. Network segmentation is achieved by creating secure zones connected through controlled conduits. Strong access control mechanisms, including multi-factor authentication and role-based access, must be enforced to prevent unauthorized access.
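The access-control requirement can be sketched as a role-based check with a step-up for sensitive actions. In the Python example below, the roles and the permission names are hypothetical policy choices. So is the rule that setpoint changes and logic downloads always need a verified second factor. None of these values are taken from the standard.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet

# Hypothetical role-to-permission mapping for an IACS operations team.
ROLE_PERMISSIONS: Dict[str, FrozenSet[str]] = {
    "operator": frozenset({"view_hmi", "acknowledge_alarm"}),
    "engineer": frozenset({"view_hmi", "acknowledge_alarm", "modify_setpoint", "download_logic"}),
    "auditor": frozenset({"view_audit_log"}),
}

# Hypothetical policy: these actions always require multi-factor authentication.
MFA_REQUIRED = frozenset({"modify_setpoint", "download_logic"})


@dataclass(frozen=True)
class Session:
    user: str
    roles: FrozenSet[str]
    mfa_verified: bool = False


def is_authorized(session: Session, action: str) -> bool:
    """Allow an action only if some role grants it and any MFA requirement is met."""
    granted = any(action in ROLE_PERMISSIONS.get(role, frozenset()) for role in session.roles)
    if not granted:
        return False  # deny by default: unknown roles and unlisted actions fail
    return action not in MFA_REQUIRED or session.mfa_verified


operator = Session("alice", frozenset({"operator"}))
engineer = Session("bob", frozenset({"engineer"}))
engineer_mfa = Session("bob", frozenset({"engineer"}), mfa_verified=True)
print(is_authorized(operator, "download_logic"))      # False: role lacks permission
print(is_authorized(engineer, "download_logic"))      # False: second factor missing
print(is_authorized(engineer_mfa, "download_logic"))  # True
```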

Continuous monitoring should be set up to detect and respond to threats as they arise. The organization should also establish an incident response plan that outlines how to contain and recover from cybersecurity incidents and how to communicate with all stakeholders. Employee training should prepare staff to recognize and respond to the security threats the organization faces. In addition, all third-party vendors and suppliers must satisfy the organization’s security requirements, which reduces the IACS’s exposure to outside risk. Finally, the organization should undergo regular compliance audits and seek third-party certification to demonstrate its adherence to IEC 62443.
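Continuous monitoring ultimately comes down to turning raw events into timely alerts. The Python class below is a minimal sketch of one such rule: it flags a source that produces too many failed logins within a sliding time window. The threshold, window length, and source addresses are hypothetical. A production deployment would normally express rules like this in a SIEM or an OT-aware intrusion detection system.

```python
from collections import defaultdict, deque
from typing import Deque, Dict


class FailedLoginMonitor:
    """Alert when one source reaches `threshold` failed logins within `window_seconds`."""

    def __init__(self, threshold: int = 5, window_seconds: float = 60.0):
        self.threshold = threshold
        self.window = window_seconds
        self._failures: Dict[str, Deque[float]] = defaultdict(deque)

    def record_failure(self, source: str, timestamp: float) -> bool:
        """Record one failure (timestamps assumed non-decreasing); return True to alert."""
        events = self._failures[source]
        events.append(timestamp)
        # Drop failures that have fallen out of the sliding window.
        while events and timestamp - events[0] > self.window:
            events.popleft()
        return len(events) >= self.threshold


monitor = FailedLoginMonitor(threshold=3, window_seconds=10)
for t in (0, 4, 8):
    alert = monitor.record_failure("10.0.0.5", t)
print(alert)                                   # True: three failures within ten seconds
print(monitor.record_failure("10.0.0.5", 30))  # False: older failures have expired
```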

How does it Help?

IEC 62443 provides a structured framework for mitigating risks across the IACS lifecycle, addressing security vulnerabilities from the very start. The standard emphasizes a layered security approach. It protects critical and sensitive assets and, through network segmentation, limits the potential impact of cyber threats. It encourages the proactive use of technologies such as firewalls and SIEM solutions to detect, identify, and mitigate threats within those layers. Regular reviews, continuous monitoring, and compliance audits help the organization keep up with evolving threats while maintaining a strong defense.

In these ways, the standard helps organizations improve security, operational resilience, and compliance. Their systems become more robust against both accidental incidents and deliberate attacks, with less downtime and greater operational efficiency. This commitment also builds trust among stakeholders. It lets organizations draw on internationally recognized best practices and meet global cybersecurity standards to safeguard critical infrastructure.

IEC 62443-4-2

IEC 62443-4-2 is the part of the IEC 62443 series that specifies technical security requirements for individual IACS components. These include embedded devices, network devices, software applications, and host devices. Its requirements are organized under the same seven foundational requirements as IEC 62443-3-3, ranging from identification and authentication control to resource availability. It addresses the security capabilities that components need throughout their lifecycle, from design and development to operation and decommissioning. These detailed requirements protect against a variety of cyber threats, preserving the integrity, confidentiality, and availability of IACS components over both the short and long term. The standard aligns with the broader IEC 62443 framework, supporting the secure deployment of industrial control systems by defining the component-level security measures needed to safeguard critical infrastructure.

Individual components such as controllers or sensors can contain vulnerabilities that expose the entire IACS, which makes component security crucial to system-level security. Secure components provide capabilities such as access control, encryption, patch management support, and secure communication. Dependencies matter because a single weak component can become the entry point for attacks on interconnected systems. IEC 62443-4 therefore ensures security by design at the component level. IEC 62443-3, meanwhile, takes a view of the entire system so that no single failure compromises its overall security.

What does IEC 62443-4-2 require?

IEC 62443-4-2 builds on the secure development lifecycle (SDL) defined in its companion standard, IEC 62443-4-1. The SDL integrates security from the beginning of product development, with security testing and validation at every stage to safeguard product integrity. Components must also support secure updates so that vulnerabilities can be patched in a timely manner. IEC 62443-4-2 stresses strong access control and authentication mechanisms, ensuring that only authorized users and devices can access IACS components. This approach is consistent with zero-trust security.

The standard also addresses physical security, such as tamper resistance and detection, to counter unauthorized physical access even on air-gapped systems. Data protection is a key focus. It combines encryption requirements for sensitive data with integrity controls that detect malware or unauthorized changes. Components must remain resilient to cyberattacks, maintaining secure operation under threat. They must also provide incident detection and response mechanisms, such as audit logging, so that security breaches can be addressed swiftly. Strong configuration management ensures that every change is intentional and well documented. Comprehensive documentation and training equip personnel to manage and maintain secure operations.
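The integrity and configuration-management requirements can be illustrated with a simple baseline comparison. First, record a SHA-256 fingerprint of every approved configuration or firmware file. Then compare later snapshots against that baseline. The file names and contents below are hypothetical. In practice, the baseline itself would also need protection (for example, by signing it) so that an attacker cannot simply rewrite it.

```python
import hashlib
from typing import Dict, List


def fingerprint(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def build_baseline(files: Dict[str, bytes]) -> Dict[str, str]:
    """Map each approved file name to the SHA-256 digest of its contents."""
    return {name: fingerprint(blob) for name, blob in files.items()}


def detect_drift(baseline: Dict[str, str], current: Dict[str, bytes]) -> Dict[str, List[str]]:
    """Report files that changed, appeared, or disappeared since the baseline."""
    changed = sorted(
        name for name in baseline.keys() & current.keys()
        if fingerprint(current[name]) != baseline[name]
    )
    added = sorted(current.keys() - baseline.keys())
    removed = sorted(baseline.keys() - current.keys())
    return {"changed": changed, "added": added, "removed": removed}


approved = {
    "plc1/logic.bin": b"\x01\x02\x03",
    "plc1/network.cfg": b"ip=10.0.0.10\n",
}
baseline = build_baseline(approved)

snapshot = {
    "plc1/logic.bin": b"\x01\x02\x03",
    "plc1/network.cfg": b"ip=10.0.0.99\n",  # unapproved edit
    "plc1/startup.sh": b"# unknown script\n",  # unexpected new file
}
print(detect_drift(baseline, snapshot))
# {'changed': ['plc1/network.cfg'], 'added': ['plc1/startup.sh'], 'removed': []}
```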

Compliance with IEC 62443-4-2 is difficult to achieve for three reasons: its technical complexity, its high demand for resources and expertise, and the need to monitor and maintain security measures throughout the lifecycle of IACS components. Implementation requires a deep understanding of both cybersecurity principles and the operational requirements of industrial control systems. It also means addressing challenges such as legacy systems, limited resources in smaller organizations, and constantly evolving threats that demand ongoing updates to security practices. Achieving compliance therefore requires significant investment in skilled personnel, technology upgrades, and robust risk management strategies.

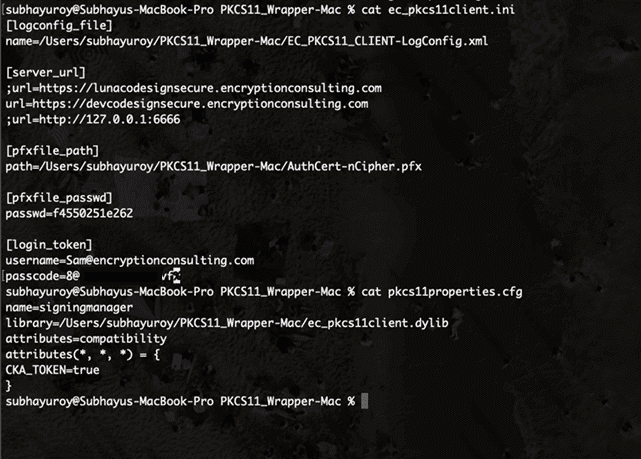

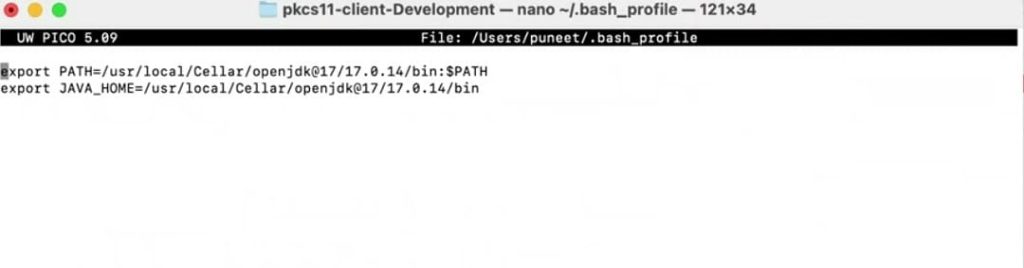

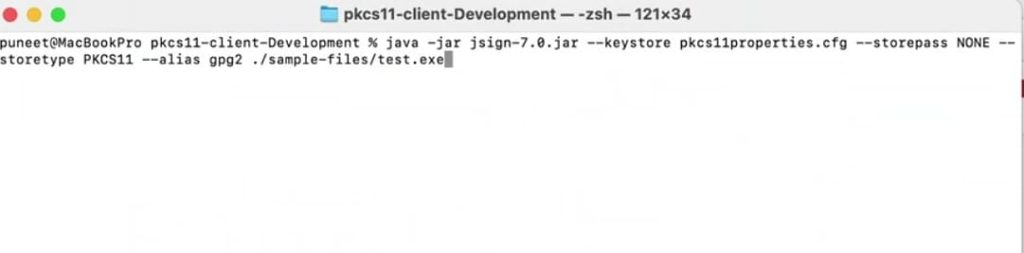

PKI and IEC 62443

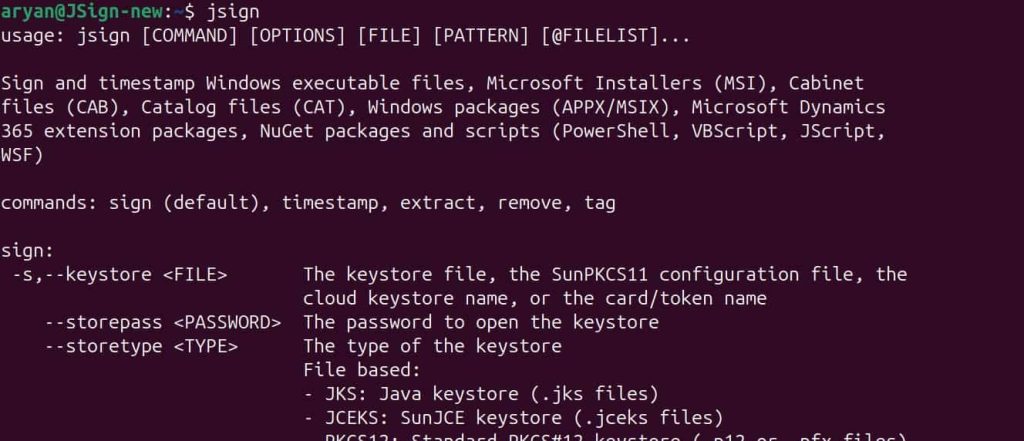

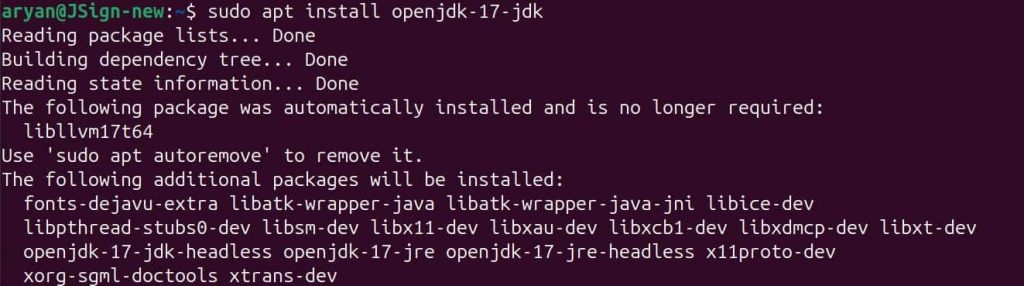

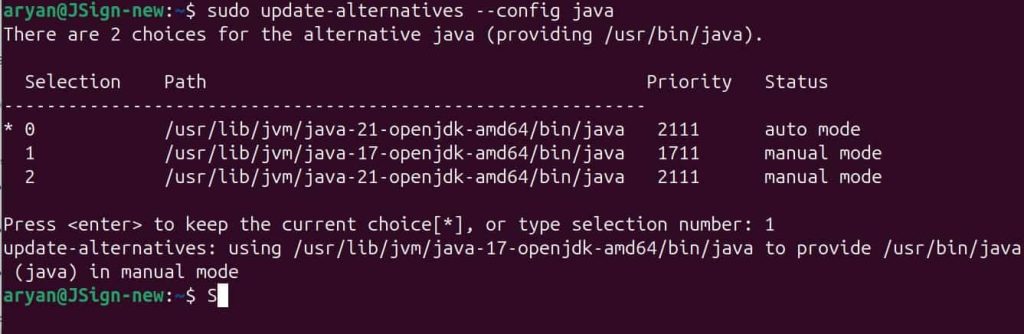

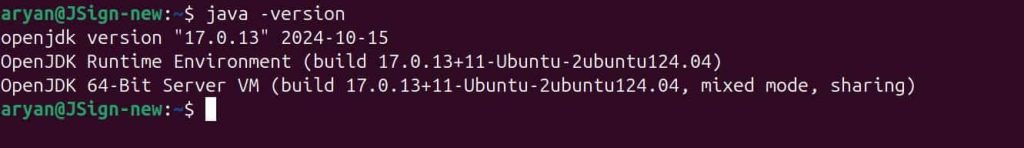

PKI and IEC 62443 are distinct but overlapping areas of cybersecurity. Public key infrastructure is built on asymmetric key pairs, certificates, and digital signatures. It offers some of the most practical ways to address the cybersecurity challenges that IACS face, and it should not be overlooked when securing them. Several attributes that IACS need in order to comply with IEC 62443 are core strengths of PKI. These include:

- Authentication of users and devices

- Access control

- Software validation

- Secure communication
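The Python sketch below shows how certificates support device authentication, the first item in that list. A certificate authority signs a device's public key. A verifier checks that signature against its trusted CA key, then challenges the device to sign a fresh random nonce. The sketch is a toy built only on the standard library and is completely insecure: it uses textbook RSA without padding, with publicly known Mersenne primes as key material. The certificate format (a signed JSON body) and all function names are hypothetical. Real deployments use X.509 certificates and a vetted cryptographic library.

```python
# TOY ONLY: textbook RSA, no padding, publicly known primes. Never use for real security.
import hashlib
import json
import secrets
from typing import Callable, Dict, Tuple

Key = Tuple[int, int]


def toy_keypair(p: int, q: int, e: int = 65537) -> Tuple[Key, Key]:
    """Return ((n, e), (n, d)) for primes p, q; pow(e, -1, m) needs Python 3.8+."""
    n = p * q
    d = pow(e, -1, (p - 1) * (q - 1))
    return (n, e), (n, d)


def _digest(data: bytes) -> int:
    return int.from_bytes(hashlib.sha256(data).digest(), "big")


def sign(private_key: Key, data: bytes) -> int:
    n, d = private_key
    return pow(_digest(data), d, n)  # moduli here exceed 256 bits, so digest < n


def verify(public_key: Key, data: bytes, signature: int) -> bool:
    n, e = public_key
    return pow(signature, e, n) == _digest(data)


def _encode(body: Dict) -> bytes:
    return json.dumps(body, sort_keys=True).encode()  # canonical bytes to sign


def issue_certificate(ca_private: Key, subject: str, device_public: Key, not_after: int) -> Dict:
    body = {"subject": subject, "n": device_public[0], "e": device_public[1], "not_after": not_after}
    return {"body": body, "signature": sign(ca_private, _encode(body))}


def authenticate_device(ca_public: Key, cert: Dict, now: int,
                        respond: Callable[[bytes], int]) -> bool:
    if not verify(ca_public, _encode(cert["body"]), cert["signature"]):
        return False  # not issued by the trusted CA, or tampered with
    if now > cert["body"]["not_after"]:
        return False  # certificate expired
    nonce = secrets.token_bytes(16)  # fresh challenge defeats replayed answers
    device_public = (cert["body"]["n"], cert["body"]["e"])
    return verify(device_public, nonce, respond(nonce))


M127, M521, M607, M1279 = (2**k - 1 for k in (127, 521, 607, 1279))  # known primes
ca_public, ca_private = toy_keypair(M521, M607)
dev_public, dev_private = toy_keypair(M127, M1279)
cert = issue_certificate(ca_private, "plc-07", dev_public, not_after=2_000_000_000)


def genuine_device(nonce: bytes) -> int:
    return sign(dev_private, nonce)  # only the holder of dev_private can answer


print(authenticate_device(ca_public, cert, now=1_700_000_000, respond=genuine_device))  # True
```

Both checks are needed. The CA signature proves the public key really belongs to `plc-07`, and the nonce proves the device on the wire holds the matching private key.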

IEC 62443-4-2 places strong emphasis on authenticating devices and users and on protecting data from unauthorized access or modification, which is exactly where PKI excels. PKI also addresses software assurance in industrial environments. Update processes can use code signing to guarantee the authenticity and integrity of software and firmware packages, so that only trusted packages enter the system. In short, a good PKI solution strengthens operational security and helps industrial systems withstand modern cybersecurity threats.
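The code-signing workflow can be sketched the same way. The vendor signs a digest of the firmware image with its private key. The device refuses to install any image whose signature does not verify against the vendor's public key, which it already trusts. To keep the example self-contained, it uses textbook RSA over publicly known Mersenne primes, purely for illustration and with no security value. The function names, the firmware contents, and the in-memory `installed` list are hypothetical. Real update pipelines should use a vetted signature scheme such as RSA-PSS, ECDSA, or Ed25519 from an established library.

```python
# TOY ONLY: textbook RSA, no padding, publicly known primes. Not secure.
import hashlib
from typing import List, Tuple

Key = Tuple[int, int]


def toy_keypair(p: int, q: int, e: int = 65537) -> Tuple[Key, Key]:
    """Return ((n, e), (n, d)); pow(e, -1, m) needs Python 3.8+."""
    n = p * q
    return (n, e), (n, pow(e, -1, (p - 1) * (q - 1)))


def _digest(image: bytes) -> int:
    return int.from_bytes(hashlib.sha256(image).digest(), "big")


def sign_firmware(vendor_private: Key, image: bytes) -> int:
    n, d = vendor_private
    return pow(_digest(image), d, n)  # n exceeds 256 bits, so the digest fits


def install_if_trusted(vendor_public: Key, image: bytes, signature: int,
                       installed: List[str]) -> bool:
    """Install (here: record the image hash) only if the vendor signature verifies."""
    n, e = vendor_public
    if pow(signature, e, n) != _digest(image):
        return False  # tampered image or wrong signer: reject
    installed.append(hashlib.sha256(image).hexdigest())
    return True


vendor_public, vendor_private = toy_keypair(2**521 - 1, 2**607 - 1)  # Mersenne primes
firmware = b"PLC firmware v2.4.1"  # hypothetical image contents
signature = sign_firmware(vendor_private, firmware)

installed: List[str] = []
print(install_if_trusted(vendor_public, firmware, signature, installed))            # True
print(install_if_trusted(vendor_public, firmware + b"\x00", signature, installed))  # False
print(len(installed))  # 1
```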

IEC 62443 and the evolving industry

The fourth industrial revolution (Industry 4.0) has ushered in a new era in manufacturing through the adoption of technologies such as the Internet of Things and artificial intelligence. These have meaningfully improved operations through smarter processes, real-time data analytics, and predictive maintenance. This interconnectivity, however, brings new cybersecurity challenges: the more devices and systems are linked together, the wider the threat landscape becomes.

This is where IEC 62443 becomes important. Following IEC 62443 helps manufacturers build environments where cyberattacks are less likely to succeed. Its framework includes requirements for securing devices, networks, and systems within IACS. It also requires proper governance, risk management, and access control measures. It further emphasizes continuous monitoring, vulnerability management, and incident response, all of which are crucial for mitigating the cyber risks introduced by Industry 4.0 technologies. As these challenges develop, future revisions of the standard can be expected to address them as well.

Staying ahead of attackers is a never-ending challenge. Manufacturers that follow IEC 62443 and design every system with security in mind, however, keep their systems relevant and resilient. Looking ahead, the development of post-quantum cryptography presents new opportunities, and organizations will need to keep investing in both digital and physical security.

Conclusion

Safeguarding an Industrial Automation and Control System (IACS) can seem like a daunting task, but IEC 62443 provides clear, structured guidance that makes it manageable. In a modern, interconnected world where threats evolve constantly, the framework helps organizations adopt and sustain security practices throughout their systems’ entire life cycle.

The best thing about IEC 62443 is that it is not only about technology. It also stresses the value of collaboration, continuous learning, and flexibility. With such a framework, industries are well equipped to meet the cybersecurity challenges of today and the future.