Company Overview

Our recent client is a leading healthcare provider in Texas, renowned for delivering a broad range of medical services, from general practice to specialized treatment for cancer, cardiovascular diseases, trauma, and pediatrics.

They are the largest provider of health benefits in the state, working with more than 150,000 physicians and healthcare practitioners, along with more than 500 hospitals, to serve nearly 10 million members. They offer a variety of health insurance plans and services, including individual plans, employer group and family plans, Medicaid, Medicare Advantage plans, and dental and vision coverage.

For more than a century, this organization has been a pillar of health and wellness by addressing personal, occupational, and public health needs. They are dedicated to ensuring that everyone has access to quality healthcare through clinical care, wellness initiatives, outreach programs, and insurance and community support, available 24/7.

Challenges

For our client, Public Key Infrastructure (PKI) served as the backbone of their security framework, issuing digital certificates for online services, authenticating remote access, encrypting sensitive data, and protecting the integrity of internal communications. Their PKI was a hybrid of on-premises and cloud-based components designed to support the diverse needs of a growing infrastructure. However, as the organization grew and its digital services became more complex, weaknesses in the existing PKI began to surface.

The Root CA was kept online and joined to the Active Directory domain, which widened its attack surface by exposing it to network-based threats, privilege escalation, and domain compromise. This exposure put the entire PKI at risk: an attacker who gained control of a domain controller or a foothold on the network could manipulate or take control of the Root CA. This setup went against industry best practices and regulatory compliance requirements, which call for keeping the Root CA offline and fully isolated to minimize risk.

The healthcare firm was storing its Root CA and Issuing CA private keys in an encrypted file system on dedicated servers. However, this method was not as secure as using a dedicated Hardware Security Module (HSM), as it left the private keys vulnerable if the encryption keys were compromised or if an attacker gained unauthorized access to the server.

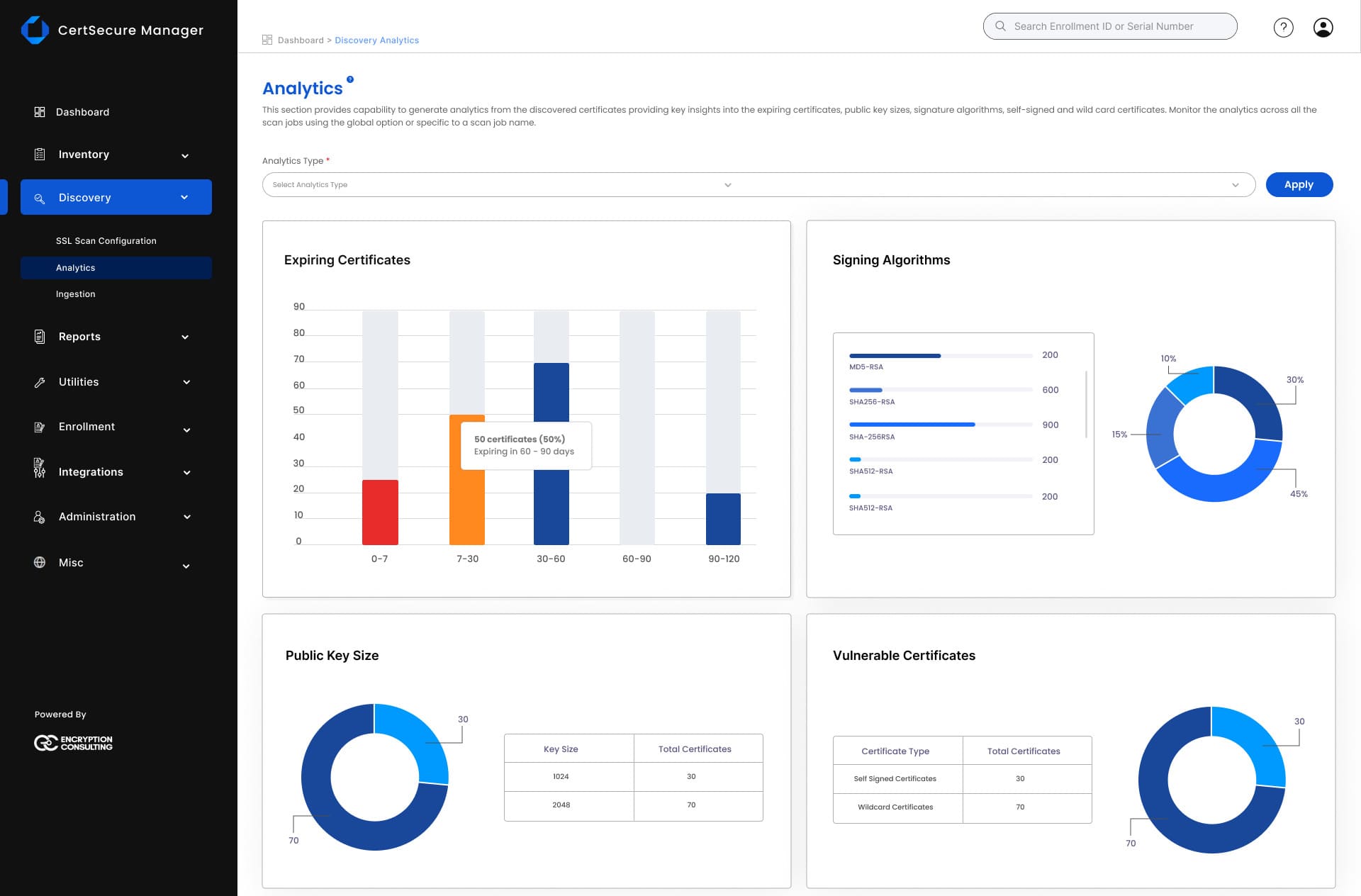

The organization had no defined guidelines for creating and using self-signed certificates, so users deployed them without any tracking mechanism, which made them difficult to monitor. As a result, some certificates expired unnoticed, causing certificate-related outages.

Moreover, the CAPolicy.inf file had not been placed on the host server (in the %windir% directory) before the Root CA was set up. CAPolicy.inf is a configuration file that AD CS reads during CA installation and renewal. It defines the extensions, constraints, and other settings, such as the CA certificate’s validity period and key length, that are applied to the CA’s own certificate and, through it, constrain every certificate that chains up to that CA.

Without this file, the default settings were applied, so the organization never configured the PathLength basic constraint, which limits how many tiers of subordinate CAs may appear below a CA in a valid certificate chain. This oversight left a gap in the PKI: a rogue subordinate CA created under one of the existing Issuing CAs would still chain to the trusted Root CA, and any unauthorized certificates it issued would be trusted, undermining the entire PKI.
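To make the PathLength gap concrete, here is a minimal Python sketch of how a path-length check treats a chain. It assumes a simplified model: the `Cert` class and `path_length_ok` function are hypothetical, no real certificates are parsed, and self-issued certificates are ignored. Following RFC 5280, a CA’s pathLenConstraint caps the number of intermediate CA certificates that may follow it in a path, and the end-entity certificate does not count. The sketch enforces the constraint on every CA in the chain, including the root.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Cert:
    """Hypothetical stand-in for an X.509 certificate (no real parsing)."""
    subject: str
    is_ca: bool
    path_len: Optional[int] = None  # pathLenConstraint; None means no limit


def path_length_ok(chain: List[Cert]) -> bool:
    """Check basic constraints for a chain ordered root -> ... -> end entity.

    Simplified RFC 5280 semantics: a CA's pathLenConstraint is the maximum
    number of intermediate CA certificates that may follow it in the path.
    The end-entity certificate at the end of the chain is not counted.
    """
    ca_certs = chain[:-1]
    for position, cert in enumerate(ca_certs):
        if not cert.is_ca:
            return False  # a non-CA certificate cannot sign the next one
        cas_below = len(ca_certs) - position - 1
        if cert.path_len is not None and cas_below > cert.path_len:
            return False
    return True


root = Cert("Root CA", is_ca=True, path_len=1)
issuing = Cert("Issuing CA", is_ca=True, path_len=0)
rogue = Cert("Rogue Sub CA", is_ca=True)
leaf = Cert("web01", is_ca=False)

print(path_length_ok([root, issuing, leaf]))         # True
print(path_length_ok([root, issuing, rogue, leaf]))  # False
```

With PathLength=1 on the root, a leaf issued through the Issuing CA validates, but a chain running through a rogue subordinate CA does not. Remove the constraints and the same rogue chain passes, which is exactly the exposure the client faced.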

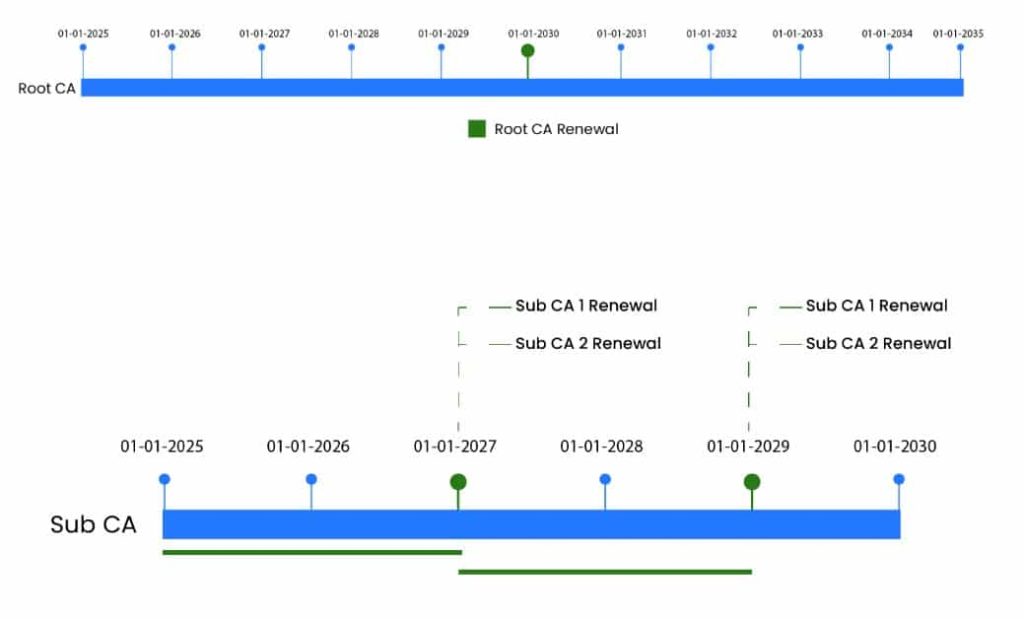

The organization’s Root CA and Issuing CA both had 25-year certificate validity periods, well beyond recommended best practices. The longer a private key stays in service, the greater the risk that it is compromised, whether through advances in computing power (including future quantum computing), cryptographic weaknesses such as those found in SHA-1, or inadequate key protection.

Furthermore, any vulnerabilities or misconfigurations in the CA infrastructure could persist for decades, leaving the entire system exposed. A 25-year validity period means the same private key can stay in use for the entire period, whereas a shorter validity period forces regular renewal, giving the organization the opportunity to rotate the key before it becomes obsolete or vulnerable. This is why best practices recommend shorter validity periods: more frequent key rotation and timely updates to cryptographic algorithms reduce the risk of long-term exposure.
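The validity-period reasoning can be turned into a simple policy check. The Python sketch below is a minimal illustration, not a description of the client’s tooling: `check_ca`, `add_years`, and the 20-year and 10-year maxima in `POLICY_MAX_YEARS` are hypothetical placeholders. It flags a CA certificate whose lifetime exceeds the policy maximum for its tier, and a subordinate CA that would outlive its issuer.

```python
from datetime import date
from typing import List, Optional

# Hypothetical policy maxima in years. The case study does not publish the
# client's final values, so these numbers are placeholders.
POLICY_MAX_YEARS = {"root": 20, "issuing": 10}


def add_years(start: date, years: int) -> date:
    """Shift a date by whole years; 29 February falls back to 28 February."""
    try:
        return start.replace(year=start.year + years)
    except ValueError:
        return start.replace(year=start.year + years, day=28)


def check_ca(tier: str, not_before: date, years: int,
             issuer_not_after: Optional[date] = None) -> List[str]:
    """List policy findings for one CA certificate's validity period."""
    findings = []
    not_after = add_years(not_before, years)
    limit = POLICY_MAX_YEARS[tier]
    if years > limit:
        findings.append(f"{tier}: {years}-year validity exceeds {limit}-year maximum")
    if issuer_not_after is not None and not_after > issuer_not_after:
        # A subordinate CA should expire before the CA that signed it.
        findings.append(f"{tier}: would outlive its issuer ({issuer_not_after})")
    return findings


print(check_ca("root", date(2024, 1, 1), 25))
# ['root: 25-year validity exceeds 20-year maximum']
print(check_ca("issuing", date(2024, 1, 1), 10, issuer_not_after=date(2044, 1, 1)))
# []
```

Run against the original configuration, the 25-year Root CA is flagged immediately, while a 10-year Issuing CA under a 20-year root passes both checks.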

A key challenge in our client’s PKI environment was the absence of a clearly defined key custodian matrix for the Root CA and Issuing CA. Their existing setup allowed a single authorized individual to make changes without proper oversight, increasing the risk of misconfigurations, security vulnerabilities, or compromised certificates. Without this matrix, there was no clear division of responsibility and accountability for the management, protection, and lifecycle of the private keys associated with these critical components.

Additionally, the absence of both a disaster recovery plan and a business continuity plan meant there was no strategy for restoring IT systems after a cyberattack, disruption, or data breach, nor a plan to ensure essential business operations could continue during and after such events. This lack of preparedness increased the risk of prolonged outages and operational delays, potentially affecting customer trust and the organization’s ability to respond effectively to such incidents.

Taken together, these weaknesses left the healthcare provider exposed to security breaches and at constant risk of sensitive data falling into attackers’ hands.

Solution

When the healthcare provider reached out to us, they were looking for expert guidance to build and implement a secure PKI that would protect their operations from cyberattacks, unauthorized access, and data breaches, and help close their compliance gaps.

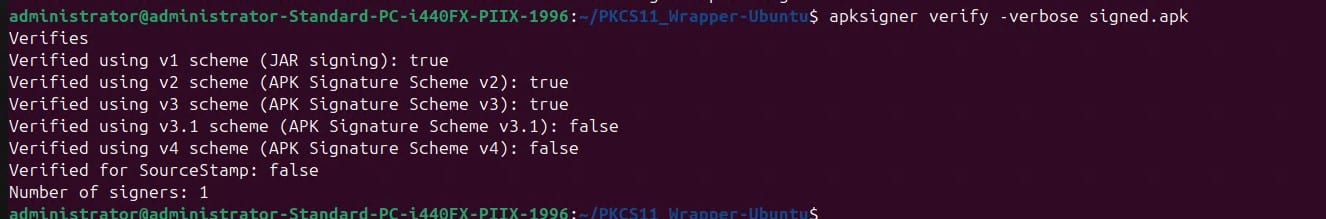

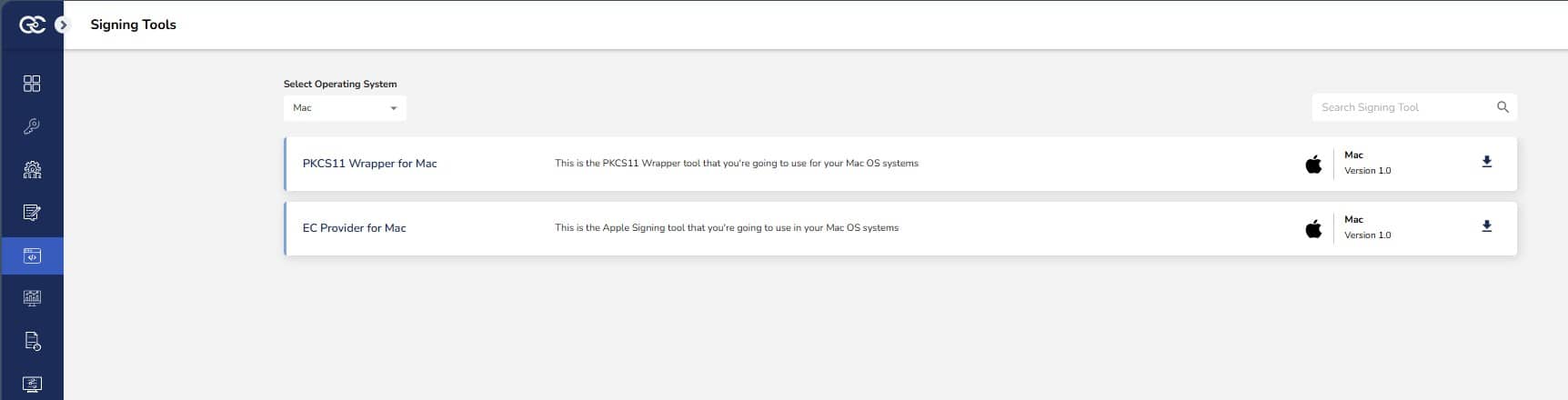

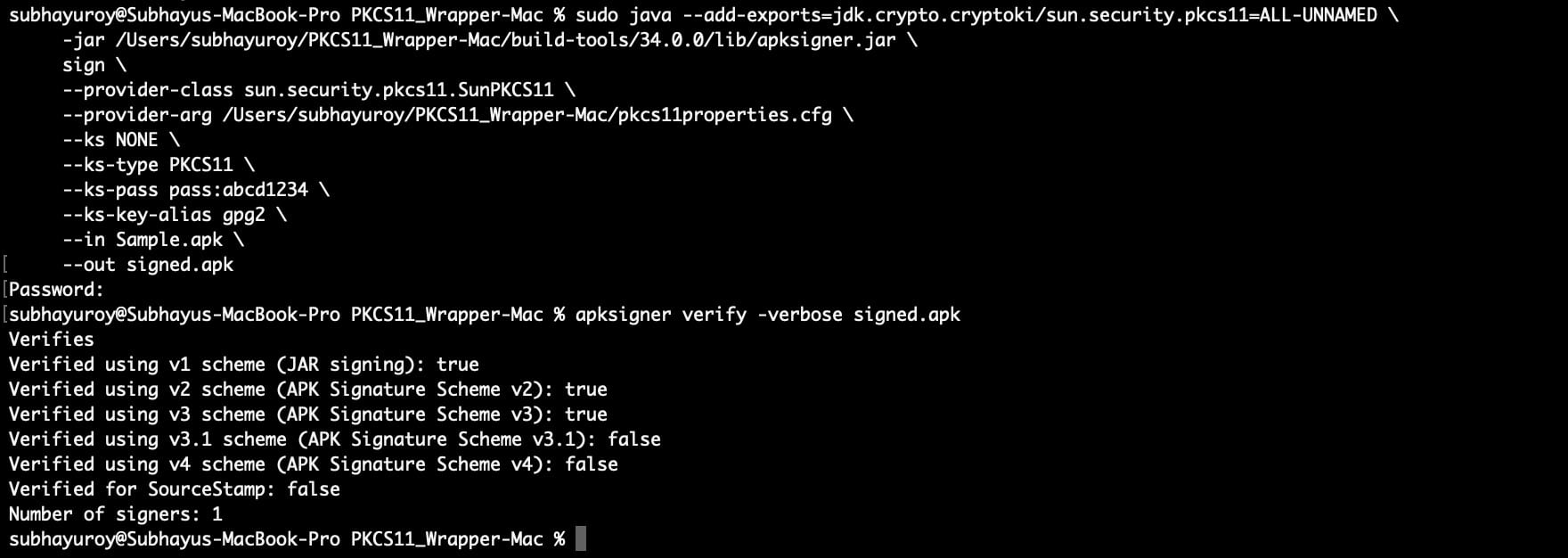

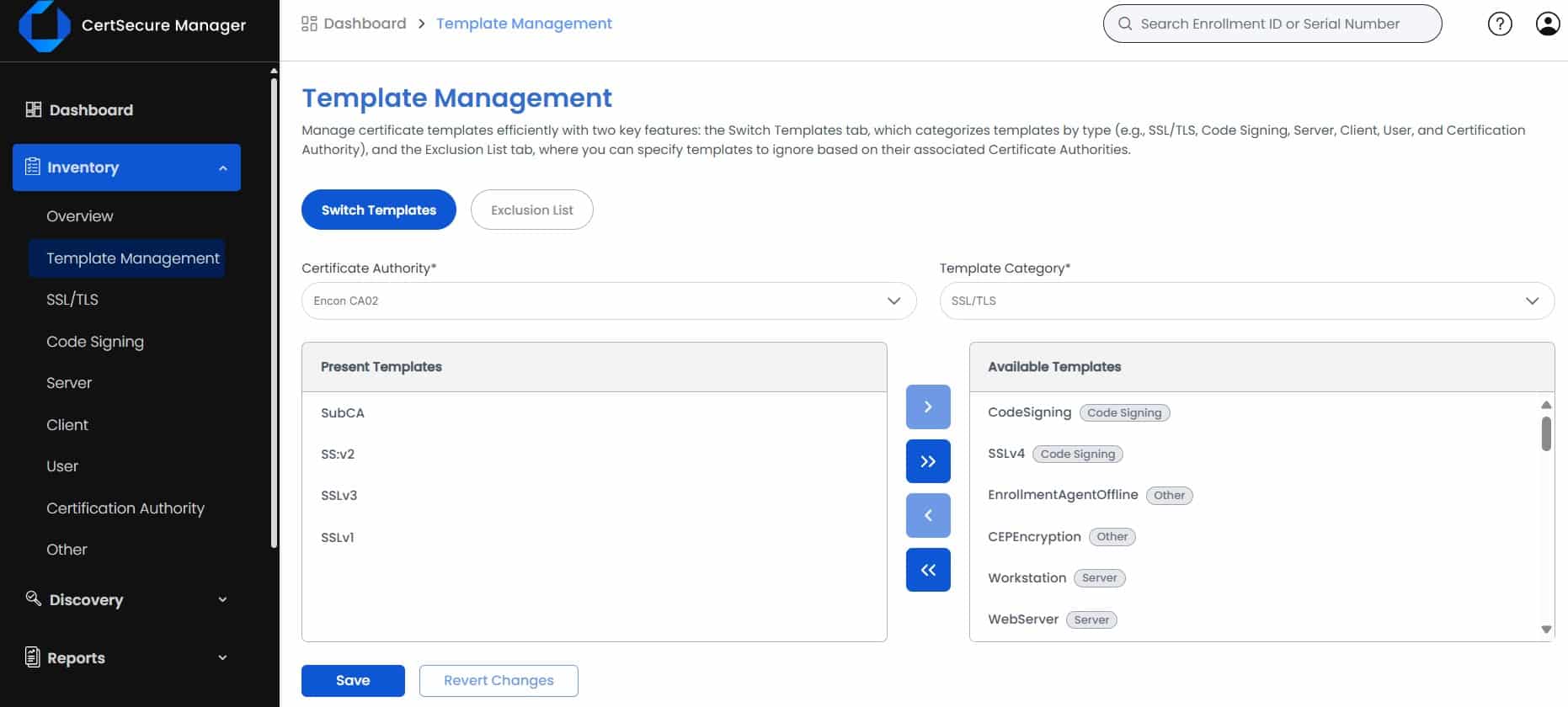

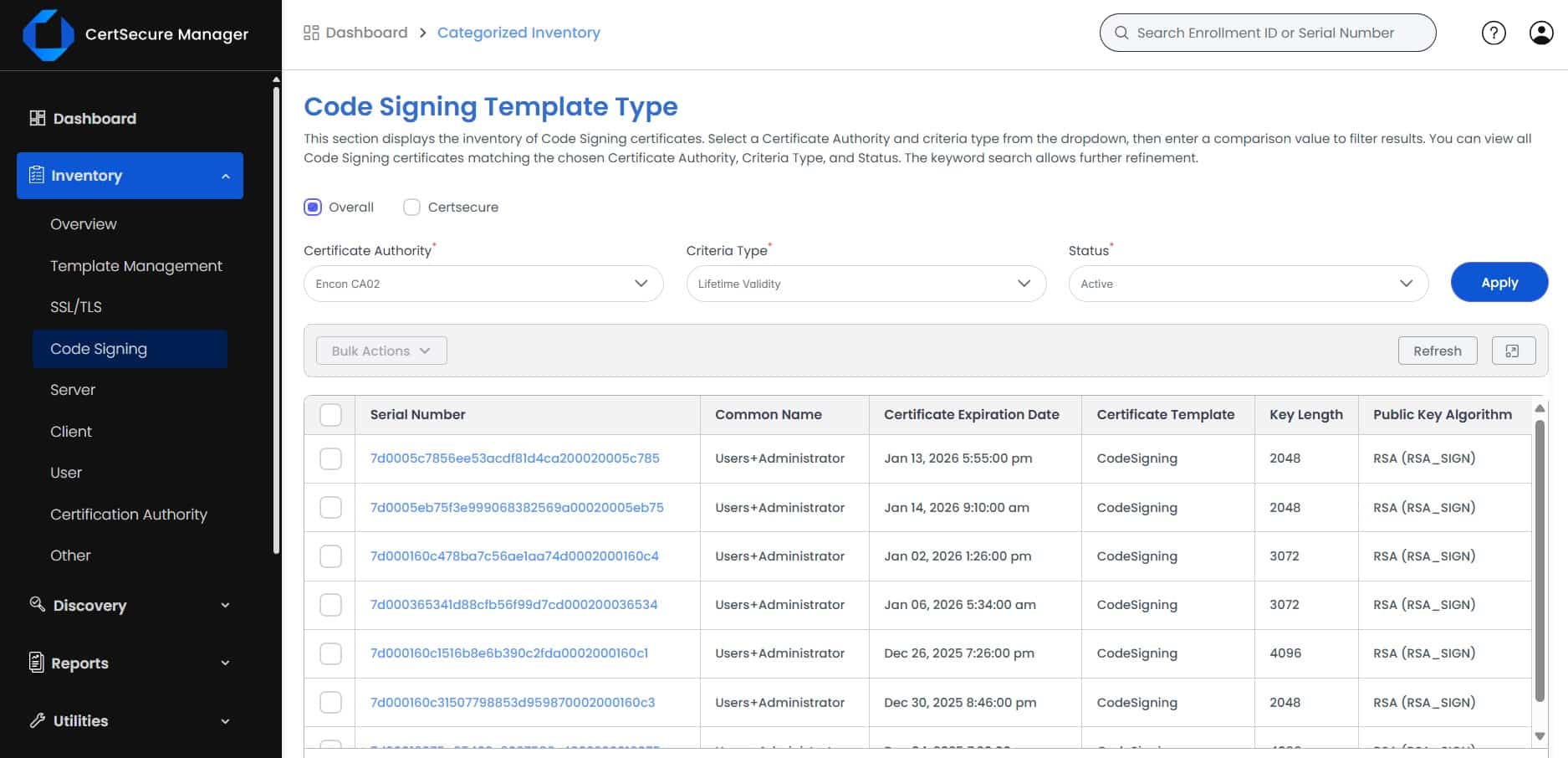

We began by gathering detailed information about the client’s PKI environment, which we used to develop a comprehensive kick-off deck. This phase included evaluating their existing cryptographic environment, including security policies, procedures, and standards; defining use cases based on system requirements, such as SSL/TLS certificates for secure communication, S/MIME for secure email, client and server authentication, and code signing; and establishing implementation tasks and timelines. Based on this information, we presented several design options and worked closely with the client to finalize the new PKI architecture, ensuring it met their use cases and security needs.
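Each of these use cases ultimately maps to the Extended Key Usage (EKU) values a certificate template must carry. The Python sketch below pairs the use cases with the standard RFC 5280 EKU OIDs and checks whether a certificate’s EKU list covers a given use. The `USE_CASES` mapping and helper names are hypothetical, and the sketch ignores the RFC 5280 rule that a certificate with no EKU extension at all is not restricted by EKU.

```python
from typing import Dict, List

# Standard Extended Key Usage OIDs (RFC 5280, id-kp arc 1.3.6.1.5.5.7.3).
EKU_OIDS: Dict[str, str] = {
    "serverAuth": "1.3.6.1.5.5.7.3.1",
    "clientAuth": "1.3.6.1.5.5.7.3.2",
    "codeSigning": "1.3.6.1.5.5.7.3.3",
    "emailProtection": "1.3.6.1.5.5.7.3.4",
}

# Hypothetical mapping from the engagement's use cases to required EKUs.
USE_CASES: Dict[str, List[str]] = {
    "SSL/TLS server": ["serverAuth"],
    "S/MIME email": ["emailProtection"],
    "Client authentication": ["clientAuth"],
    "Code signing": ["codeSigning"],
}


def required_oids(use_case: str) -> List[str]:
    """Return the EKU OIDs a certificate needs for the given use case."""
    return [EKU_OIDS[name] for name in USE_CASES[use_case]]


def permits(cert_ekus: List[str], use_case: str) -> bool:
    """True if a certificate's EKU list covers every OID the use case needs."""
    return set(required_oids(use_case)) <= set(cert_ekus)


web_cert = ["1.3.6.1.5.5.7.3.1", "1.3.6.1.5.5.7.3.2"]  # server + client auth
print(permits(web_cert, "Client authentication"))  # True
print(permits(web_cert, "Code signing"))           # False
```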

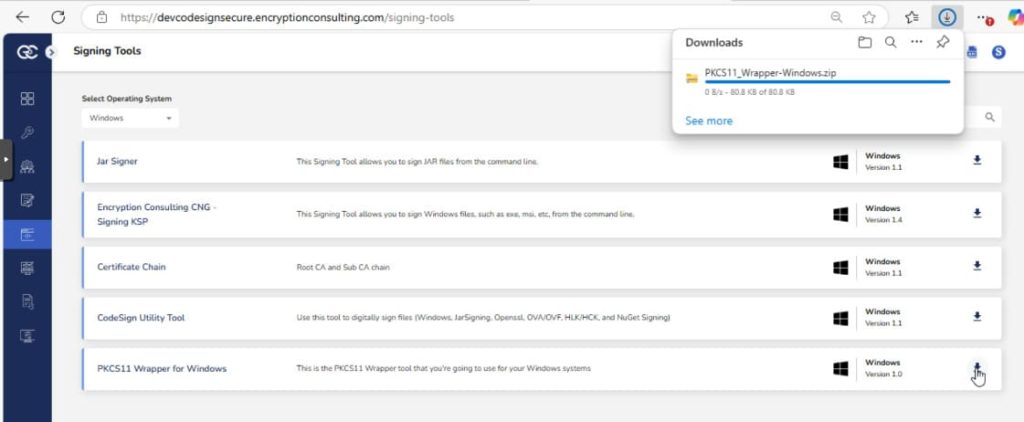

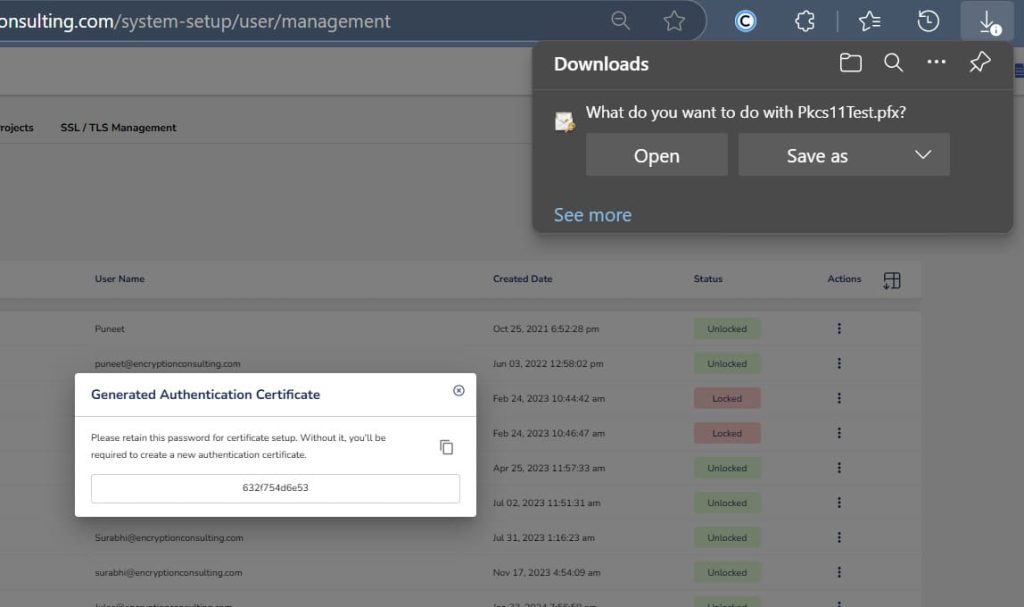

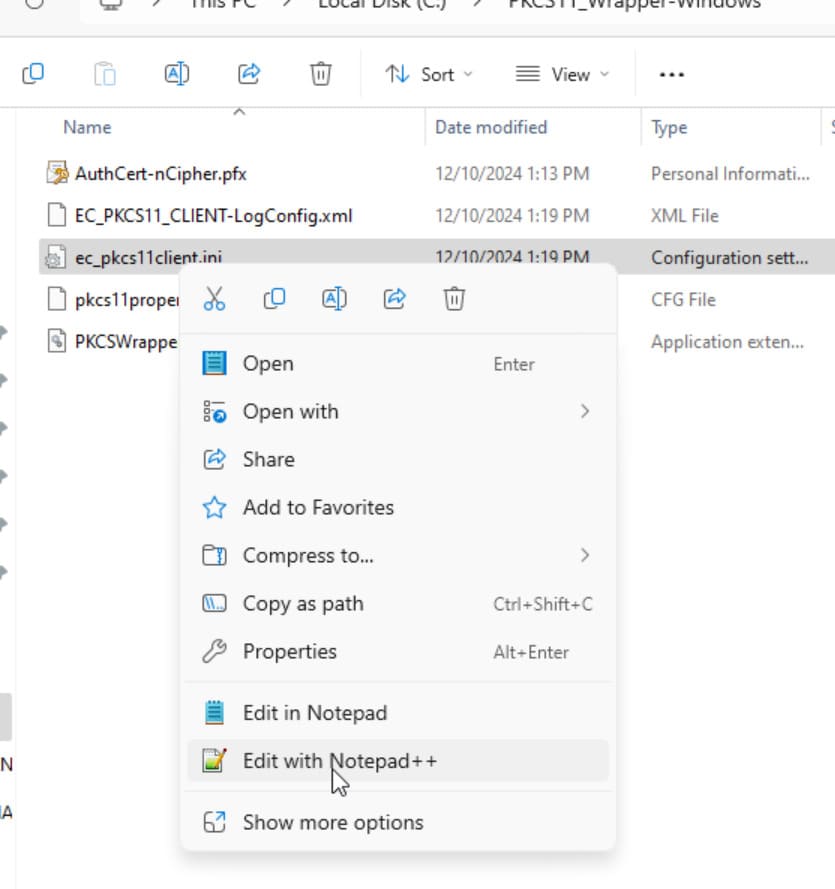

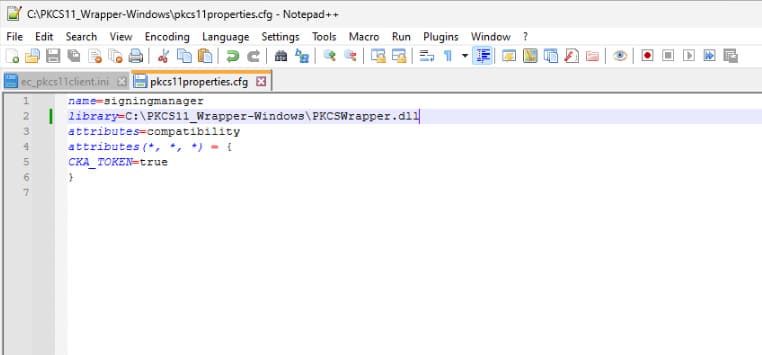

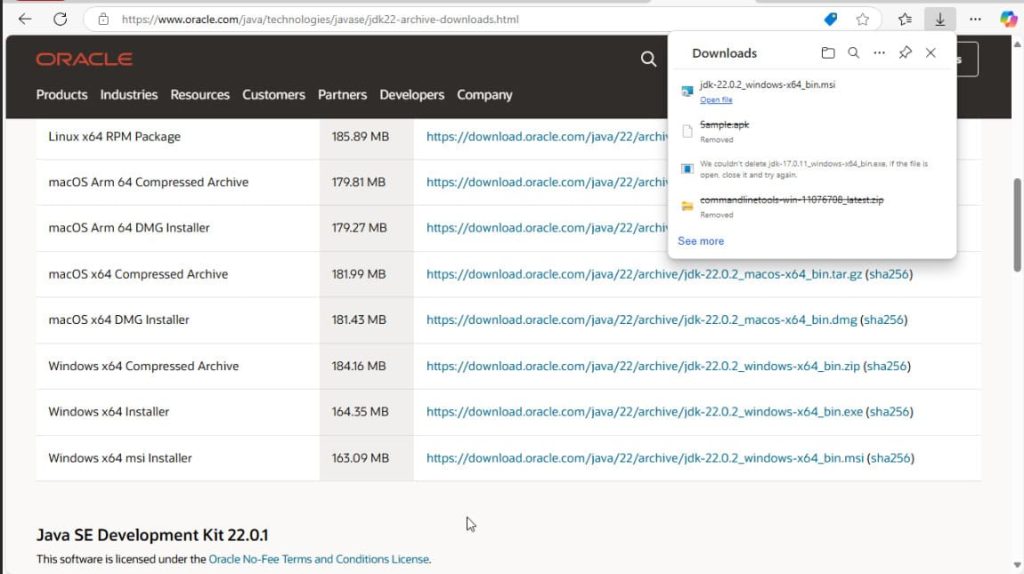

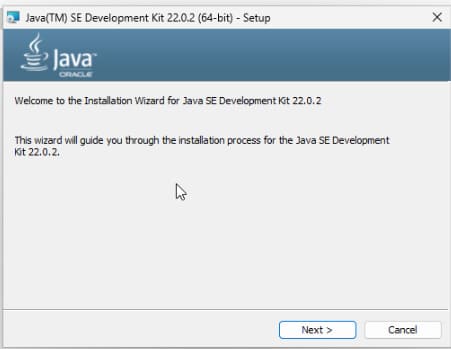

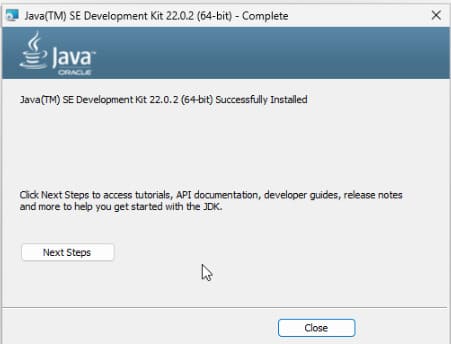

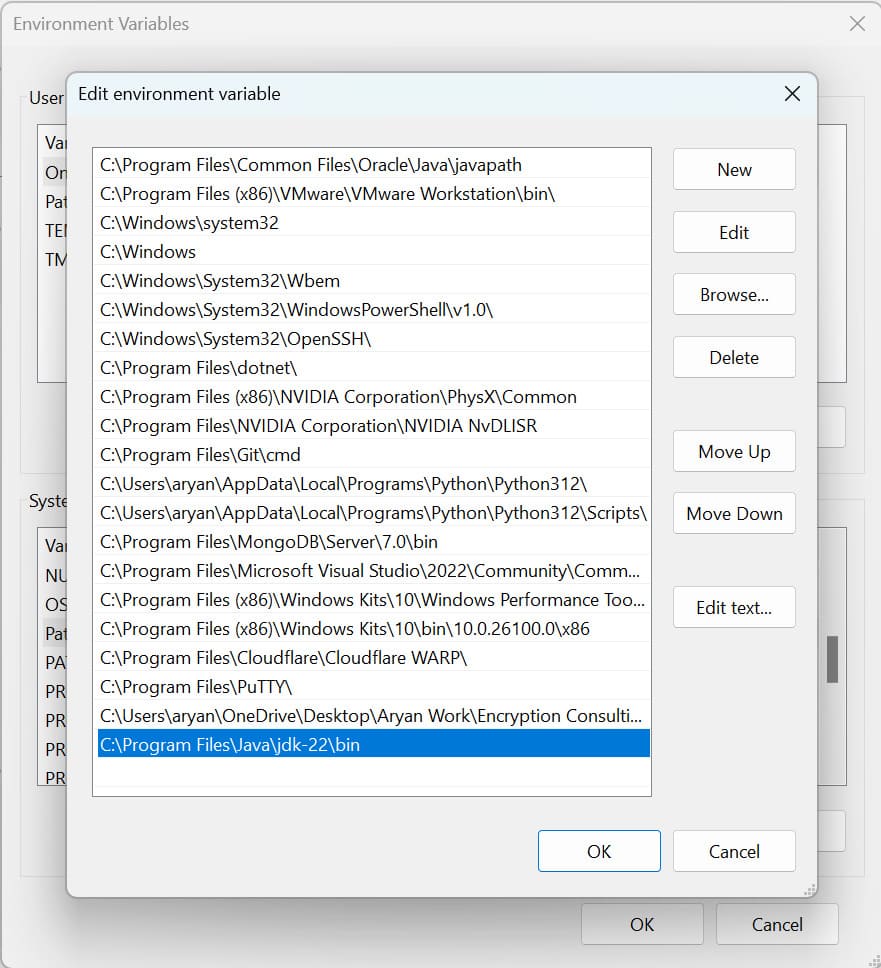

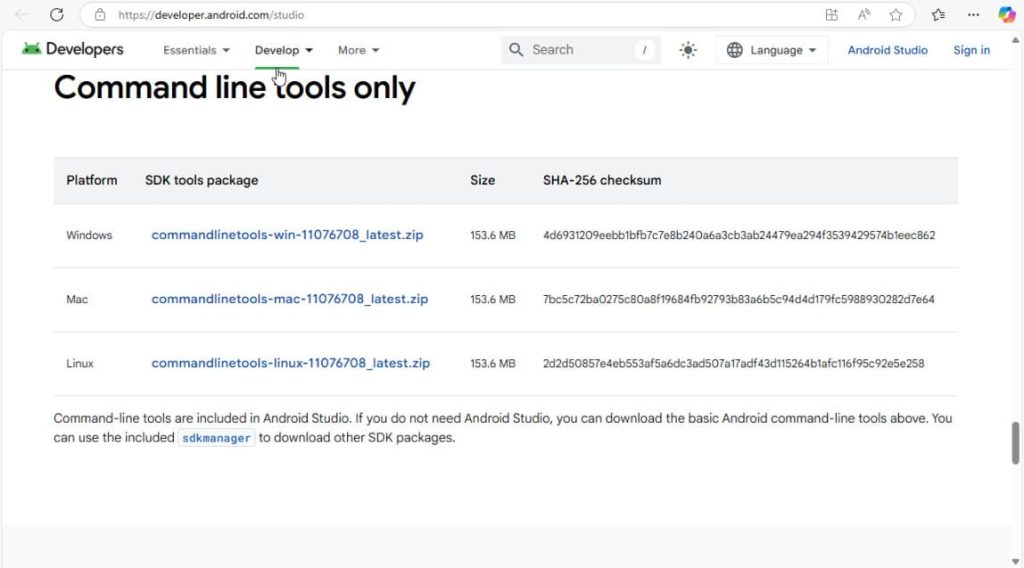

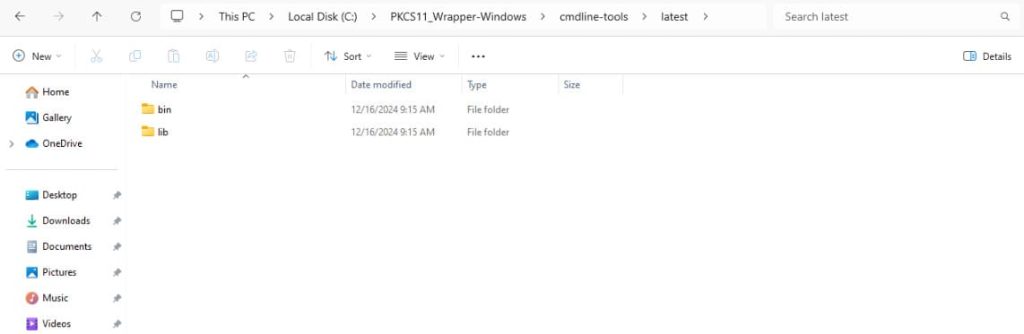

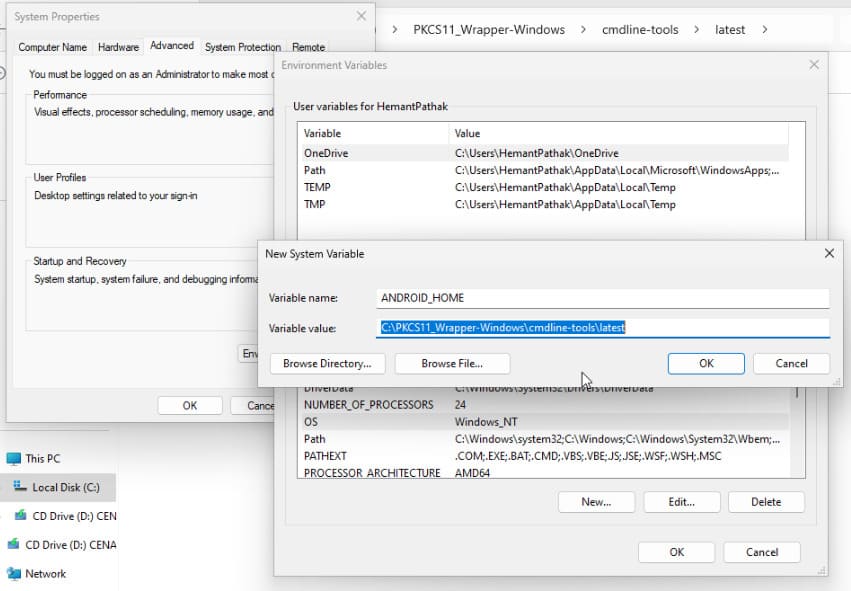

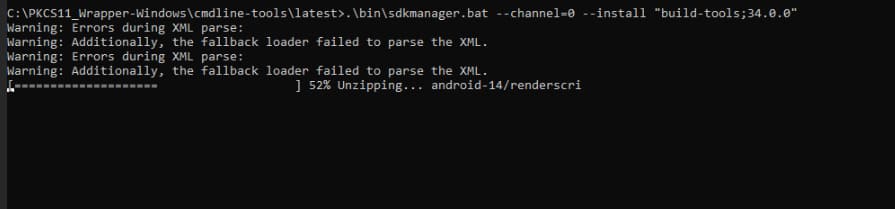

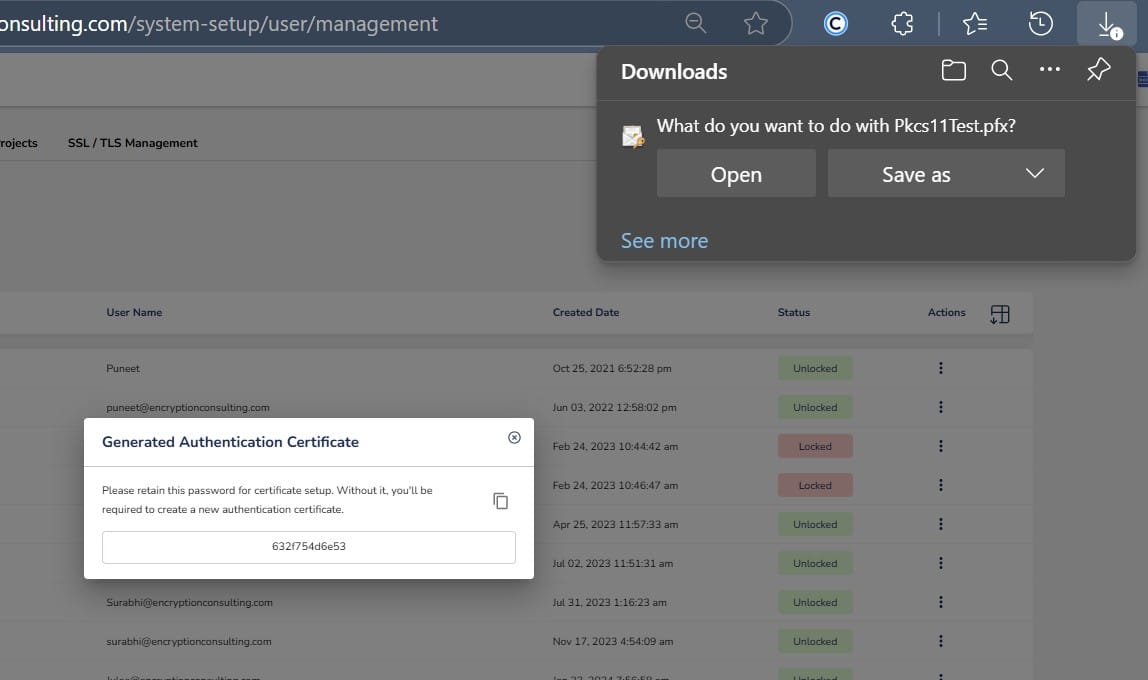

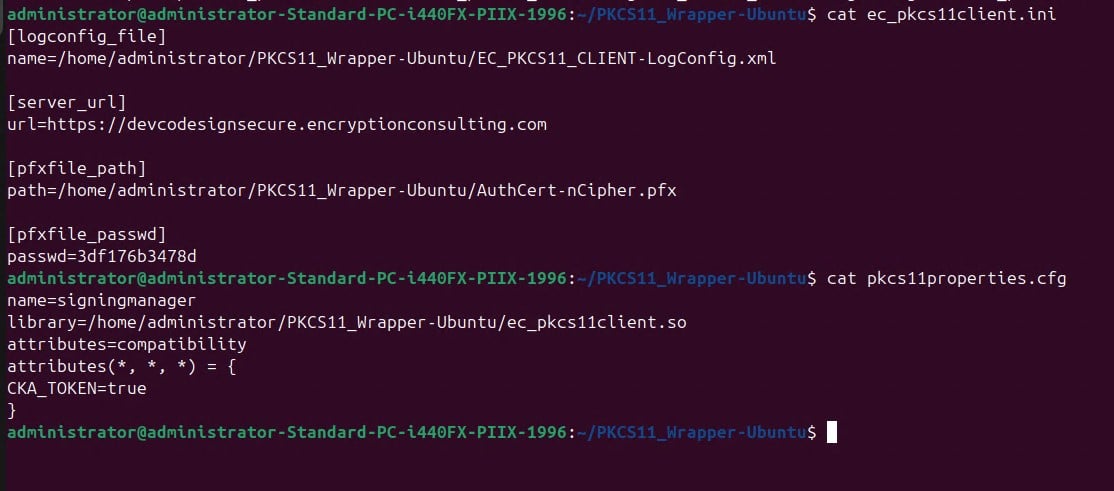

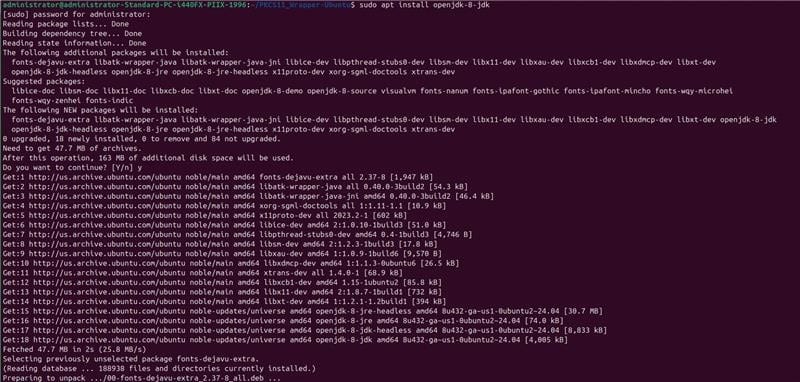

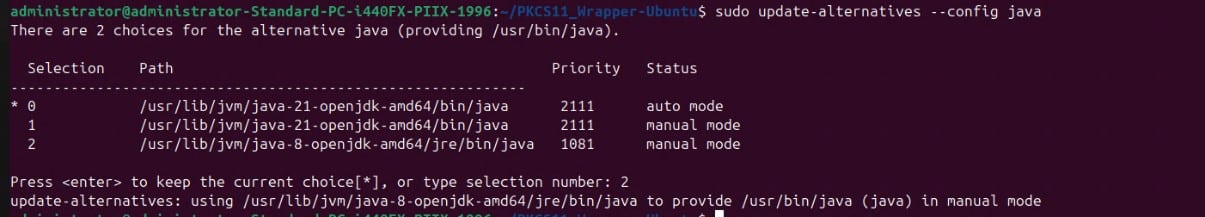

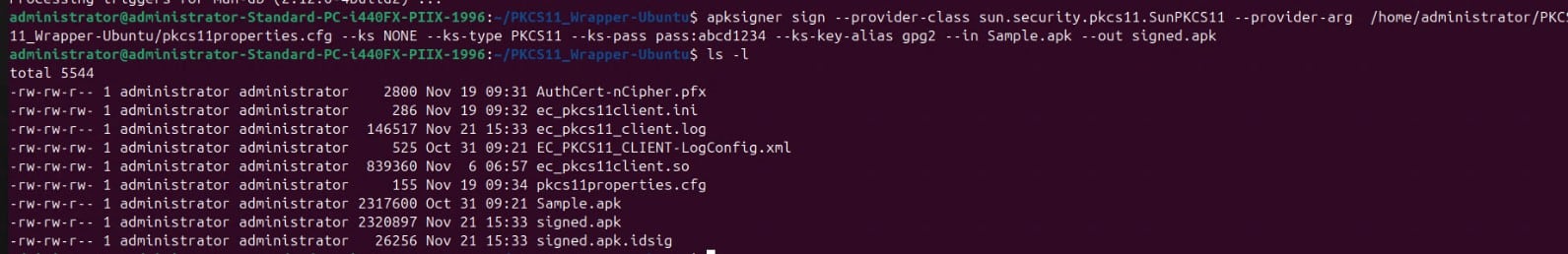

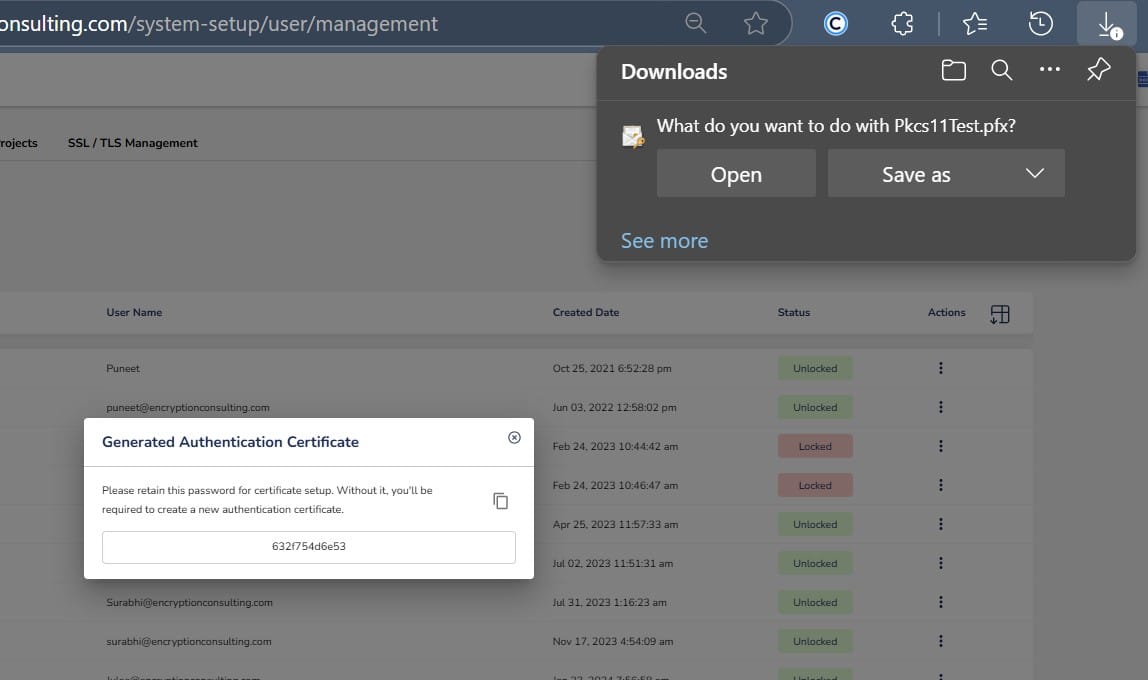

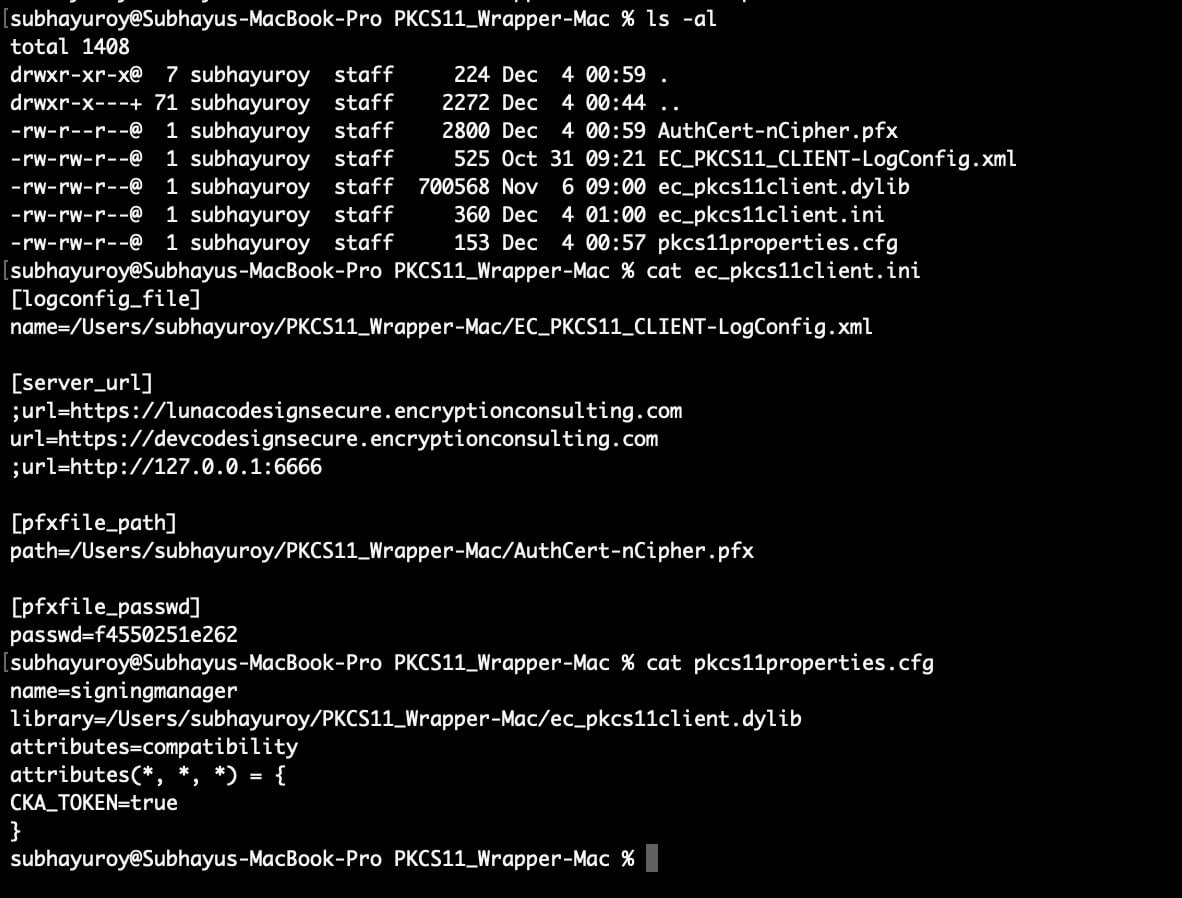

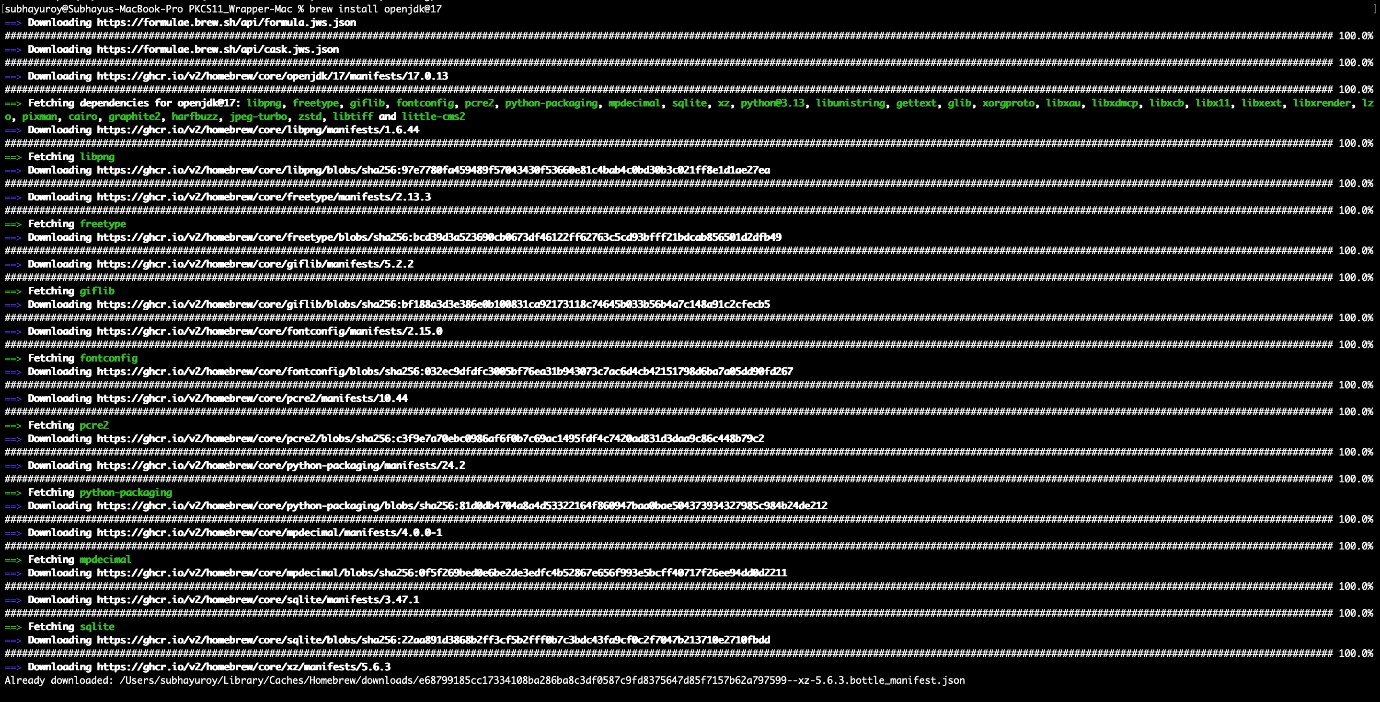

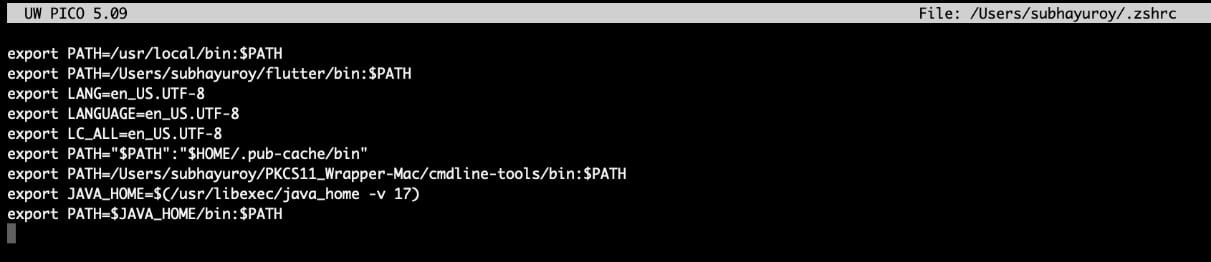

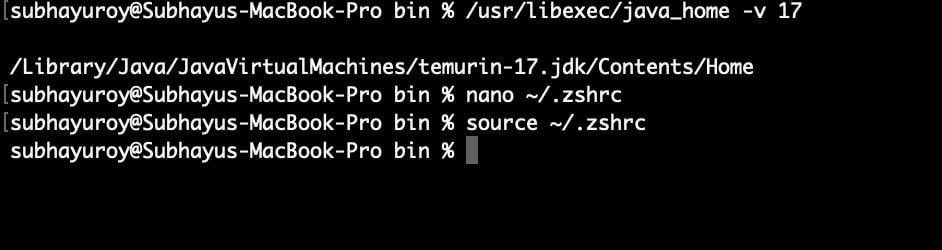

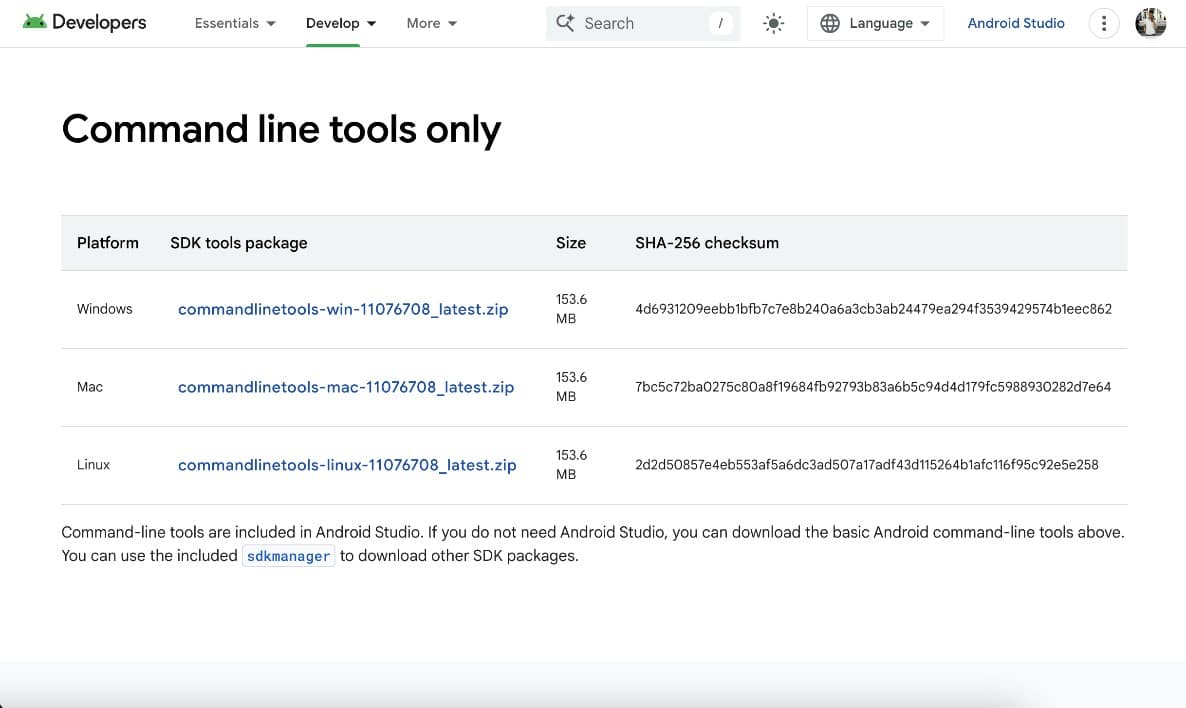

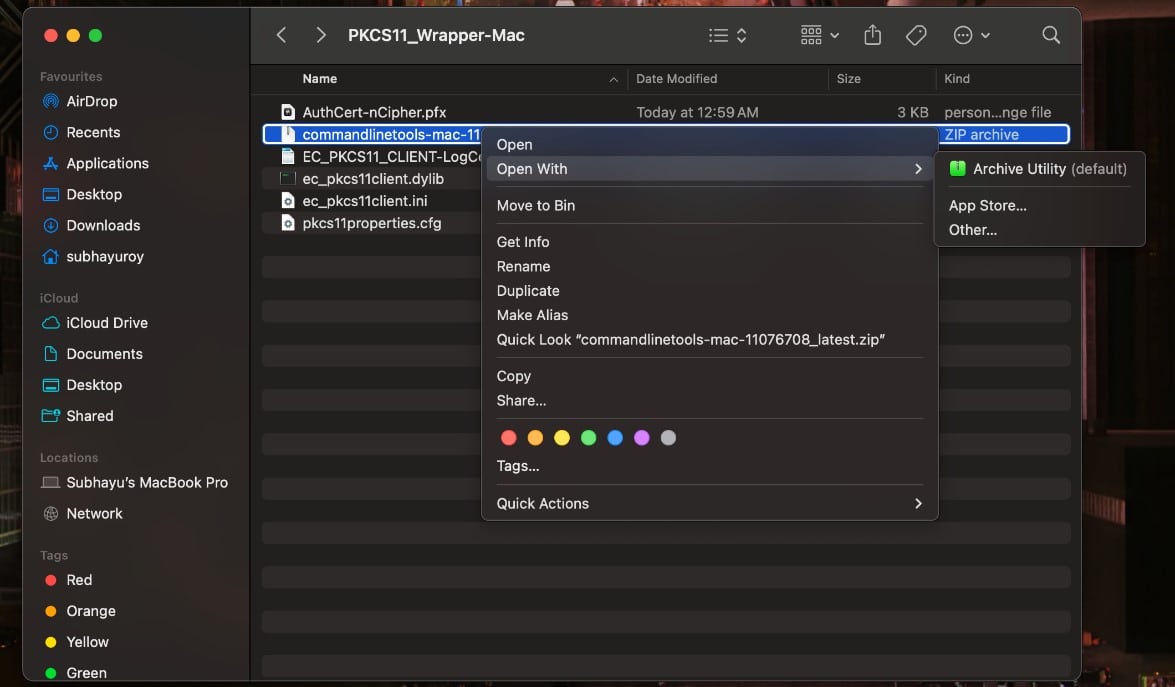

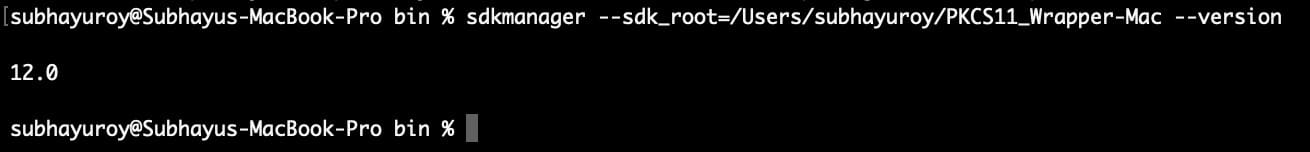

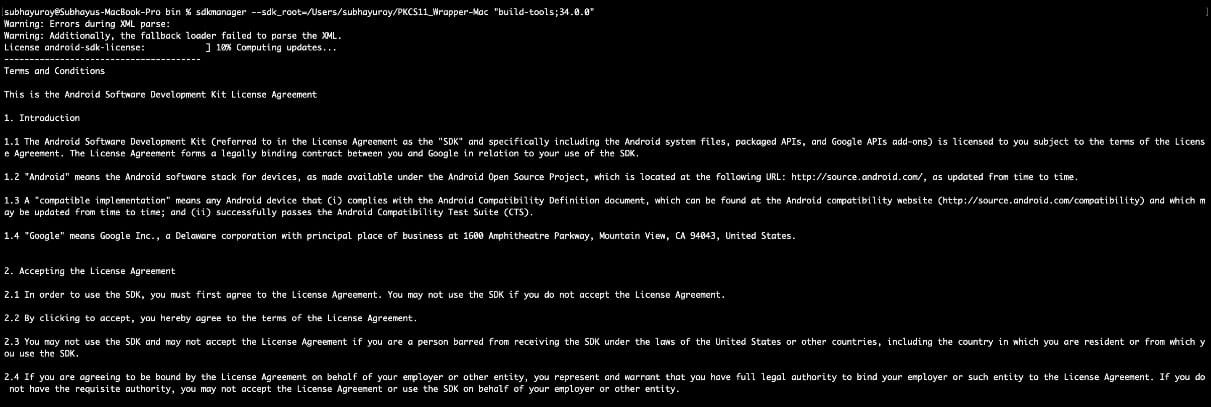

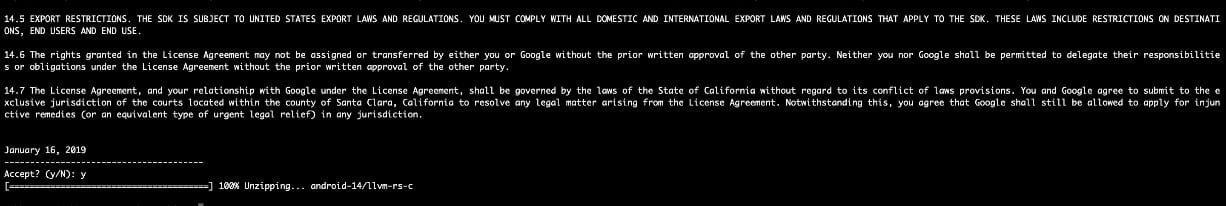

Next, we moved on to the build stage, where we focused not only on the technical infrastructure itself but also on integrating Hardware Security Modules (HSMs) for secure key storage. We held a key ceremony to establish customer trust and to support compliance with regulations and standards such as HIPAA and FIPS. During the ceremony, we generated the cryptographic keys inside the HSMs and restricted access to authorized personnel only. All Root CA and Issuing CA private keys were stored in tamper-resistant HSMs to maintain compliance and operational integrity.

Having evaluated the client’s infrastructure and identified their security requirements and main challenges, we designed and implemented a dependable, scalable two-tier Microsoft PKI. It consists of a secure offline Root CA, which serves as the foundation of trust, and two online subordinate Issuing CAs that issue machine-based and user-based certificates.

We created thorough PKI documentation covering the architecture, Key Ceremony procedures, Certificate Policy (CP), and Certification Practice Statement (CPS). These documents provided clear guidance and standardized processes for managing and scaling the PKI securely. We then held knowledge transfer sessions with the client covering a detailed plan for PKI operations and disaster recovery procedures, so that the infrastructure would stay resilient and secure against emerging threats.

We configured the CAPolicy.inf file on the Root CA and Issuing CAs, specifying the PathLength basic constraint to limit subordinate CA tiers. This mitigated the risk of rogue subordinate CAs and enhanced the security and trust of the PKI system.
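CAPolicy.inf is a plain-text INF file saved as %windir%\CAPolicy.inf before the AD CS role is configured. The Python sketch below renders one so the tier-specific values sit side by side: PathLength=1 on the Root CA permits exactly one tier of Issuing CAs beneath it, and PathLength=0 on an Issuing CA permits none. The section and key names follow Microsoft’s documented CAPolicy.inf syntax; the `render_capolicy` helper and every numeric value (key length, renewal period, CRL period) are hypothetical placeholders, not the client’s configuration.

```python
def render_capolicy(path_length: int, renewal_years: int,
                    crl_period_weeks: int,
                    load_default_templates: bool = False) -> str:
    """Build CAPolicy.inf text; all values passed in are illustrative placeholders."""
    lines = [
        "[Version]",
        'Signature="$Windows NT$"',
        "",
        "[BasicConstraintsExtension]",
        f"PathLength={path_length}",  # CA tiers allowed below this CA
        "Critical=Yes",
        "",
        "[Certsrv_Server]",
        "RenewalKeyLength=4096",
        "RenewalValidityPeriod=Years",
        f"RenewalValidityPeriodUnits={renewal_years}",
        "CRLPeriod=Weeks",
        f"CRLPeriodUnits={crl_period_weeks}",
        # Only meaningful on an enterprise CA; 0 stops default templates loading.
        f"LoadDefaultTemplates={int(load_default_templates)}",
    ]
    return "\r\n".join(lines) + "\r\n"  # Windows line endings


root_inf = render_capolicy(path_length=1, renewal_years=20, crl_period_weeks=26)
issuing_inf = render_capolicy(path_length=0, renewal_years=10, crl_period_weeks=1)
print(root_inf)
```

Generating both files from one template keeps the tiers consistent and makes the PathLength difference between them easy to review before the ceremony.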

To enhance the security of the Root CA and Issuing CA private keys, we defined a key custodian matrix, ensuring that no single administrator had full control over the cryptographic keys. Key management operations, including key generation, access, and recovery, required approval from multiple custodians, enforcing separation of duties. This reduced the risk of unauthorized changes and insider threats while improving accountability and oversight.
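The separation-of-duties rule can be expressed as an M-of-N approval check. This Python sketch models only the procedural side of a key custodian matrix; the custodian names, operations, and quorum sizes are hypothetical, and in production a quorum like this is typically enforced by the HSM itself, where supported, rather than by application code.

```python
from typing import FrozenSet, NamedTuple, Set


class CustodianRule(NamedTuple):
    quorum: int                 # M: distinct approvals required
    custodians: FrozenSet[str]  # who may approve this operation


# Hypothetical custodian matrix; names and quorum sizes are placeholders.
KEY_CUSTODIANS = frozenset({"alice", "bob", "carol", "dave", "erin"})
MATRIX = {
    "key_generation": CustodianRule(3, KEY_CUSTODIANS),
    "key_recovery": CustodianRule(3, KEY_CUSTODIANS),
    "key_access": CustodianRule(2, frozenset({"alice", "bob", "carol"})),
}


def approved(operation: str, requester: str, approvers: Set[str]) -> bool:
    """True only if enough eligible custodians other than the requester approved."""
    rule = MATRIX[operation]
    # Separation of duties: self-approval does not count, and approvals from
    # people outside this operation's custodian list are ignored.
    eligible = (set(approvers) - {requester}) & rule.custodians
    return len(eligible) >= rule.quorum


print(approved("key_access", "alice", {"alice", "bob"}))  # False: only bob counts
print(approved("key_access", "alice", {"bob", "carol"}))  # True
```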

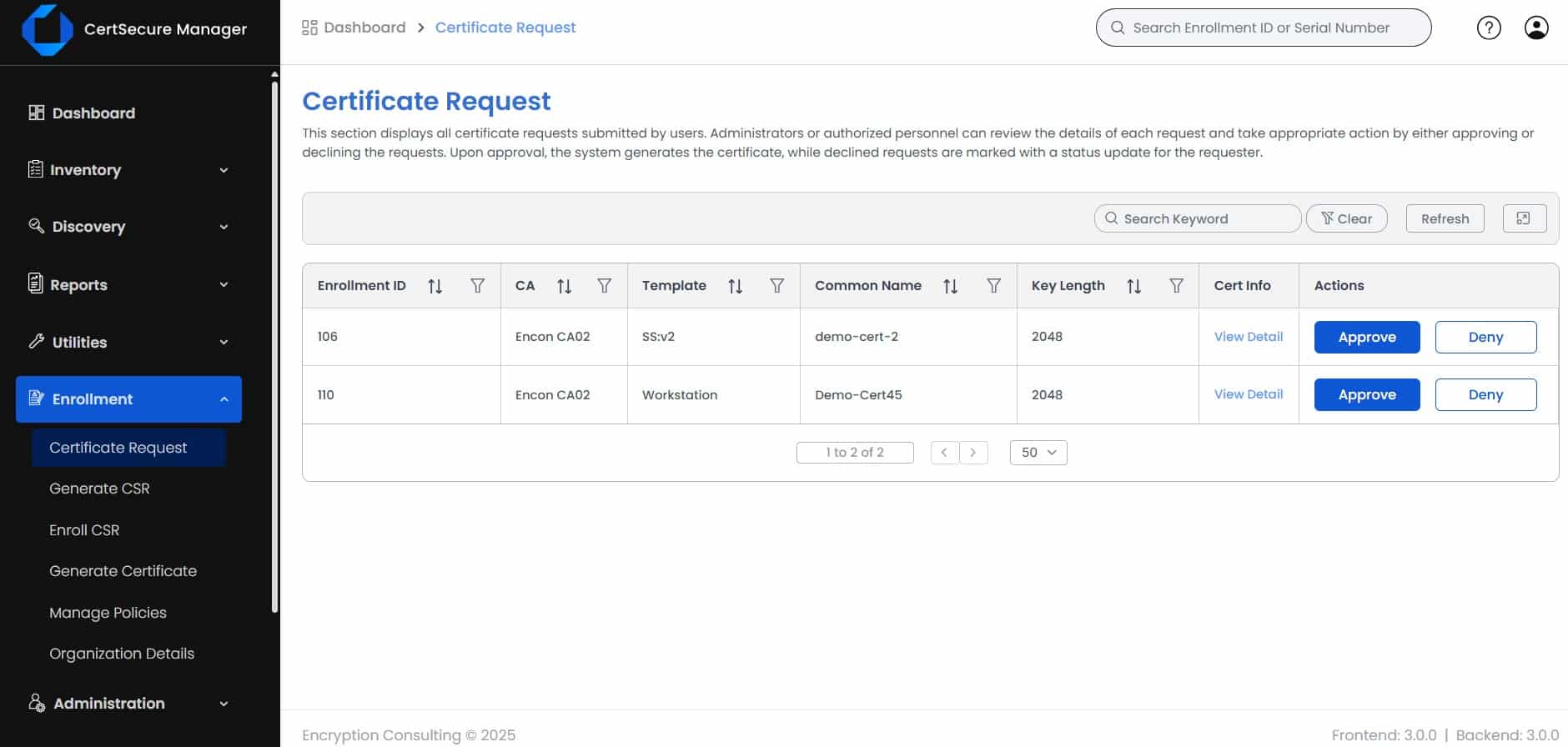

We also introduced clear guidelines for generating and managing self-signed certificates, ensuring they are tracked, regularly reported to the relevant team, and properly decommissioned when no longer needed. An approval process now governs their creation, so only authorized individuals can generate them.
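The tracking and reporting guidelines boil down to keeping an inventory and reviewing it on a schedule. The Python sketch below shows one way to bucket inventory entries for a periodic report. The `TrackedCert` record, the 30-day warning window, and the sample entries are hypothetical; in practice the expiry date would be read from each certificate rather than typed in by hand.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Dict, List


@dataclass
class TrackedCert:
    """One row of a hypothetical self-signed certificate inventory."""
    name: str
    owner: str
    not_after: date
    approved: bool  # went through the approval process before issuance


def build_report(inventory: List[TrackedCert], today: date,
                 warn_days: int = 30) -> Dict[str, List[str]]:
    """Group certificates for the periodic report sent to the owning team."""
    report: Dict[str, List[str]] = {"expired": [], "expiring": [], "unapproved": []}
    horizon = today + timedelta(days=warn_days)
    for cert in inventory:
        if not cert.approved:
            report["unapproved"].append(cert.name)
        if cert.not_after < today:
            report["expired"].append(cert.name)
        elif cert.not_after <= horizon:
            report["expiring"].append(cert.name)
    return report


inventory = [
    TrackedCert("lab-web", "infra", date(2024, 12, 1), approved=True),
    TrackedCert("dev-api", "apps", date(2025, 1, 20), approved=True),
    TrackedCert("test-vpn", "network", date(2026, 1, 1), approved=False),
]
print(build_report(inventory, today=date(2025, 1, 1)))
# {'expired': ['lab-web'], 'expiring': ['dev-api'], 'unapproved': ['test-vpn']}
```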

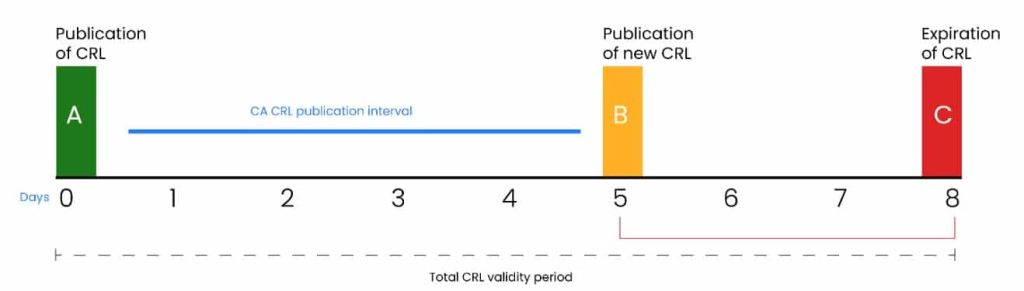

We improved the security of the PKI and simplified device management by integrating the Network Device Enrollment Service (NDES) to securely issue certificates to mobile devices managed by the client. We deployed NDES on the internal corporate network and placed a Web Application Proxy (WAP) server in the perimeter network to act as a reverse proxy for NDES enrollment requests. Firewall rules permit only the necessary traffic on port 443, so that all NDES communication takes place over HTTPS. For certificate revocation management, we also deployed an Online Certificate Status Protocol (OCSP) Responder, which lets clients check a certificate’s revocation status on demand.
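The enrollment path is easiest to reason about as a default-deny allowlist. This Python sketch models the two hops described here (device to WAP, then WAP to NDES) as firewall flows. The zone names, the `.example` host names, and `is_permitted` are hypothetical, and traffic to the OCSP Responder is deliberately left out of the model.

```python
from typing import NamedTuple


class FlowRule(NamedTuple):
    src_zone: str
    dst_host: str
    port: int


# Hypothetical host names and zones mirroring the described topology:
# managed devices reach the WAP in the perimeter network over HTTPS,
# and only the WAP may forward requests to NDES on the corporate network.
ALLOWED_FLOWS = {
    FlowRule("internet", "wap.perimeter.example", 443),
    FlowRule("perimeter", "ndes.corp.example", 443),
}


def is_permitted(src_zone: str, dst_host: str, port: int) -> bool:
    """Default deny: a flow passes only if it exactly matches an allow rule."""
    return FlowRule(src_zone, dst_host, port) in ALLOWED_FLOWS


print(is_permitted("internet", "wap.perimeter.example", 443))  # True
print(is_permitted("internet", "ndes.corp.example", 443))      # False: must go via WAP
print(is_permitted("internet", "wap.perimeter.example", 80))   # False: HTTP blocked
```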

Functional testing was critical to confirm that the system was intact and reliable. We developed test cases and ran thorough functional tests to verify that every component worked as designed, and any deviations we found were corrected immediately.

Our service didn’t stop there. We also developed and implemented a disaster recovery and business continuity plan for the PKI. These plans are essential for recovering quickly from disruptions, cyberattacks, or data breaches and for keeping business operations running. We recommended storing cryptographic keys in HSMs and scheduling regular backups of all PKI-related data and configurations. On top of that, we added redundancy and failover systems to keep the PKI available even if a component fails.
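Scheduled backups only help if someone notices when one is missed. The Python sketch below checks each PKI component’s most recent backup against a recovery point objective (RPO). The component names and RPO values are hypothetical placeholders, not figures from the client’s plan.

```python
from datetime import datetime, timedelta
from typing import Dict, List

# Hypothetical recovery point objectives (maximum acceptable backup age)
# for each PKI component; real values come from the DR/BCP plan.
RPO: Dict[str, timedelta] = {
    "ca_database": timedelta(days=1),
    "ca_configuration": timedelta(days=7),
    "hsm_key_backup": timedelta(days=30),
}


def stale_backups(last_backup: Dict[str, datetime], now: datetime) -> List[str]:
    """Return components whose newest backup is missing or older than its RPO."""
    stale = []
    for component, max_age in RPO.items():
        taken = last_backup.get(component)
        if taken is None or now - taken > max_age:
            stale.append(component)
    return stale


last = {
    "ca_database": datetime(2025, 3, 1, 0, 0),
    "ca_configuration": datetime(2025, 2, 1, 0, 0),
}
print(stale_backups(last, now=datetime(2025, 3, 1, 12, 0)))
# ['ca_configuration', 'hsm_key_backup']
```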

With this broad, multi-phase approach, we delivered a reliable, scalable, and compliant PKI that addressed the client’s immediate needs while preparing them for future growth.

Impact

Encryption Consulting tackled each of the key challenges by implementing a two-tier Microsoft PKI. With the new infrastructure in place, the client eliminated the operational inefficiencies and compliance gaps identified during the assessment, reduced the risk of data breaches, and was positioned for long-term growth and security.

The secure offline Root CA, together with the online subordinate CAs, established the integrity and trustworthiness of every digital certificate issued. Keeping the Root CA offline and storing private keys in HSMs protected those keys and other sensitive operations from unauthorized access.

Comprehensive documentation gave the healthcare organization clear, detailed guidelines for managing and operating their PKI. It made day-to-day management more efficient, simplified scaling, and made the system more robust and reliable.

By shortening certificate validity periods and introducing key custodians, the organization narrowed the window of opportunity for attackers. Unauthorized changes became much harder to make, and potential issues were flagged and resolved promptly.

Clear guidelines for managing self-signed certificates gave the client better control over how those certificates were issued and used. Regular tracking and reporting to the security team ensured proper lifecycle management and prevented expired certificates from causing downtime or operational disruptions. The streamlined process also reduced administrative overhead by keeping certificates up to date and properly monitored.

With the disaster recovery and business continuity plan in place, the firm now had a safety net that allowed it to recover quickly from unexpected disruptions and continue operating with minimal downtime. Scheduled backups and redundancy measures ensured that sensitive data and operational systems were protected and could be restored quickly after a failure. This gave them confidence that their critical systems would remain operational even during emergencies.

Overall, the new PKI added a strong layer of protection to the client’s digital environment. Because communications and sensitive data were now encrypted and authenticated, the risk of unauthorized access and data breaches dropped. By establishing a sound, scalable PKI, we addressed the vulnerabilities that had been holding them back.

Conclusion

By implementing our comprehensive PKI, the healthcare provider enhanced its services while maintaining the trust of stakeholders and customers. This solution also allowed them to build an effective security framework and increase their scalability.

Beyond ensuring compliance, they gained a competitive edge in the market, freeing them to focus on delivering high-quality patient care and maintaining trust in their digital services.

A solid PKI might be the answer if you want to improve data security, optimize your certificate management, and stay compliant with evolving regulations. Let’s talk about how we can help you put in place a PKI customized to your requirements!