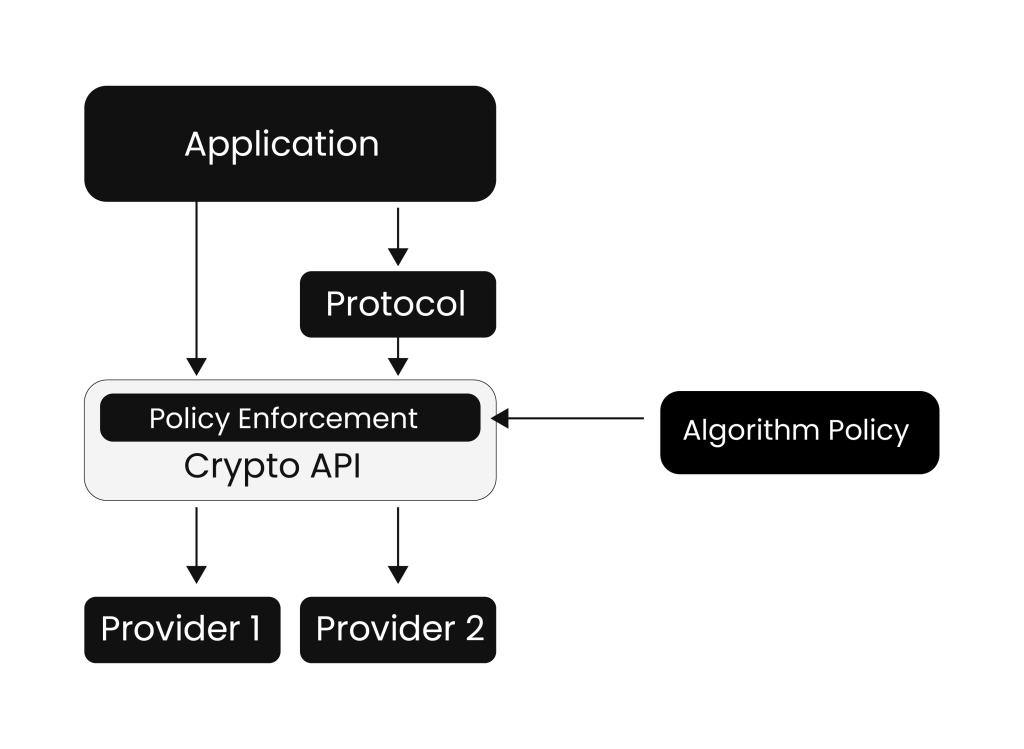

Developed by Encryption Consulting, CodeSign Secure is a centralized, secure, and scalable code signing platform built to help organizations sign code confidently without compromising on security or speed. We designed it for modern DevOps environments: it integrates with popular CI/CD pipelines (such as Azure DevOps, Jenkins, and GitLab) and enforces strict access controls and approval workflows to keep your signing process clean and compliant.

Encryption Consulting’s CodeSign Secure continues to evolve with the release of version 3.02, a landmark update that adds Post-Quantum Cryptography (PQC) support and makes it one of the first enterprise code signing solutions prepared for the quantum computing era. V3.02 includes built-in support for MLDSA (ML-DSA, the Module-Lattice-Based Digital Signature Algorithm standardized in FIPS 204) and LMS (Leighton-Micali Signatures, specified in NIST SP 800-208), two NIST-approved, quantum-resistant signature schemes.

Whether you’re already thinking about quantum readiness or just looking to strengthen your code signing infrastructure, CodeSign Secure v3.02 is built to help you stay ahead, securely and smartly.

Why PQC Matters in Code Signing

Let’s face it – quantum computing is no longer just a distant theory. It’s rapidly progressing, and while it’s not mainstream yet, it’s close enough that organizations can’t afford to ignore its impact on cybersecurity, especially when it comes to code signing.

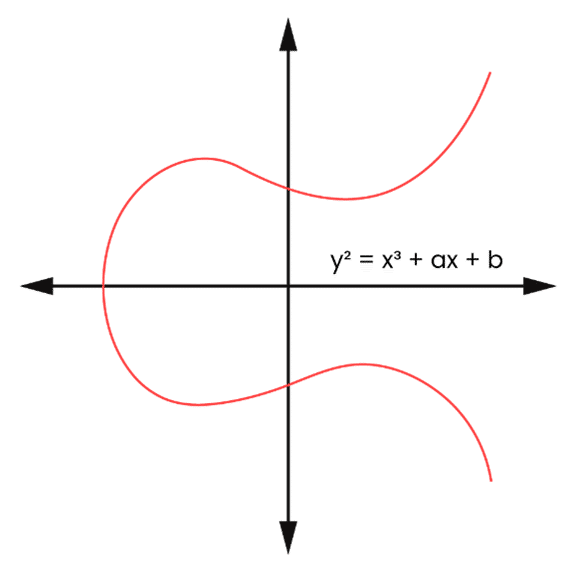

Traditional digital signature algorithms like RSA and ECC have served us well for years, but they’re vulnerable to sufficiently powerful quantum computers. A large-scale quantum computer running Shor’s algorithm could derive a private key from its public key, something that is computationally infeasible for classical computers. That’s a big deal for code signing.

Code signatures are meant to prove that software hasn’t been tampered with and comes from a trusted source. If quantum computers can forge those signatures, it opens the door to serious threats, such as malware impersonating trusted apps, backdoors inserted into software updates, and more. This is where Post-Quantum Cryptography (PQC) comes in.

PQC algorithms are designed to be resistant to quantum attacks. By adopting PQC in code signing workflows, you’re not just reacting to a future problem—you’re proactively protecting your software supply chain for the long haul. It’s about future-proofing your trust model, especially for long-lived software like IoT firmware, embedded systems, and government-grade applications.

CodeSign Secure v3.02 supports PQC out of the box, giving organizations a head start in adapting to the next era of cryptography without sacrificing usability or performance. It’s a smart move now and a necessary one for the future.

New PQC Algorithms Supported in v3.02

MLDSA (44, 65, 87) and LMS

With version 3.02, our CodeSign Secure introduces support for cutting-edge Post-Quantum Cryptography (PQC) algorithms, giving your code signing process a serious upgrade in future-readiness. So, what’s new?

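MLDSA (Module-Lattice-Based Digital Signature Algorithm) – 44, 65, 87

MLDSA (standardized by NIST in FIPS 204 and derived from CRYSTALS-Dilithium) is a quantum-safe digital signature algorithm based on module-lattice cryptography. The numbers 44, 65, and 87 name its three parameter sets, which target NIST security categories 2, 3, and 5 respectively. MLDSA is designed to provide strong security against quantum attacks while remaining efficient enough for real-world applications.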

- MLDSA-44: Optimized for speed and smaller signatures, which is ideal for lightweight signing use cases.

- MLDSA-65: A balanced option offering strong security with good performance.

- MLDSA-87: The most secure variant, great for signing sensitive or high-value software assets.

By offering multiple MLDSA levels, our CodeSign Secure lets you choose the right balance between performance and protection, depending on your software and regulatory needs.
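To make that trade-off concrete, here is a small shell helper that prints the key and signature sizes published in FIPS 204 for each ML-DSA parameter set. The function name `mldsa_info` is hypothetical and not part of CodeSign Secure. The byte counts are the raw FIPS 204 encodings, so a signature file produced by a particular tool may be larger if it is wrapped or encoded (for example, in Base64).

```shell
#!/bin/sh
# Print FIPS 204 sizes (bytes) and the NIST security category for one
# ML-DSA parameter set. mldsa_info is a hypothetical helper name.
# Usage: mldsa_info 44|65|87
mldsa_info() {
  case "$1" in
    44) echo "ML-DSA-44 category=2 public_key=1312 private_key=2560 signature=2420" ;;
    65) echo "ML-DSA-65 category=3 public_key=1952 private_key=4032 signature=3309" ;;
    87) echo "ML-DSA-87 category=5 public_key=2592 private_key=4896 signature=4627" ;;
    *)
      echo "unknown ML-DSA parameter set: $1" >&2
      return 1
      ;;
  esac
}

# Compare all three parameter sets side by side.
for level in 44 65 87; do
  mldsa_info "$level"
done
```

The output shows that moving from MLDSA-44 to MLDSA-87 roughly doubles both the public key and the signature, which matters most for bandwidth-constrained targets such as firmware updates.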

LMS (Leighton-Micali Signatures) via PQSDK

LMS was one of the first PQC algorithms NIST approved for digital signatures (in SP 800-208, published in 2020), and it’s especially well-suited for long-term integrity protection of firmware, IoT devices, and critical infrastructure systems. CodeSign Secure v3.02 integrates LMS via PQSDK, allowing you to sign software with quantum-resistant credentials that stay trustworthy for years to come.
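One property worth knowing before adopting LMS: it is a stateful hash-based scheme. Each LMS key is a Merkle tree whose leaves are one-time signing keys, so a key with tree height h can produce at most 2^h signatures, and a leaf must never be used twice. The sketch below computes that signature budget and the signature size from the formulas in RFC 8554 for the SHA-256 (n = 32) parameter sets. The helper `lms_info` is hypothetical, it covers single-tree LMS only, and it does not reflect how PQSDK or CodeSign Secure choose parameters.

```shell
#!/bin/sh
# Size an LMS key per RFC 8554 for SHA-256 with n = m = 32 bytes.
# lms_info is a hypothetical helper name.
# Usage: lms_info <tree height h: 5|10|15|20|25> <Winternitz w: 1|2|4|8>
lms_info() {
  h="$1"; w="$2"; n=32
  # p = number of n-byte hash chains in an LM-OTS signature (RFC 8554, Table 1)
  case "$w" in
    1) p=265 ;;
    2) p=133 ;;
    4) p=67 ;;
    8) p=34 ;;
    *) echo "unsupported Winternitz parameter: $w" >&2; return 1 ;;
  esac
  case "$h" in
    5|10|15|20|25) ;;
    *) echo "unsupported tree height: $h" >&2; return 1 ;;
  esac
  # Every leaf is a one-time key, so one tree can sign at most 2^h messages.
  max_sigs=$(( 1 << h ))
  # q (4) + LM-OTS signature (4 + n + p*n) + LMS type (4) + auth path (h*n)
  sig_bytes=$(( 4 + 4 + n + p * n + 4 + h * n ))
  echo "h=$h w=$w max_signatures=$max_sigs signature_bytes=$sig_bytes"
}

lms_info 10 8
```

For example, `lms_info 10 8` reports a budget of 1024 signatures of 1452 bytes each, so a vendor expecting many releases over a product’s lifetime would pick a taller tree rather than risk exhausting the key.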

What’s even better? You can use these PQC algorithms in hybrid mode too, i.e., signing with both classical (RSA/ECC) and quantum-safe keys, giving you the flexibility to transition smoothly without breaking compatibility.

Hybrid Signing Workflows: Supporting Classical and PQC Algorithms

Let’s be honest, most organizations aren’t going to ditch RSA or ECC overnight. And that’s totally okay. Transitioning to Post-Quantum Cryptography (PQC) is a journey, not a flip of a switch.

That’s why CodeSign Secure v3.02 is built to support hybrid signing workflows—letting you combine both classical (RSA/ECC) and quantum-safe (MLDSA/LMS) algorithms in the same signing process.

What does that mean for you? You can continue signing software with trusted classical keys for compatibility, while adding a layer of PQC protection for future-proofing. This dual-signature approach ensures your software remains verifiable both today and in a post-quantum world, no matter which type of verifier is checking the signature.
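One way to picture the verifier side of a dual-signature approach is a strict policy that accepts an artifact only when both signatures check out. The shell sketch below assumes the classical signature is an RSA or ECDSA signature over SHA-256 that OpenSSL can verify, and reuses the `pq-tool verify` invocation from the LMS walkthrough in this guide for the PQC half. The function names and file arguments are hypothetical; CodeSign Secure’s actual hybrid signature format and verification flow are not described here.

```shell
#!/bin/sh
# Hybrid verification policy sketch (hypothetical helper names):
# accept an artifact only if BOTH the classical and the PQC signature verify.

# Classical check: RSA/ECDSA signature over SHA-256, verified with OpenSSL.
# Args: <public key PEM> <signature file> <artifact>
verify_classical() {
  openssl dgst -sha256 -verify "$1" -signature "$2" "$3" >/dev/null 2>&1
}

# PQC check: LMS signature verified with the PQSDK tool from the LMS steps.
# Assumes pq-tool exits non-zero when verification fails.
# Args: <LMS public key> <signature file> <artifact>
verify_pqc() {
  ./pq-tool verify --pub-in "$1" --in "$3" --sig-in "$2" >/dev/null 2>&1
}

# Args: <artifact> <classical pubkey> <classical sig> <PQC pubkey> <PQC sig>
# Example: hybrid_verify app.bin rsa_pub.pem app.bin.rsa.sig lms.pub app.bin.lms.sig
hybrid_verify() {
  if ! verify_classical "$2" "$3" "$1"; then
    echo "REJECT: classical signature invalid for $1" >&2
    return 1
  fi
  if ! verify_pqc "$4" "$5" "$1"; then
    echo "REJECT: PQC signature invalid for $1" >&2
    return 1
  fi
  echo "ACCEPT: $1"
}
```

A verifier that does not yet understand PQC would run only the classical check, which is what lets hybrid signing coexist with legacy tooling during the transition.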

Why it matters:

- Smooth Transition: Adopt PQC at your own pace without breaking existing systems or user workflows.

- Increased Security: Benefit from the strengths of both classical and quantum-resistant signatures.

- Broad Compatibility: Maintain support for legacy tools and platforms while preparing for upcoming standards.

Whether you’re signing application binaries, firmware, or container images, CodeSign Secure handles hybrid signing cleanly and efficiently, integrated into your CI/CD pipelines and approval workflows like it’s always been there.

So, if you’re looking to prepare for tomorrow without disrupting today, hybrid signing is the bridge, and CodeSign Secure makes crossing it seamless.

Using MLDSA and LMS in CodeSign Secure v3.02: Step-by-Step Guide

MLDSA

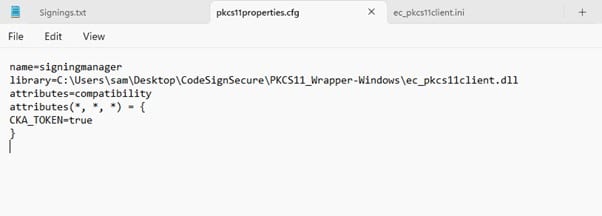

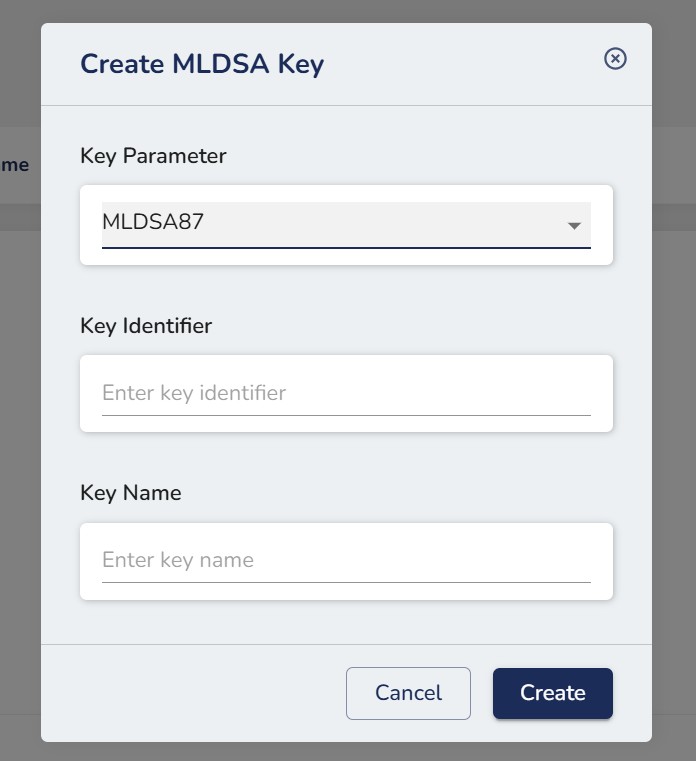

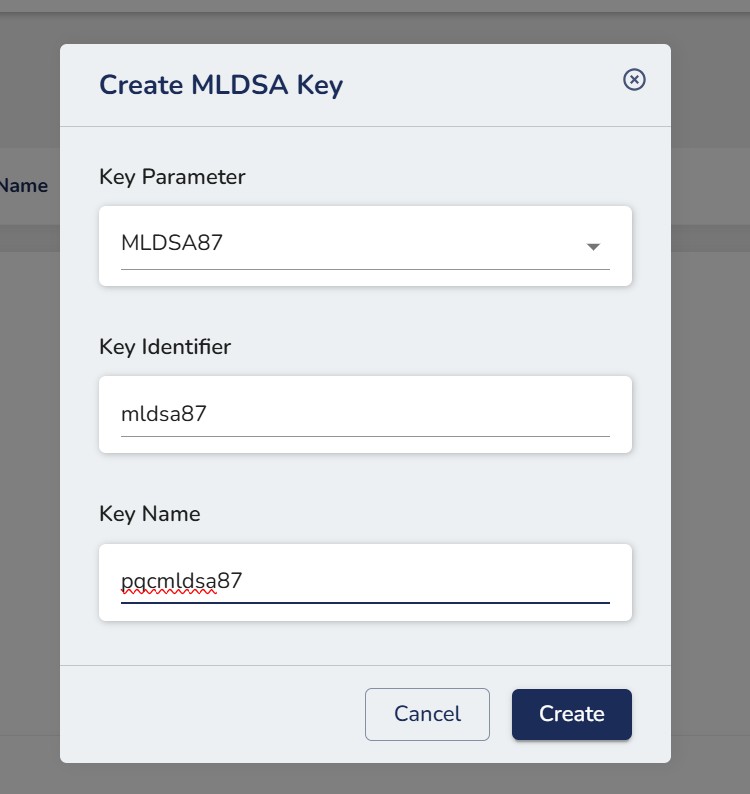

Step 1. Go to the Signing Request tab in CodeSign Secure and click Create MLDSA Key.

Step 2. Enter the required details in the form. The Key parameter field selects the MLDSA parameter set: MLDSA-44, MLDSA-65, or MLDSA-87.

Step 3. After entering the necessary details, click on the Create button.

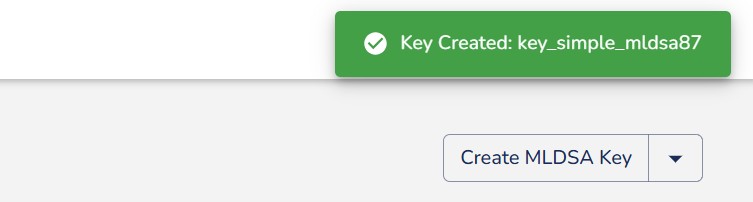

Step 4. Your MLDSA key will be created in the HSM.

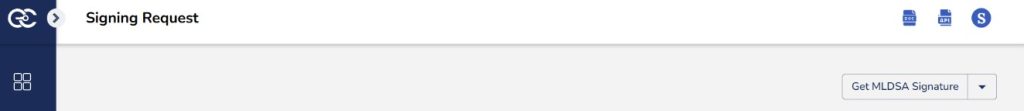

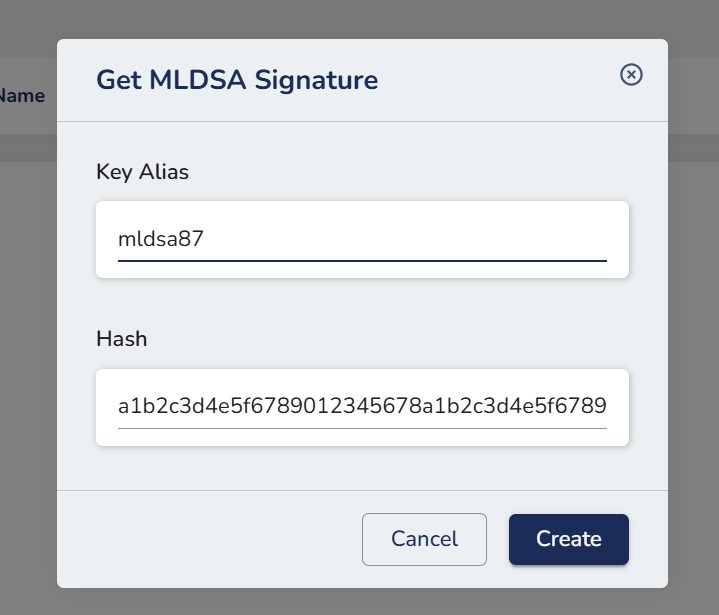

Step 5. Next, create a digital signature with this MLDSA private key, which remains in the HSM. Select Get MLDSA Signature.

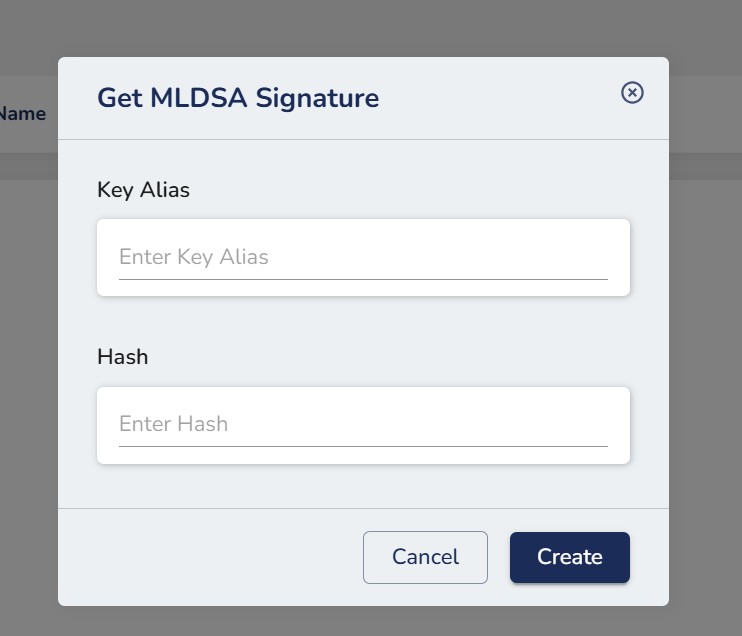

Step 6. You’ll be prompted for the necessary details:

- Key Alias is the key identifier you entered when creating the MLDSA key

- Enter a SHA-256 hash of the file you want to sign.
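If you need to produce that hash from the command line, a helper like the one below works on most Linux systems with GNU coreutils (on macOS, `shasum -a 256` is the usual equivalent). It assumes the form accepts the digest as a 64-character hexadecimal string; the guide does not state which encoding the field expects, so confirm this against your CodeSign Secure instance. `file_sha256` is a hypothetical helper name.

```shell
#!/bin/sh
# Print the hex-encoded SHA-256 digest of a file (hypothetical helper).
# Usage: file_sha256 <path>
file_sha256() {
  if [ ! -f "$1" ]; then
    echo "no such file: $1" >&2
    return 1
  fi
  # sha256sum prints "<digest>  <filename>"; keep only the digest field.
  sha256sum "$1" | cut -d ' ' -f 1
}
```

For example, `file_sha256 app.bin` prints the 64-character digest to paste into the form.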

Step 7. After entering the appropriate details, click on the Create button.

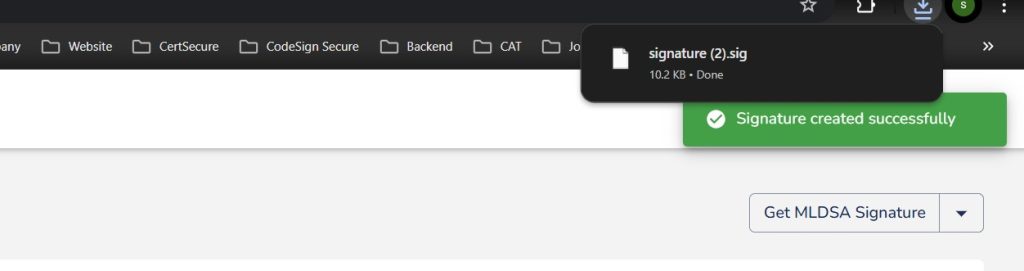

Step 8. A .sig file with the MLDSA Signature will be downloaded to your local system.

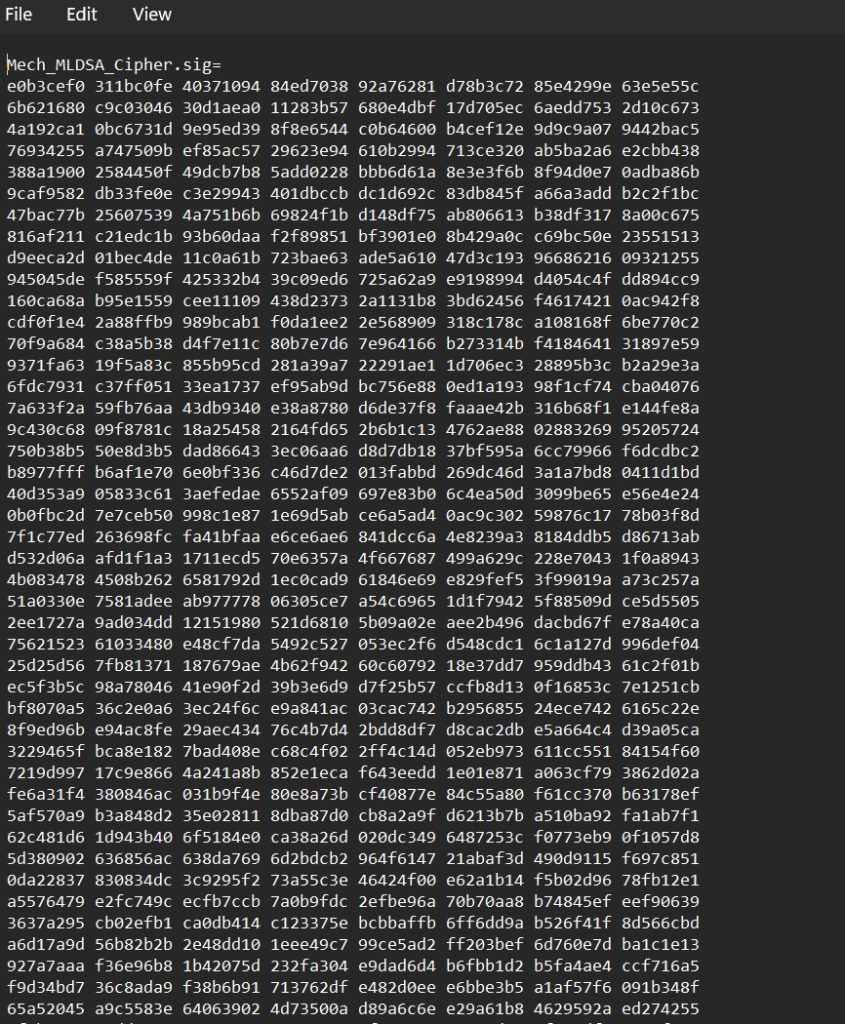

Step 9. The image above shows what the resulting MLDSA signature looks like.

LMS via PQSDK

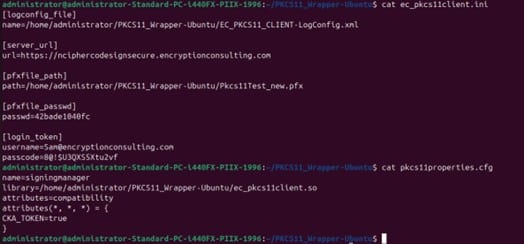

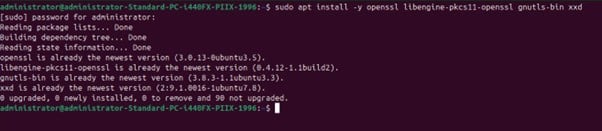

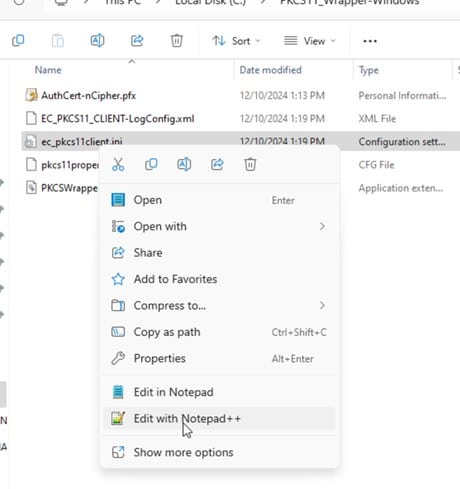

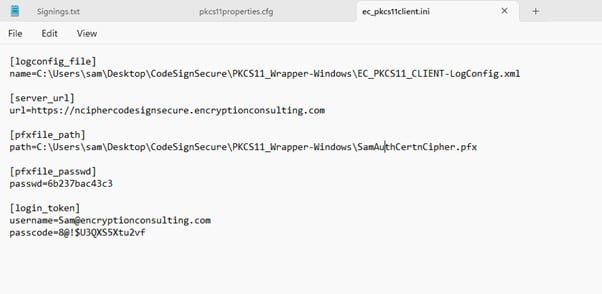

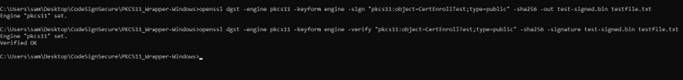

Step 1. Extract the PQSDK tarball for installation

```shell
tar -xvzf libpqsdk-*.tar.gz
```

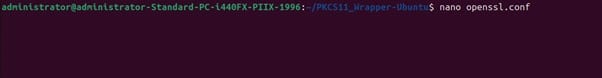

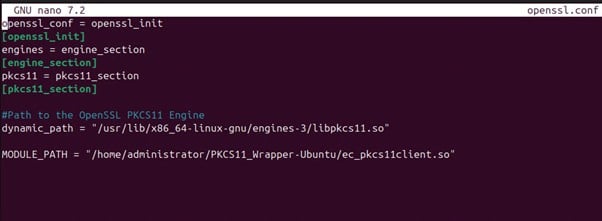

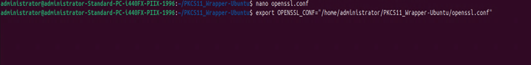

Step 2. Set up the environment variables required for using PQSDK tools.

```shell
source setup.sh
```

Step 3. Generate an LMS private key using PQSDK

```shell
./pq-tool genkey --algo LMS --key-out lms.key
```

Step 4. Extract the LMS public key from the private key

```shell
./pq-tool getpub --key-in lms.key --pub-out lms.pub
```

Step 5. Sign a file (here, data.txt) using the LMS private key and output a signature file

```shell
./pq-tool sign --key-in lms.key --in data.txt --sig-out data.txt.sig
```

Step 6. Verify the LMS Signature of the signed file using the corresponding public key

```shell
./pq-tool verify --pub-in lms.pub --in data.txt --sig-in data.txt.sig
```

Step 7. Create and Load CodeSafe SEEWorld:

```shell
./csg-compile --input sign_lms.c --output sign_lms.see
./csg-load --load sign_lms.see --name sign-lms-world
```

- csg-compile compiles the signing logic in sign_lms.c into a SEEWorld, a program that runs in the HSM’s secure enclave

- csg-load loads the SEEWorld into the HSM under the given name

Step 8. Execute LMS signing of a sample file within the loaded CodeSafe SEEWorld environment.

```shell
./csg-exec --world sign-lms-world --sign data.txt --sig-out data.txt.sig
```

Step 9. Verify the signature created inside SEEWorld using the public key

```shell
./pq-tool verify --pub-in lms.pub --in data.txt --sig-in data.txt.sig
```

Use Cases of PQC-Based Code Signing

- Firmware Signing: Firmware updates are a critical target for attackers, especially in IoT, automotive, and industrial systems. Post-quantum code signing ensures that even if quantum computers become a reality tomorrow, firmware updates pushed today stay secure and verifiable. Using PQC signature algorithms like LMS or ML-DSA helps future-proof these devices against quantum threats, all while maintaining trust in device integrity.

- Enterprise Applications: Enterprise software often includes confidential logic and connects with sensitive systems. By using PQC-based code signing, organizations can safeguard their internal applications from tampering, even in a post-quantum world. It’s especially important for apps that handle identity, encryption, or compliance-sensitive operations, where traditional signatures might eventually become weak.

- Long-Term Support (LTS) Software: Software that’s going to be maintained for a decade or more, like OS kernels, embedded device stacks, or regulated financial systems, needs to be protected for the long haul. PQC-based code signing ensures that those signatures will still be considered secure well into the future, even as cryptographic standards evolve. It’s a proactive move for any vendor offering long-term support.

Compliance and Regulatory Alignment with PQC Readiness

Staying ahead of compliance requirements is more than just ticking boxes; it’s about being proactive and future-ready. As regulatory bodies and standards organizations like NIST, ETSI, and NSA start recommending or mandating post-quantum cryptography (PQC), companies that adopt PQC early are putting themselves in a strong position.

For example, NIST published its first finalized PQC standards (FIPS 203, 204, and 205) in August 2024, and governments around the world are pushing agencies and vendors to start migrating. If your code signing process already supports signature algorithms like LMS or ML-DSA (formerly CRYSTALS-Dilithium), you’re not just checking a security box; you’re aligning with emerging compliance frameworks that will soon be expected across industries.

Being PQC-ready also helps in audits. Whether it’s for SOC 2, ISO 27001, or sector-specific frameworks (like automotive’s UNECE WP.29 or healthcare’s HIPAA), showing that you’re using quantum-resistant signatures signals forward-thinking risk management and long-term cryptographic hygiene.

In short, PQC isn’t just a technical upgrade; it’s fast becoming a compliance expectation. Getting ahead now avoids the scramble later.

Conclusion

The shift toward post-quantum cryptography isn’t a distant concern; it’s already happening. Whether you’re signing firmware, securing enterprise applications, or delivering long-term support software, adopting PQC ensures your digital signatures stay secure in a post-quantum world.

CodeSign Secure is built with this future in mind. With native support for PQC signature algorithms like LMS and ML-DSA, integration with trusted HSMs, and seamless compatibility with your existing CI/CD pipelines, it delivers a robust, compliant, and forward-looking code signing solution.

By choosing our CodeSign Secure, you’re not just preparing for tomorrow’s threats; you’re leading with confidence today.