Overview

Encryption Consulting partnered with one of the largest retail farm and ranch store chains in the United States, which caters to recreational farmers, ranchers, and rural homeowners. The chain operates more than 1,800 stores across 40+ states. It offers a wide range of products, including livestock feed, pet supplies, tools, hardware, clothing, and outdoor living items, and takes pride in providing expert advice through knowledgeable staff and supporting rural communities.

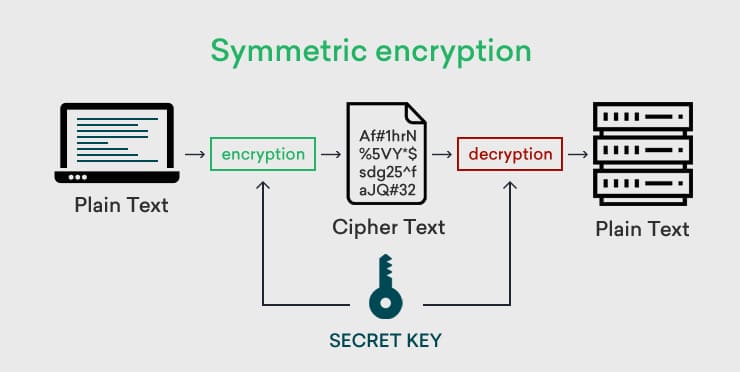

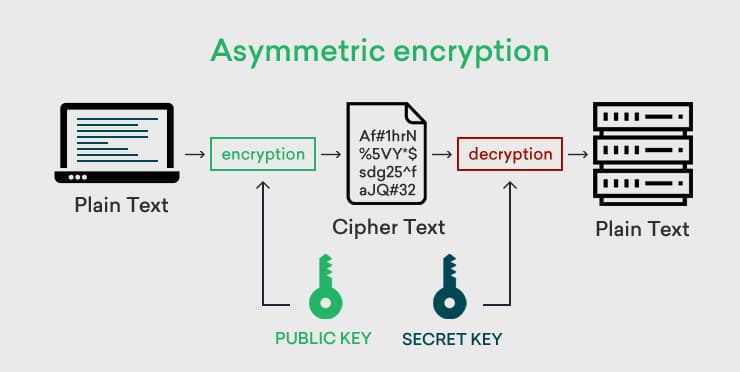

Recognizing the importance of a strong security framework, the retail chain reached out to us for an assessment of its Public Key Infrastructure (PKI) environment. They aimed to ensure that their existing PKI could effectively support their operations and safeguard sensitive information as they continue to grow. The primary objective of this initiative was to evaluate the current state of their PKI environment, identify any existing gaps, and define the future state PKI requirements necessary for the organization’s strategic growth and enhanced security posture.

As part of this engagement, Encryption Consulting delivered a prioritized strategy and implementation roadmap designed to strengthen their overall PKI security and operational efficiency. Our assessment of the PKI components revealed several gaps within the organization’s PKI environment and highlighted the areas needing improvement.

Challenges

As we assessed the PKI environment of the organization, we encountered various challenges that posed risks. Their managed PKI was hosted on a legacy Windows Server. The defined cryptographic standards lacked a Certificate Policy (CP) or Certification Practice Statement (CPS), which posed a significant challenge because these documents are critical for outlining the rules and guidelines governing the issuance, management, and use of certificates within a PKI.

Cryptographic policies, such as Cryptographic Controls and Key Management policy, were not reviewed and updated at regular intervals. We also observed that the organization lacked a formal PKI governance program for both private and public CAs, resulting in a lack of accountability and oversight, which led to inconsistencies and potential security vulnerabilities across the organization.

The assessment revealed significant deficiencies in documentation across the PKI environment. Core documentation for architecture, installation, and operations was either missing or incomplete. There was no defined certificate subscriber agreement outlining subscriber responsibilities for managing assigned keys or certificates.

Standard documentation, such as disaster recovery plans, a RACI (Responsible, Accountable, Consulted, Informed) Matrix, a Target Operational Model (TOM), and an Incident Response Management Plan, was also lacking. The key management processes in the Managed PKI setup were not fully aligned with the organization’s internal security policies.

Furthermore, the procedure for publishing the Certificate Revocation List (CRL) was not formally documented. There was also no clear documentation mapping issued certificates back to their respective certificate signing requests (CSRs), which made tracking and validation difficult. We found that a documented process or defined policy for the usage of the Public CA was missing, making it challenging to map certificates or track certificate usage consistently across their environment.

The Public CA also lacked formal documentation outlining certificate management procedures, including guidelines for certificate validity periods, cryptographic algorithms, key sizes, and the certificate issuance process. The absence of formal Root CA key ceremony documentation meant there was no valid proof of the private key generation procedure.

Additionally, PKI incident response management, disaster recovery plans, and related procedures were not fully developed, documented, or executed. There was no formal troubleshooting guide to address common issues, patch management, or testing mechanisms for the PKI environment, and formal procedures to verify the key pair a user was using were also absent.

The Issuing CAs relied on LDAP as the main method for publishing revocation data, which can be problematic for Linux and macOS systems that don’t natively support LDAP-based CRL retrieval. This limited cross-platform certificate validation. Storing critical certificate data and logs on the system drive posed a risk of performance issues or potential service disruptions due to storage limitations. There were inconsistencies in the validity periods of CRL Distribution Point (CDP) locations across the issuing CAs, and the time intervals between CRL updates were not standardized, which could affect the reliability of certificate status information.
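
To illustrate the LDAP dependency described above, the following is a minimal sketch of how CDP URLs taken from issued certificates could be grouped by scheme to flag certificates that offer no HTTP-reachable CRL. The function names and example URLs are hypothetical and are not part of the assessment tooling; extracting the URLs from real certificates is assumed to happen elsewhere.

```python
from urllib.parse import urlparse


def classify_cdp_urls(urls):
    """Group CRL Distribution Point URLs by transport scheme."""
    groups = {"http": [], "ldap": [], "other": []}
    for url in urls:
        scheme = urlparse(url).scheme.lower()
        if scheme in ("http", "https"):
            groups["http"].append(url)
        elif scheme in ("ldap", "ldaps"):
            groups["ldap"].append(url)
        else:
            groups["other"].append(url)
    return groups


def ldap_only(urls):
    """Return True when a certificate lists LDAP CDPs but no HTTP CDP."""
    groups = classify_cdp_urls(urls)
    return bool(groups["ldap"]) and not groups["http"]


# Hypothetical CDP entries for a single certificate.
example_cdps = [
    "ldap:///CN=Example-CA,CN=CDP,DC=example,DC=com",
    "http://pki.example.com/crl/example-ca.crl",
]
needs_attention = ldap_only(example_cdps)
```

A certificate for which `ldap_only` returns `True` is a candidate for the cross-platform validation problem noted above, since clients without LDAP support would have no alternative CRL location.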

Regarding access control of managed PKI, we noted that the principle of least privilege was not implemented, resulting in weak RBAC and inadequate separation of duties. This increased the attack surface, enabling potential misuse, such as the issuance of fraudulent certificates, creation of rogue subordinate CAs, and unauthorized access to private keys. Proper roles and responsibilities were not defined for managing certificates issued by the managed PKI.

The organization also faced challenges with cross-border data transfers, raising compliance concerns regarding data sovereignty and regional regulations. For the Public CA, there were no specific roles and responsibilities assigned to manage the public CA-signed certificates, resulting in accountability issues.

The Certificate Signing Requests (CSRs) lacked a formal approval process and proper oversight, increasing the risk of weak cryptographic configurations. Additionally, there were no checks to verify the authorization of CSR submissions. The absence of a well-defined workflow for validating CSRs and a uniform process for submission and monitoring further complicated the management of certificate requests. We observed that there were no proper records of requests associated with certificate issuance, renewal, re-key requests, and revocation requests, nor were there proper records of the approval or rejection of certificate requests.

The assessment identified severe gaps in certificate tracking and monitoring across the PKI environment. There was no system in place to track private keys or certificates, which allowed for unauthorized access, nor was there any monitoring of wildcard or self-signed certificate usage. The organization lacked a certificate discovery mechanism and had inadequate records of issuance, renewal, and revocation requests. Essential processes were entirely missing, including revocation procedures for lost or stolen devices, mapping certificate templates to their creators and intended uses, and a centralized inventory of cryptographic keys.

There was no oversight of compromised certificates, which enabled the unauthorized issuance, modification, or retention of active certificates. Some decommissioned systems were still left exposed because the credentials tied to them hadn’t been revoked. At the same time, there was a lack of clear communication between teams regarding essential cryptographic standards, such as required key algorithms and minimum key sizes, leading to inconsistencies in certificate issuance. The organization also hadn’t established service-level agreements (SLAs) for handling compromised certificates, which made it harder to respond effectively to security incidents. Key recovery processes were missing, and there were no proper records of renewal requests.

Private keys weren’t being destroyed securely, and there was no formal process to revoke certificates that were no longer in use. The entire certificate lifecycle, from requesting and renewing to revoking and approving, was handled without automation or well-defined procedures, which increased the risk of oversight. There was no consistent approach to revoking certificates, leaving the environment vulnerable to security risks. The key generation process lacked safeguards as access to key files wasn’t restricted to the owner, increasing the risk of unauthorized access.

No standard checks were in place to validate important certificate parameters, like key length or key usage, before certificates were issued. Instead of using a dedicated Certificate Lifecycle Management tool, the organization relied on email reminders, which frequently led to delays, errors, and a lack of visibility across the certificate ecosystem.
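
As an illustration of the kind of pre-issuance check that was missing, here is a minimal sketch that validates a request's key length and key usage against a policy. The `CertificateRequest` structure, the template names, and the allowed key usages are hypothetical; the 2048-bit minimum is an assumption based on the key-length target mentioned later in this case study.

```python
from dataclasses import dataclass, field

MIN_RSA_BITS = 2048  # assumed minimum key length

# Hypothetical mapping of template name to the key usages it may request.
ALLOWED_KEY_USAGE = {
    "WebServer": {"digitalSignature", "keyEncipherment"},
    "CodeSigning": {"digitalSignature"},
}


@dataclass
class CertificateRequest:
    subject: str
    key_algorithm: str
    key_bits: int
    key_usage: set = field(default_factory=set)


def validate_request(req, template):
    """Return a list of policy violations; an empty list means the request passes."""
    problems = []
    if req.key_algorithm == "RSA" and req.key_bits < MIN_RSA_BITS:
        problems.append(f"RSA key is {req.key_bits} bits; minimum is {MIN_RSA_BITS}")
    allowed = ALLOWED_KEY_USAGE.get(template)
    if allowed is None:
        problems.append(f"unknown template: {template}")
    else:
        extra = req.key_usage - allowed
        if extra:
            problems.append(f"key usage not permitted for {template}: {sorted(extra)}")
    return problems
```

In practice, a check like this would run before a request reaches the CA, so that a 1024-bit key or an unexpected key usage is rejected with a recorded reason rather than issued silently.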

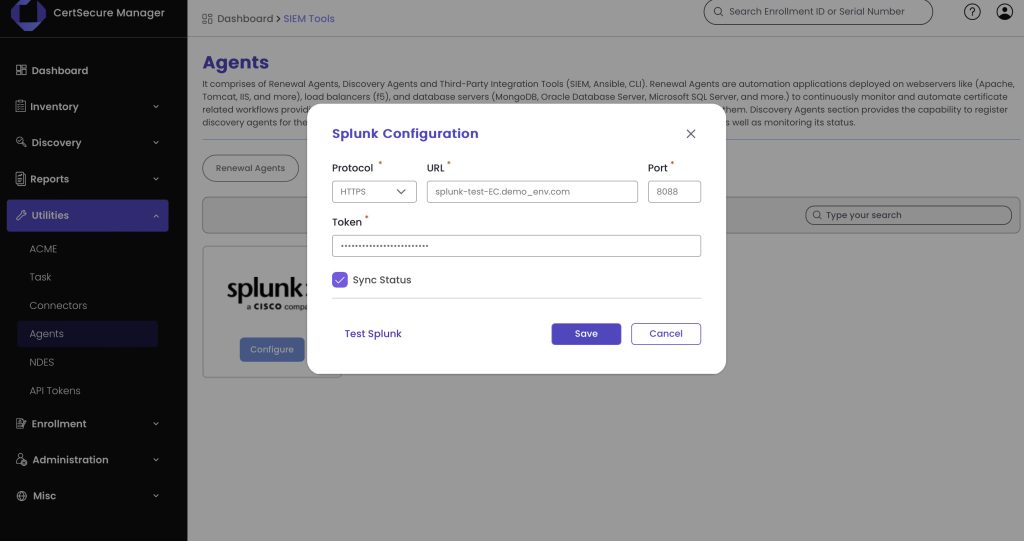

It was observed that in the existing PKI architecture, the CA path length constraint was not defined, which could allow a very long certificate chain and increase complexity. A long chain with many intermediate CAs could also introduce vulnerabilities and widen the attack surface, because gaining access to a lower-level issuing CA in such a chain could potentially be used to elevate privileges. We observed that private keys were not stored in HSMs. We also observed that the Root CA had an exceptionally long validity period, exceeding the recommended best practices. The CA Policy file was not used on the Managed Root CA and Issuing CA. There was no procedure for sending logs to a SIEM.
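
To show what a path length constraint limits, the sketch below checks a chain against each CA's constraint, where the constraint is the maximum number of CA certificates allowed to follow that CA in the path. The chain representation is hypothetical and the check is simplified: it does not treat self-issued certificates specially and performs no signature or validity checks.

```python
def check_path_length(chain):
    """Check path length constraints on a chain ordered from root to leaf.

    Each entry is (name, is_ca, path_len); path_len is None when the
    certificate carries no constraint. Returns a list of
    (name, allowed, actual) tuples for every violated constraint.
    """
    violations = []
    for i, (name, is_ca, path_len) in enumerate(chain):
        if not is_ca or path_len is None:
            continue
        # Count the CA certificates that follow this one; the leaf is not counted.
        following_cas = sum(1 for _, ca, _ in chain[i + 1:] if ca)
        if following_cas > path_len:
            violations.append((name, path_len, following_cas))
    return violations


# Hypothetical three-tier chain in which the root permits only one CA below it.
too_deep = [
    ("Root CA", True, 1),
    ("Policy CA", True, 0),
    ("Issuing CA", True, 0),
    ("server.example.com", False, None),
]
violations = check_path_length(too_deep)
```

When no constraint is set anywhere (every `path_len` is `None`), the function reports nothing regardless of depth, which mirrors the finding above: an unconstrained hierarchy places no limit on how many subordinate CAs can be chained.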

There was no defined PKI upgrade process, leaving the architecture vulnerable to security risks associated with outdated algorithms and key lengths. The CA database cleanup hadn’t been performed in a long time, the procedure for regular CA database backup was missing, and an incident response and data recovery plan were not developed for PKI.

The signing algorithm for Root CA was not aligned with the industry standards. The Supply Chain teams imported self-signed certificates into the MDMS (Mobile Device Management Software) without validating the certificate chain, resulting in a security vulnerability. This occurred because the certificates were assigned directly, bypassing trust chain validation and exposing the environment to the risk of unauthorized certificate trust.

We noticed that protocols and procedures for managing certificate templates were missing, resulting in inconsistent configurations, uncontrolled changes, and auditing challenges. More than 60% of the keystores generated by the Managed PKI, especially via Issuing CAs, had a 1024-bit key size, including those for code signing and key exchange templates. This posed a significant cryptographic risk, including exposure to brute-force attacks and non-compliance with industry best practices. The assessment revealed significant gaps in the organization’s certificate management framework, exposing critical security and operational risks. High-risk certificates were issued without proper safeguards, and some allowed users to encrypt data individually without key recovery mechanisms, risking permanent inaccessibility upon employee departures.

Certificate templates that were no longer in use were still published, posing a significant cryptographic risk. For some certificate templates, we observed that private keys were inadequately protected: they were stored without HSM protection and lacked backups for disaster recovery. Weak cryptographic practices also persisted, including the use of outdated key lengths and the absence of validation checks during certificate issuance. Certain certificate types granted excessive permissions, enabling unauthorized issuance or modification without managerial approval. Extended usage capabilities were permitted without restrictions, creating opportunities for attackers to forge credentials or escalate privileges.

There was a major overlap in templates among the CAs. While this indicated redundancy and failover capability, it could also introduce complexity in template management and governance. These deficiencies reflected a failure in both technical controls and governance, leaving the PKI environment susceptible to exploitation, data breaches, and operational disruptions.

For PKI operations, we noticed that there was no two-person integrity (TPI) check mechanism in place for modifications made to the managed PKI components, and system configurations weren’t being regularly reviewed. Handovers lacked formal knowledge transfer, often relying on informal communication. A Business Impact Analysis (BIA) wasn’t performed for the PKI components. Testing was done directly in production, as there was no separate environment. Changes to the PKI environment weren’t governed by a formal process, because no formal configuration review existed for the Managed PKI environment, which increased the risk of non-functional paths going unnoticed.

There was also no centralized monitoring of private keys or their usage. Active monitoring to detect issues with OCSP responses, LDAP CDPs, PKI functionality, or Active Directory containers was not in place. The resources allocated to managing PKI and handling certificate-related issues were limited, which could result in delays in responding to incidents, performance monitoring, and addressing security issues. There was no dedicated role defined to oversee PKI and its components. Vulnerability scanning was not performed to identify potential weaknesses and misconfigurations. Only a small number of staff had the necessary skills related to PKI.

For risk and compliance monitoring, it was observed that there was no certificate risk and compliance monitoring program. There were no procedures or tools to identify compliance issues and risks. Recovery Time Objective (RTO) was not defined. There was no formal documentation of risk reports and assessment processes.

Solution

To assess the organization’s existing PKI environment, Encryption Consulting began by reviewing cryptographic policies and documentation they shared. We conducted workshops with key stakeholders from various business units to evaluate current risks and usage scenarios. Technical evidence was collected through stakeholder discussions and execution of a PKI assessment tool, focusing on Certificate Authority (CA) properties, registry settings, and configurations. We also reviewed the process the company follows to obtain public CA-signed certificates. A Capability Maturity Model Integration (CMMI) framework was employed to evaluate the maturity of PKI-related practices throughout the organization.

While the company has established foundational PKI components, we identified several process gaps. Strategic and tactical changes were recommended for enhancing security, sustainability, and consistency. Immediate actions included addressing PKI configuration issues, implementing regular and secure backups of the CA databases, and enhancing disaster recovery planning. Long-term programs included documenting the PKI architecture in a detailed form, adopting strong cryptographic standards, and implementing HSM-based protection of the platform.

In addition, the implementation of certificate revocation mechanisms, clear-cut operational procedures, and ongoing training programs was suggested to provide operational resilience along with long-term PKI governance.

To modernize and future-proof the company’s PKI infrastructure, we suggested creating an explicit migration plan to transition the PKI infrastructure to Windows Server 2022 or the next supported version. The new platform offers better security features, performance enhancements, and ongoing support from Microsoft, along with access to the latest security updates and patches that address known vulnerabilities.

We also suggested documenting the entire PKI architecture in detail, including high-level diagrams, trust models, component details, cryptographic settings, certificate lifecycle processes, and disaster recovery plans. Implementing HSMs (FIPS 140-2/3 Level 3) for key generation and establishing a dedicated test environment to trial changes before applying them in production were also recommended.

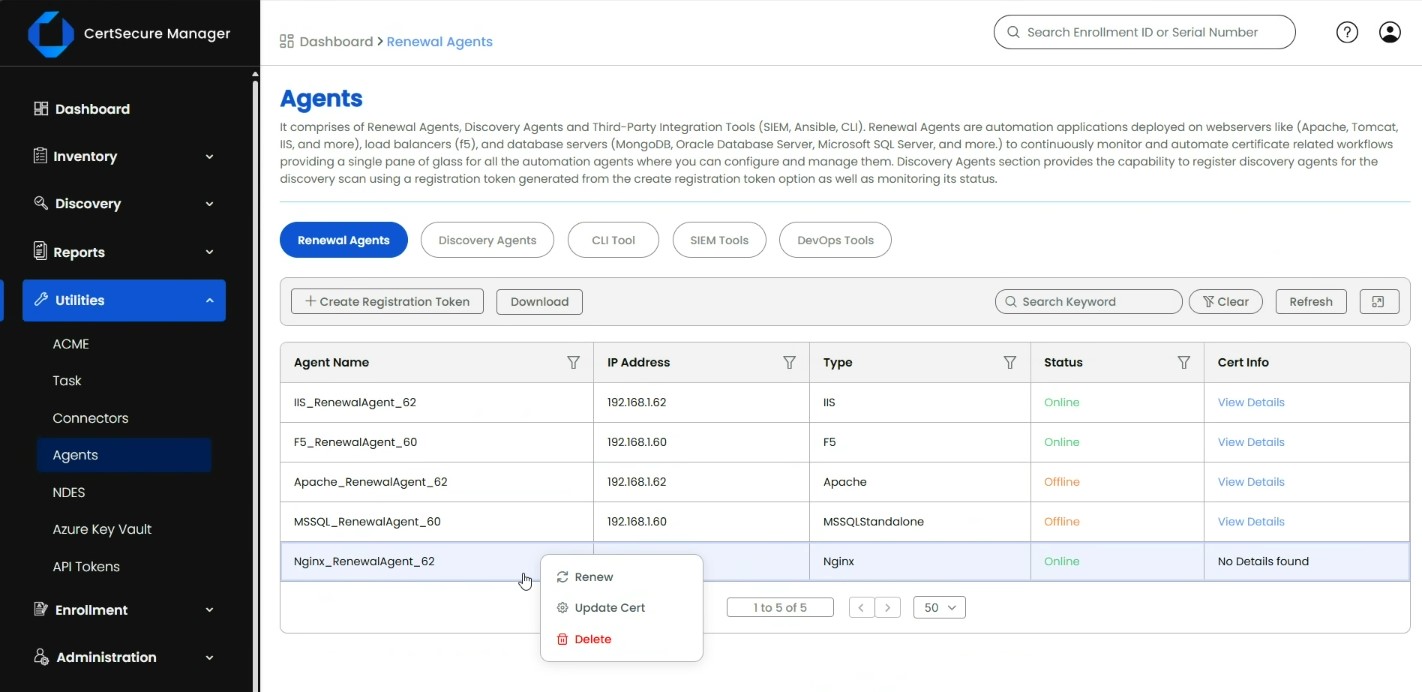

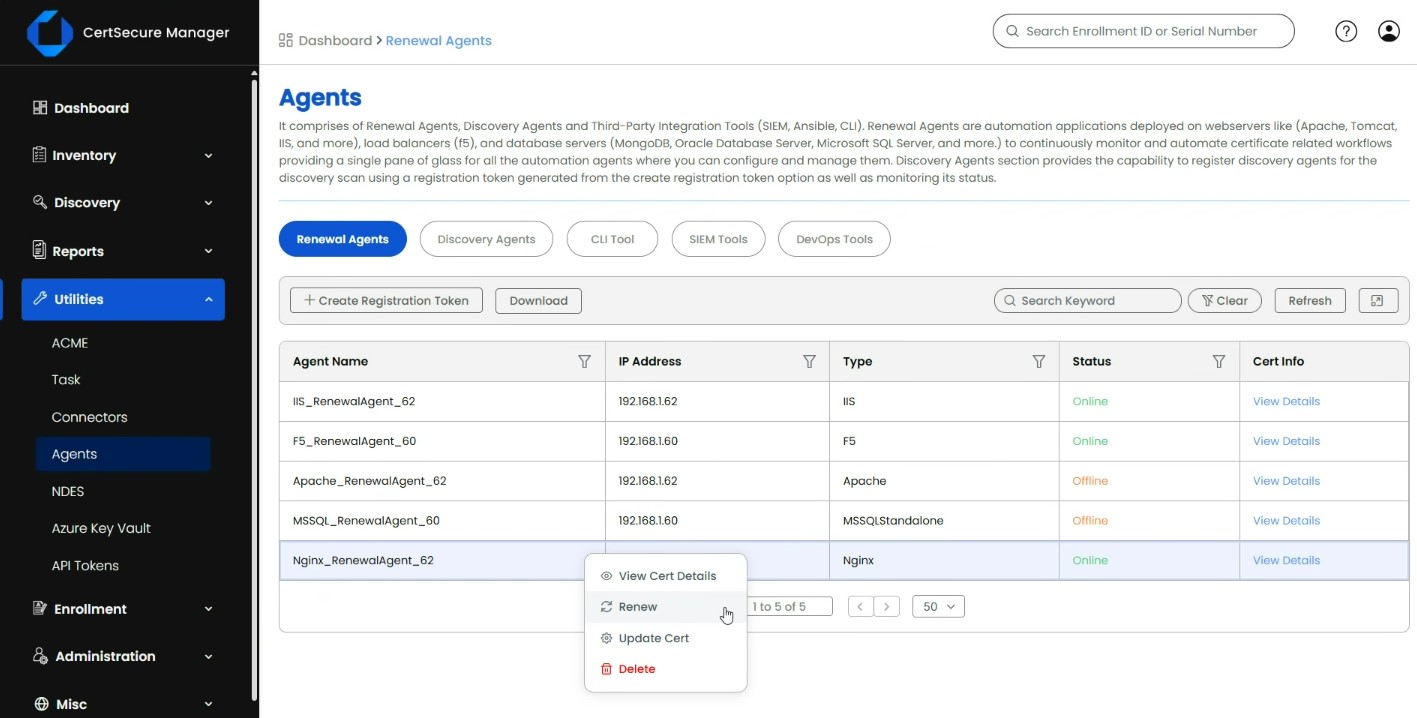

To enhance PKI operations, we recommended implementing an automated certificate renewal process for both Managed PKI and Public CAs to minimize the risk of missed renewals. Establishing a scheduled PKI health monitoring and notification service to alert if the PKI becomes non-functional at any time was also advised. We suggested enabling auditing on CAs to ensure accountability and support troubleshooting efforts. We recommended establishing a quarterly configuration review process against a PKI operations checklist to verify that all PKI system components are functioning properly.
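
As a rough illustration of the renewal monitoring described above, the following sketch lists certificates that fall inside a renewal window. The inventory format (a mapping of certificate name to expiry time) and the 30-day default window are assumptions made for the example, not values from the engagement.

```python
from datetime import datetime, timedelta, timezone


def due_for_renewal(inventory, now, window_days=30):
    """Return (name, days_left) pairs for certificates expiring within the window.

    Already expired certificates are included with a negative days_left.
    Results are sorted so the most urgent entries come first.
    """
    cutoff = now + timedelta(days=window_days)
    due = [
        (name, (not_after - now).days)
        for name, not_after in inventory.items()
        if not_after <= cutoff
    ]
    return sorted(due, key=lambda item: item[1])


# Hypothetical inventory keyed by certificate name.
now = datetime(2025, 1, 1, tzinfo=timezone.utc)
inventory = {
    "vpn.example.com": now + timedelta(days=10),
    "intranet.example.com": now + timedelta(days=90),
    "legacy.example.com": now - timedelta(days=2),
}
renewal_queue = due_for_renewal(inventory, now)
```

A scheduled job could feed the resulting queue into an automated renewal workflow or a notification channel, replacing the email reminders the organization previously relied on.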

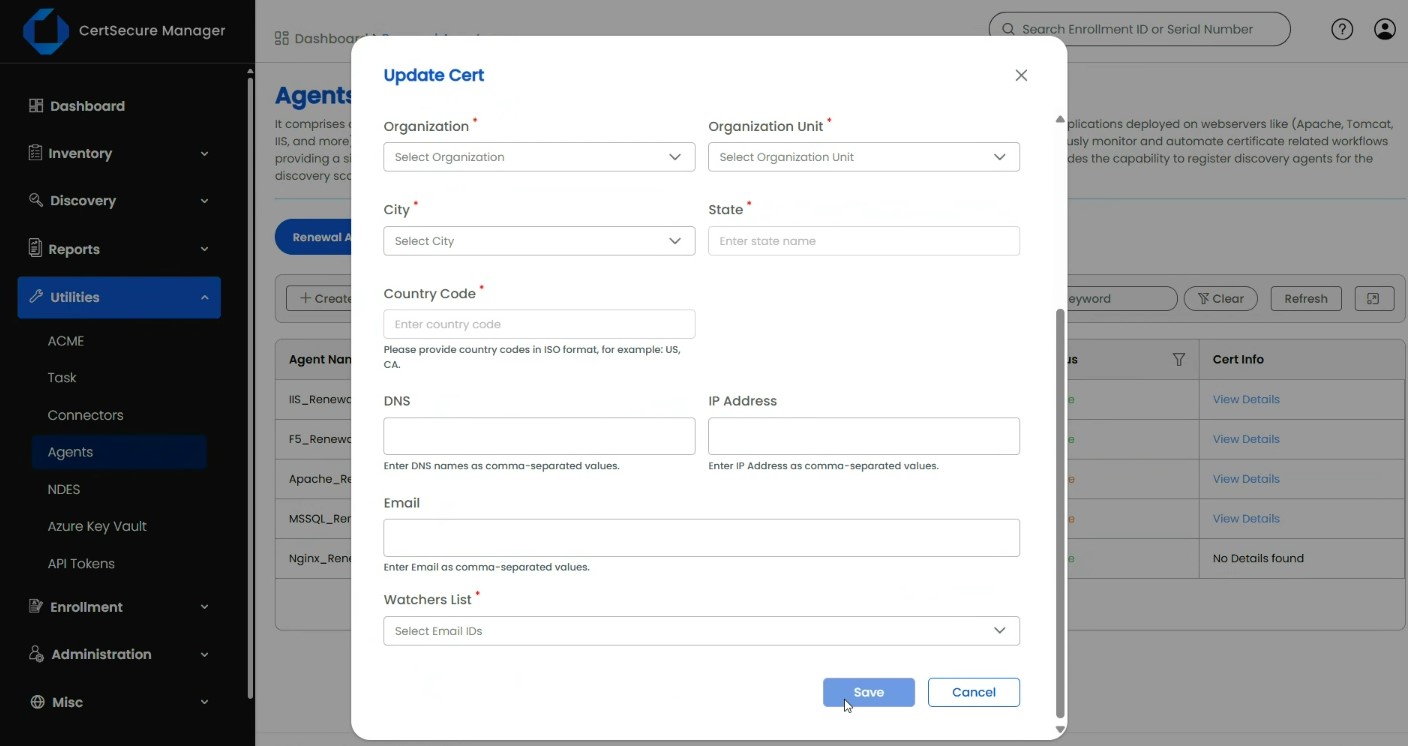

For effective certificate management, we advised establishing a well-defined process for managing certificate templates. This involves setting precise guidelines for their creation, setting up approval mechanisms, monitoring changes, and centralizing the storage of templates. We recommended updating certificate templates that lack the Security Identifier (SID) to satisfy Microsoft’s forthcoming Strong Certificate Mapping requirements.

Additionally, we suggested cleaning up the CA database by removing inactive certificate templates and failed, expired, and revoked certificates. Furthermore, an automated renewal system for SSL/TLS certificates, as well as a documented exception policy for those that require manual renewal, was deemed necessary. We strongly advised adopting a Certificate Lifecycle Management tool for improved certificate discovery and automated certificate lifecycle processes.

We suggested establishing a centralized inventory or registry for all certificate templates, clearly identifying the template name, owner/creator, intended use, associated policies, and permissions. A mandatory, formal, and standardized approval process for all Certificate Signing Requests (CSRs) was recommended, including defined criteria for validating key parameters.

Involving a manager or designated authority to review CSRs to ensure that the attributes align with the intended use cases and accurately reflect the requester’s identity was also suggested. We also recommended limiting broad enrollment and auto-enrollment permissions by identifying specific users who require particular certificates.

We advised developing an information security policy document that addresses specific PKI functions. This document will serve as a comprehensive framework for managing information security across the organization, containing objectives, principles, and requirements related to PKI. Conducting annual reviews and updating guidance policy documents was also suggested. Furthermore, we advised creating a Certificate Policy (CP), a Certification Practice Statement (CPS), and a subscriber agreement that clearly outlines the roles and responsibilities of key owners to enhance governance and accountability.

We recommended establishing disaster recovery strategies and documenting requirements addressed by a business impact assessment. We suggested conducting regular backups of the CA database every three to six months, with special care taken to store the Root CA backup and its private key offline in secure storage like an HSM. Implementing Two-Person Integrity (TPI) to enforce dual authorization control for all configuration and operational changes for CAs was also advised.
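
The dual authorization idea behind TPI can be sketched as a simple rule: a change proceeds only when an authorized requester has approval from at least one other authorized person. The function and the names below are hypothetical and stand in for whatever change-management system enforces the control.

```python
def tpi_satisfied(requester, approvers, authorized):
    """Return True when the requester is authorized and at least one
    different authorized person has approved the change."""
    independent = {a for a in approvers if a != requester and a in authorized}
    return requester in authorized and len(independent) >= 1


# Hypothetical group of CA administrators.
ca_admins = {"alice", "bob"}
approved = tpi_satisfied("alice", ["bob"], ca_admins)
self_approved = tpi_satisfied("alice", ["alice"], ca_admins)
```

The key design point is that self-approval and approval by someone outside the authorized group both fail, so no single administrator can change a CA configuration alone.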

To enhance security, we have recommended implementing strict access controls based on the principle of least privilege, thereby ensuring a clear segregation of roles and duties. Regularly reviewing access logs for anomalies and creating dedicated administrative groups, such as CA administrators, was suggested. Establishing comprehensive cryptographic control and standard documentation for certificate management procedures will help determine which Public CA should be used for specific use cases or domains.

We suggested developing comprehensive risk and compliance programs to address possible risks. Defining the Recovery Time Objective (RTO) for all PKI-related services and components, and categorizing PKI services based on their criticality to business operations, was recommended. Establishing a formal risk reporting process for the PKI environment, including periodic evaluations to identify, track, and remediate issues related to PKI, was also suggested.

We have advised implementing a regular vulnerability scanning process that targets issued certificates and establishing a formal audit mechanism to capture all relevant information about wildcard certificates. Developing a comprehensive reporting mechanism to provide an overview of wildcard certificates will enhance monitoring capabilities, enabling more effective management and control.
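
A wildcard certificate report of the kind suggested above could start from something as simple as the sketch below, which filters an inventory for DNS names that begin with `*.`. The record keys (`serial`, `owner`, `dns_names`) are hypothetical and would depend on the discovery tool or inventory in use.

```python
def wildcard_report(certs):
    """Return one report row per certificate that contains a wildcard DNS name."""
    report = []
    for cert in certs:
        wildcard_names = [n for n in cert["dns_names"] if n.startswith("*.")]
        if wildcard_names:
            report.append({
                "serial": cert["serial"],
                # Flag certificates with no recorded owner for follow-up.
                "owner": cert.get("owner", "unassigned"),
                "wildcard_names": wildcard_names,
            })
    return report


# Hypothetical inventory records.
inventory = [
    {"serial": "01", "owner": "web-team", "dns_names": ["*.example.com", "example.com"]},
    {"serial": "02", "owner": "hr-apps", "dns_names": ["hr.example.com"]},
    {"serial": "03", "dns_names": ["*.internal.example.com"]},
]
rows = wildcard_report(inventory)
```

Reporting the owner alongside each wildcard name ties the report back to the accountability gaps identified in the assessment, since unowned wildcard certificates are the hardest to revoke or rotate safely.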

We provided on-demand PKI and HSM training to stakeholders, enabling them to build stronger expertise and enhance their understanding of critical security infrastructure.

Impact

The remediation roadmap empowered the client to tackle critical challenges and establish a secure PKI environment. Implementing these recommendations significantly enhanced the organization’s PKI security posture, operational efficiency, and governance framework. Migrating to a modern infrastructure, such as Windows Server 2022 or the latest version, not only provided improved security features and performance enhancements but also ensured ongoing support from Microsoft, effectively addressing known vulnerabilities. Automating the certificate lifecycle management process minimized the risk of missed renewals and service disruptions, reducing the potential for human error and enhancing overall reliability.

Strengthening cryptographic standards by migrating to key lengths of 2048 bits or higher mitigated risks associated with weaker keys, hence strengthening the integrity of the PKI environment. Enforcing strict access controls based on the principle of least privilege limited unauthorized access and reduced the attack surface, significantly lowering the risk of key compromise and unauthorized certificate issuance.

Establishing well-defined policies, documentation, and audit mechanisms improved accountability and monitoring capabilities. This ensured that all stakeholders understood their roles and responsibilities in managing the PKI environment. Developing a centralized inventory for certificate templates and a formal approval process for Certificate Signing Requests (CSRs) streamlined operations and enhanced governance, fostering a culture of compliance and security awareness.

Furthermore, implementing a strong disaster recovery plan and regular backup procedures ensured business continuity in the event of a security incident or system failure. By fostering collaboration with the internal team and other stakeholders, the company was better positioned to evaluate data sovereignty and compliance implications, particularly when engaging with external service providers.

Overall, these measures minimized vulnerabilities, supported regulatory compliance, and ensured a more resilient and scalable PKI environment that can adapt to future challenges and technological advancements.

Conclusion

We provided a strategy and a comprehensive remediation roadmap to address the identified weaknesses and risks within the company’s PKI infrastructure. By modernizing the environment and migrating to a more secure platform, the organization will enhance its ability to protect sensitive data and maintain trust in its digital transactions. The implementation of automated processes for certificate lifecycle management will streamline operations, reduce the likelihood of human error, and ensure timely renewals, thereby minimizing service disruptions.

Moreover, the emphasis on strengthening cryptographic standards and enforcing strict access controls will significantly mitigate risks associated with unauthorized access and key compromise. Establishing clear policies, documentation, and audit mechanisms will foster a culture of accountability and transparency, ensuring that all personnel understand their roles in maintaining the security of the PKI environment.

The development of a comprehensive disaster recovery plan and regular backup procedures will further enhance the company’s resilience against potential security incidents, ensuring business continuity and operational stability. By collaborating internally and with other relevant stakeholders, the organization will be well-equipped to navigate the complexities of data sovereignty and compliance, particularly with regard to external service providers.

In summary, this comprehensive approach not only secures the organization’s digital assets but also positions the organization for future growth and adaptability in an ever-evolving threat landscape. By prioritizing these initiatives, the organization can ensure that its PKI environment remains secure, compliant, and capable of supporting its long-term strategic objectives.