Digital certificates, also referred to as identity certificates, are electronic credentials issued to verify the identity of a user, device, server, or website. Free digital certificates are those that certain Certificate Authorities (CAs) issue at no cost. Trusted CAs, such as Let’s Encrypt, are organizations that verify identities and digitally sign certificates to attest to their authenticity.

Free digital certificates are most commonly used to enable HTTPS (Hypertext Transfer Protocol Secure) on websites through the SSL/TLS protocols, the cryptographic protocols that secure communications over networks.

Key Components of a Digital Certificate

A digital certificate contains critical information, such as the public key, subject and issuer details, validity period, and a digital signature, that collectively enable secure, authenticated communications.

- Public Key: Used in asymmetric cryptography to encrypt data or verify digital signatures.

- Subject Name: Identifies the entity (domain, user, or device) to which the certificate is issued.

- Issuer Name: Specifies the Certificate Authority (CA) responsible for generating the certificate.

- Validity Period: Indicates the start and end dates during which the certificate remains active and trusted.

- Digital Signature: A cryptographic value added by the CA to verify the certificate’s legitimacy and integrity.
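As a rough illustration of where these fields show up in practice, the sketch below summarizes the dictionary that Python's standard `ssl` module returns from `SSLSocket.getpeercert()`. The helper names and the `sample` values are hypothetical and exist only for illustration. This dictionary view does not expose the public key or the signature, which are carried in the encoded certificate itself.

```python
import ssl
from datetime import datetime, timezone


def _first(name, key):
    """Return the first value for `key` in a getpeercert()-style name, or None."""
    for rdn in name:
        for k, v in rdn:
            if k == key:
                return v
    return None


def summarize_cert(cert):
    """Pull the human-readable fields out of a getpeercert()-style dict."""
    def to_dt(text):
        # cert_time_to_seconds parses the "Mon DD HH:MM:SS YYYY GMT" format
        return datetime.fromtimestamp(ssl.cert_time_to_seconds(text), tz=timezone.utc)

    return {
        "subject": _first(cert.get("subject", ()), "commonName"),
        "issuer": _first(cert.get("issuer", ()), "organizationName"),
        "not_before": to_dt(cert["notBefore"]),
        "not_after": to_dt(cert["notAfter"]),
        "dns_names": [v for k, v in cert.get("subjectAltName", ()) if k == "DNS"],
    }


# Illustrative values only; not taken from a real certificate.
sample = {
    "subject": ((("commonName", "example.com"),),),
    "issuer": ((("countryName", "US"),), (("organizationName", "Example CA"),)),
    "notBefore": "Jan  1 00:00:00 2025 GMT",
    "notAfter": "Apr  1 00:00:00 2025 GMT",
    "subjectAltName": (("DNS", "example.com"), ("DNS", "www.example.com")),
}

summary = summarize_cert(sample)
validity_days = (summary["not_after"] - summary["not_before"]).days
print(summary["subject"], summary["issuer"], validity_days)
```

With a live connection, the same helper could be fed the result of `getpeercert()` directly.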

Who Issues Free Digital Certificates?

Free digital certificates are provided by trusted Certificate Authorities (CAs) that follow industry standards for verification. Some of these Certificate Authorities are:

Let’s Encrypt is a widely adopted non-profit CA offering free SSL/TLS certificates. It is trusted by major browsers like Chrome and Firefox and is used to secure millions of websites.

ZeroSSL also offers free domain validation certificates.

Buypass Go SSL provides free certificates valid for 180 days.

These organizations verify control of the domain before issuing a certificate, even when it is offered at no cost.

How Do Free Digital Certificates Work?

Once issued, how are these certificates used in practice? Let’s walk through how free digital certificates are deployed and how they help secure online communication.

- Certificate Installation: The website administrator installs the certificate on the server.

- Client Request: When a client, say a web browser, initiates a connection, the server responds by providing its digital certificate.

- Validation: The client verifies the certificate’s digital signature, checks that it chains to a trusted CA, and confirms that it is within its validity period and matches the requested hostname.

- Encrypted Session Establishment: If the certificate is valid, the client and server establish an encrypted communication channel using SSL/TLS. This step protects data from interception or tampering.
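The client side of this flow can be sketched with Python's standard `ssl` module. This is a minimal illustration, not a production client: the function names are hypothetical, and the choice of TLS 1.2 as the minimum version is an assumption made for the example.

```python
import socket
import ssl


def build_client_context():
    """Create a context that validates the server certificate and hostname."""
    ctx = ssl.create_default_context()  # loads the system's trusted CA store
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # assumption: refuse older versions
    return ctx


def fetch_peer_cert(host, port=443, timeout=5.0):
    """Connect, let the TLS handshake validate the certificate, return its fields."""
    ctx = build_client_context()
    with socket.create_connection((host, port), timeout=timeout) as raw:
        # server_hostname enables SNI and hostname checking during the handshake
        with ctx.wrap_socket(raw, server_hostname=host) as tls:
            return tls.version(), tls.getpeercert()


ctx = build_client_context()
print(ctx.verify_mode, ctx.check_hostname)
```

Calling `fetch_peer_cert("example.com")` (a placeholder host; it needs network access) would raise `ssl.SSLCertVerificationError` if the signature chain, validity period, or hostname check failed, so the encrypted session is only established for a certificate that passes validation.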

Note: Free certificates from CAs like Let’s Encrypt provide the same level of encryption as paid certificates. The main differences are in support, warranty, and validation levels.

Benefits of Free Digital Certificates

Free digital certificates offer strong encryption and authentication benefits without the financial burden, and they also support automation for easy management. They are recognized by all major browsers, making them accessible for individuals and organizations.

- Confidentiality: Encrypts the data exchanged between the client and server to block access by unauthorized parties.

- Authentication: Verifies the server’s identity (and, optionally, the client), reducing the risk of impersonation attacks (where attackers pretend to be a legitimate server).

- Integrity: Ensures that data remains unchanged while in transit.

- Cost-Effective Security: Free certificates lower the barrier for individuals, small businesses, and non-profits to implement secure communications.

- Automation: Many free certificate providers support automated issuance and renewal using protocols like ACME (Automatic Certificate Management Environment), reducing manual effort and the risk of expired certificates.

- Browser Compatibility: All major browsers and platforms trust free certificates from recognized providers. This ensures users do not receive security warnings when accessing your website.

Use Cases for Free Digital Certificates

So, where exactly can these certificates be applied? Let us look at some common and impactful use cases where free digital certificates are already making a difference.

- Securing Web Applications: Websites use free SSL/TLS certificates to enable HTTPS, ensuring that the data exchanged between a user’s browser and the server is encrypted. Let’s Encrypt has issued over three billion certificates worldwide to support encrypted web traffic. According to Let’s Encrypt and WIRED, most websites now use HTTPS by default, largely due to the accessibility of free certificates.

- Device Authentication: Free digital certificates verify devices in enterprise and IoT networks, strengthening access control and securing communication.

- Email Security: S/MIME (Secure/Multipurpose Internet Mail Extensions) certificates encrypt and sign emails, protecting against interception and spoofing.

- API Security: Certificates add a layer of protection to APIs by ensuring that only trusted clients can connect to backend systems.

- Secure Development Environments: Developers often use free certificates to protect non-production setups like staging or test environments. These certificates are suitable for internal use, even if production requires stronger validation.
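For the API security case, restricting access to trusted clients is usually done with mutual TLS, where the server also asks the client for a certificate. The sketch below shows one way this might look with Python's standard `ssl` module. The function name is hypothetical, the file paths are left to the caller, and they are optional here only so the sketch can run without real files. Which CA issues the client certificates is left open.

```python
import ssl


def build_mtls_server_context(cert_file=None, key_file=None, client_ca_file=None):
    """Server-side context that rejects clients without a trusted certificate."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.verify_mode = ssl.CERT_REQUIRED  # handshake fails if the client sends no valid cert
    if cert_file and key_file:
        # the server's own certificate chain and private key
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    if client_ca_file:
        # CA bundle used to decide which client certificates are trusted
        ctx.load_verify_locations(cafile=client_ca_file)
    return ctx


mtls_ctx = build_mtls_server_context()
print(mtls_ctx.verify_mode)
```

In a real deployment all three paths would be supplied, and the resulting context would be passed to the server's socket or framework.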

Real-World Examples

Many widely used platforms have adopted free certificates to enhance user trust and data security at scale. Here are some real-world examples of how free digital certificates make a difference.

GitHub Pages hosts millions of static websites and uses Let’s Encrypt to automatically provide free TLS certificates for all github.io and custom domains. This enables developers to secure their sites with HTTPS easily and at no cost.

According to Let’s Encrypt’s 2023 Annual Report, most web traffic in the United States now occurs over HTTPS, largely due to the widespread use of free certificates. This demonstrates the effectiveness and reliability of free digital certificates in securing web communications at scale.

Free vs. Paid Digital Certificates

Not all certificates are created equal. Before choosing one, it’s important to understand the key differences between free and paid options. Let’s compare them side by side.

| Feature | Free Certificates | Paid Certificates |
|---|---|---|
| Cost | No charge | Recurring annual or multi-year fees |
| Encryption | Industry-standard encryption | Same encryption strength as free certificates |
| Validation | Basic domain ownership check (DV only) | Offers DV, OV, and EV options with identity verification |
| Support | Limited to online resources or community forums | Includes dedicated customer support |
| Warranty | Typically, none or very limited | May include financial protection in case of certificate failure |
| Common Use | Personal websites, testing, and internal applications | Business websites, e-commerce, and enterprise use cases |

Validation Types Explained

- Domain Validation (DV): Verifies that the applicant controls the domain.

- Organization Validation (OV): Verifies domain control and confirms the organization’s legitimacy.

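- Extended Validation (EV): Involves a thorough identity check of the organization, providing the highest level of identity assurance. The verified organization name appears in the certificate details, although most current browsers no longer show a distinct EV indicator in the address bar.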

Limitations

Though useful, free digital certificates come with certain trade-offs that must be considered, especially for high-security environments.

- Limited Validation: Most free certificates are domain-validated only. They do not verify organizational identity.

- No Warranty: Free certificates do not provide financial compensation in case of certificate misuse or compromise.

- Support: Free CAs usually offer community-based support rather than dedicated technical support.

- Short Validity Periods: Free certificates often have shorter lifespans (e.g., 90 days), requiring automated renewal.

How to Get a Free Certificate from Let’s Encrypt

Obtaining a free SSL/TLS certificate from Let’s Encrypt is a straightforward process that can be completed with minimal technical expertise. Below are the detailed steps:

1. Meet the Prerequisites

- You must own a registered domain name.

- The domain should point to the public IP address of your server.

- You need root or administrative access to your server.

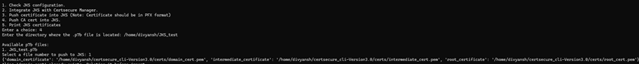

2. Choose and Install an ACME Client

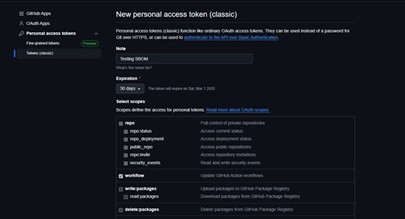

Let’s Encrypt certificates are issued using the ACME protocol, and you’ll need client software to interact with the Let’s Encrypt API. The most popular and widely supported client is Certbot.

On Ubuntu/Debian, install Certbot with:

sudo apt update

sudo apt install certbot python3-certbot-apache # For Apache

sudo apt install certbot python3-certbot-nginx # For Nginx

3. Request a Certificate

For Apache:

sudo certbot --apache

For Nginx:

sudo certbot --nginx

For a manual or DNS-based challenge (useful if you don’t have a web server running or for wildcard certificates):

sudo certbot certonly --manual

Certbot will prompt you to enter your domain name(s) and handle the domain validation process automatically. You may be asked which domains and subdomains to secure.
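When the request has to run unattended, for example from a provisioning script, the prompts can be replaced with command-line flags. The sketch below only assembles such a command as a list of arguments and does not run it. The helper name, domains, webroot path, and email address are placeholders, and the webroot method is just one of the options Certbot offers. The `--staging` flag points Certbot at the Let’s Encrypt test environment so experiments do not count against production rate limits.

```python
import shlex


def certbot_webroot_command(domains, webroot, email, staging=True):
    """Build a non-interactive `certbot certonly` command for the webroot method."""
    if not domains:
        raise ValueError("at least one domain is required")
    cmd = [
        "certbot", "certonly", "--webroot",
        "-w", webroot,            # directory the web server already serves
        "--non-interactive",      # never prompt; fail instead
        "--agree-tos",
        "-m", email,              # contact address for expiry notices
    ]
    for domain in domains:
        cmd += ["-d", domain]
    if staging:
        cmd.append("--staging")   # use the test environment
    return cmd


cmd = certbot_webroot_command(
    ["example.com", "www.example.com"], "/var/www/html", "admin@example.com"
)
print(shlex.join(cmd))
```

The resulting list could be handed to `subprocess.run` with root privileges once the placeholder values are replaced.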

4. Complete the Domain Validation

Certbot will perform domain validation, usually by placing a temporary file on your server or by updating DNS records, to prove you control the domain.
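To make the two validation methods more concrete, the sketch below computes what an ACME client publishes for each, following the challenge definitions in the ACME specification (RFC 8555). The token and account thumbprint are made-up placeholders; in a real exchange the CA supplies the token and the client derives the thumbprint from its account key. The function names are hypothetical.

```python
import base64
import hashlib


def key_authorization(token, account_thumbprint):
    """Value proving that the holder of the account key saw this token."""
    return f"{token}.{account_thumbprint}"


def http01_location(domain, token):
    """URL where the CA expects to fetch the key authorization (HTTP-01)."""
    return f"http://{domain}/.well-known/acme-challenge/{token}"


def dns01_record(domain, token, account_thumbprint):
    """TXT record name and value the CA looks up (DNS-01)."""
    key_auth = key_authorization(token, account_thumbprint).encode("ascii")
    digest = hashlib.sha256(key_auth).digest()
    value = base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")
    # wildcard requests are validated on the base domain
    base = domain[2:] if domain.startswith("*.") else domain
    return f"_acme-challenge.{base}", value


# Placeholder values for illustration only.
token = "example-token"
thumbprint = "example-thumbprint"

url = http01_location("example.com", token)
record_name, record_value = dns01_record("*.example.com", token, thumbprint)
print(url)
print(record_name, record_value)
```

The temporary file mentioned above corresponds to the HTTP-01 URL, and the DNS record update corresponds to the `_acme-challenge` TXT record.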

5. Certificate Installation and Deployment

Once validation is successful, Certbot will automatically install the certificate and configure your web server for HTTPS. The certificate files are typically stored in:

/etc/letsencrypt/live/your_domain/

Key files include:

- fullchain.pem (the certificate followed by the intermediate chain)

- privkey.pem (the private key)
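One quick way to see that `fullchain.pem` holds more than a single certificate is to count its PEM blocks. The sketch below does this on a fake two-block string so that it runs without access to a server. The helper names are hypothetical, and the default directory simply mirrors the path shown above.

```python
import re
from pathlib import Path

# Matches one PEM-encoded certificate, including its BEGIN/END markers.
PEM_BLOCK = re.compile(
    r"-----BEGIN CERTIFICATE-----.*?-----END CERTIFICATE-----", re.DOTALL
)


def live_dir(domain, root="/etc/letsencrypt/live"):
    """Directory where Certbot typically links the current files for a domain."""
    return Path(root) / domain


def split_chain(pem_text):
    """Return each certificate block in order: leaf first, then intermediates."""
    return PEM_BLOCK.findall(pem_text)


# Fake content standing in for a real fullchain.pem.
fake_chain = (
    "-----BEGIN CERTIFICATE-----\nAAAA\n-----END CERTIFICATE-----\n"
    "-----BEGIN CERTIFICATE-----\nBBBB\n-----END CERTIFICATE-----\n"
)

blocks = split_chain(fake_chain)
fullchain_path = live_dir("example.com") / "fullchain.pem"
print(len(blocks), fullchain_path)
```

On a real server, reading `fullchain_path.read_text()` (which requires root) and passing it to `split_chain` would show the leaf certificate followed by one or more intermediates.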

6. Automatic Renewal

Let’s Encrypt certificates are valid for 90 days. Certbot sets up automatic renewal by default, so you don’t need to renew the certificate manually. You can test renewal with:

sudo certbot renew --dry-run
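For monitoring outside of Certbot, the renewal decision comes down to simple date arithmetic. The sketch below assumes a 30-day renewal threshold, which is a common choice for 90-day certificates but should be treated as an assumption to adjust. The function names and dates are illustrative.

```python
from datetime import datetime, timedelta, timezone


def days_remaining(not_after, now=None):
    """Whole days left before the certificate expires (negative if expired)."""
    now = now or datetime.now(timezone.utc)
    return (not_after - now).days


def renewal_due(not_after, now=None, threshold_days=30):
    """True when fewer than `threshold_days` remain (threshold is an assumption)."""
    return days_remaining(not_after, now) < threshold_days


# Illustrative 90-day certificate issued on 1 January 2025.
issued = datetime(2025, 1, 1, tzinfo=timezone.utc)
not_after = issued + timedelta(days=90)

early = renewal_due(not_after, now=issued + timedelta(days=10))  # 80 days left
late = renewal_due(not_after, now=issued + timedelta(days=65))   # 25 days left
print(early, late)
```

A check like this can feed an alert when automatic renewal has silently stopped working.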

7. Alternative: Using cPanel or Hosting Control Panels

If your hosting provider offers cPanel, you can install Let’s Encrypt certificates through the SSL/TLS Status or AutoSSL feature, typically with just a few clicks.

8. Alternative: Bitnami and Other Stacks

For Bitnami or other application stacks, you may use the Lego client or built-in scripts to generate and install certificates, following the provider’s documentation.

How to Get a Free Certificate from ZeroSSL

ZeroSSL provides free 90-day certificates. The steps include:

- Creating a free account on their website

- Generating a Certificate Signing Request (CSR)

- Verifying domain ownership (via email, DNS, or HTTP methods)

- Downloading and installing the certificate on your server

How to Get a Free Certificate from Buypass Go SSL

Buypass Go SSL also issues free certificates valid for 180 days. The process involves:

- Registering for an account

- Generating a CSR

- Requesting a certificate and completing domain validation (email or DNS)

- Downloading and installing the certificate on your server

These providers support automation through protocols like ACME, making it easy to keep certificates up to date and avoid service interruptions.

How Can Encryption Consulting Help?

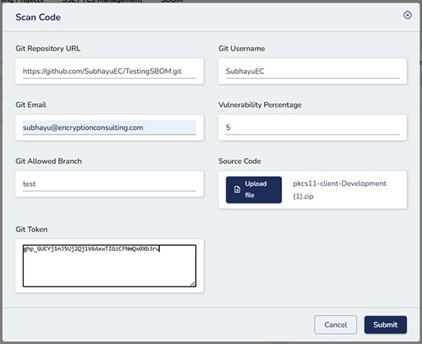

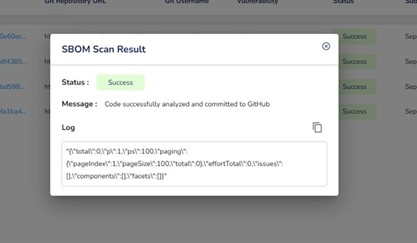

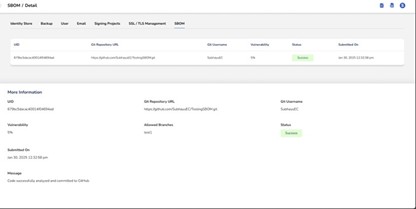

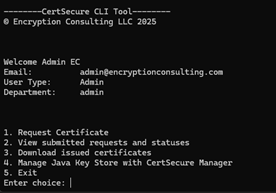

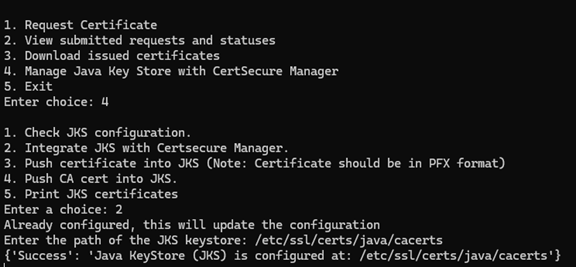

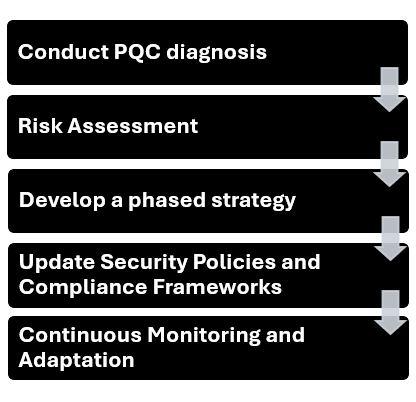

Encryption Consulting enables secure use of free digital certificates by helping organizations implement structured management through CertSecure Manager, which automates issuance, renewal, and tracking across environments. Their PKI as a Service supports cloud-based management of both free and enterprise certificates, while PKI Health Checks evaluate existing deployments for compliance with industry standards.

For teams relying on free certificate providers such as Let’s Encrypt, Encryption Consulting ensures seamless ACME protocol integration. This allows certificates to be automatically requested, installed, and renewed without manual intervention, reducing the risk of expiration and improving operational agility. Renewal policies can be enforced, and complete visibility into certificate usage is maintained across all systems.

They also support secure software development with CodeSign Secure, which protects code-signing processes using HSMs, policy enforcement, and centralized approval workflows. This is especially relevant when developers use free certificates for internal or open-source projects and still need controlled and auditable signing operations.

Conclusion

Free digital certificates have transformed how encryption is adopted across the internet. They offer accessible, secure, and automated ways to implement basic identity verification and encryption at scale. While not suitable for every situation, free digital certificates are still essential in the modern security toolkit.

Organizations should carefully evaluate when to use free certificates and when a higher level of assurance is required. With the correct tools and guidance, businesses can take full advantage of free certificates without compromising security or compliance.

Connect with Encryption Consulting today to learn more about how your organization can integrate free certificate usage with enterprise-grade controls.