In the ever-evolving cybersecurity landscape, encrypted tunnels stand as stalwart guardians, shielding sensitive data as it journeys across networks. Picture this: by one estimate, a staggering 7.7 exabytes of information traverse these secure channels every day. To put this into perspective, that’s hundreds of billions of DVDs’ worth of data per year. Established through protocols such as SSL/TLS or through VPNs, these fortresses protect data from the prying eyes of cyber threats. However, the common misconception that encrypted tunnels are impervious to danger can foster a false sense of security. This post demystifies the threats associated with encrypted tunnels, covering both well-known and lesser-known risks.

Understanding Encrypted Tunnels

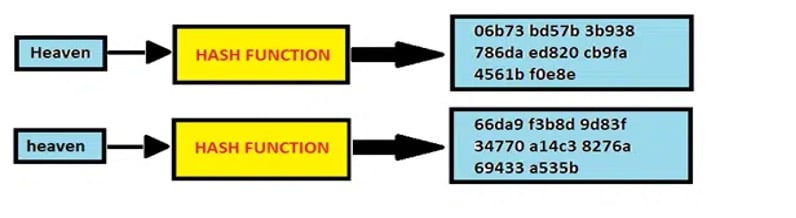

Before delving into the threats, it helps to grasp the basics. Encrypted tunnels create a secure pathway for data crossing the Internet or internal networks. They use encryption algorithms to scramble the information, making it unreadable to anyone without the proper decryption key. Here are some common examples of encrypted tunnels:

SSL/TLS: A Standard for Web Security

SSL (Secure Sockets Layer) and its successor, TLS (Transport Layer Security), are the standard protocols for securing communication over the Internet. SSL laid the groundwork for secure online communication, but every SSL version is now deprecated; TLS fixes the weaknesses found in its predecessor and provides a more robust framework for protecting the encrypted tunnel between a user’s web browser and a server.

SSL/TLS certificates let the browser verify the server’s identity, so users can be confident they are talking to the intended website rather than a malicious actor. This validation is what stops an attacker from simply presenting their own key and sitting in the middle of the connection, which makes it a crucial part of the tunnel’s integrity.
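
As one illustration, Python’s standard `ssl` module performs this validation when a context comes from `ssl.create_default_context()`: the server must present a certificate chain that leads to a trusted root and that matches the requested hostname. The following is a minimal client sketch, not a complete client; `example.com` is only a placeholder host.

```python
import socket
import ssl


def make_verifying_context() -> ssl.SSLContext:
    # The default context loads the system's trusted root certificates,
    # requires the server to present a valid chain (CERT_REQUIRED), and
    # checks that the certificate matches the hostname we asked for.
    return ssl.create_default_context()


def negotiated_version(host: str, port: int = 443) -> str:
    """Connect to host, validate its certificate, and return the TLS version used."""
    ctx = make_verifying_context()
    with socket.create_connection((host, port), timeout=5) as raw:
        # server_hostname is used for SNI and for the hostname check; a
        # certificate issued for another name raises ssl.SSLCertVerificationError.
        with ctx.wrap_socket(raw, server_hostname=host) as tls:
            return tls.version()


if __name__ == "__main__":
    print(negotiated_version("example.com"))  # placeholder host
```

Turning either check off (for example, setting `check_hostname = False` or `verify_mode = ssl.CERT_NONE`) reopens the door to impersonation, so such code deserves scrutiny in review.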

VPNs: Extending Security to Networks

Virtual Private Networks (VPNs) extend the concept of encrypted tunnels to entire networks. By encrypting the communication between devices and the VPN server, VPNs enable secure data transfer over public networks, such as the Internet.

In the case of VPNs, the encrypted tunnel protects data in transit and also masks the user’s IP address from the sites and services they reach, adding a layer of anonymity. For remote workers accessing sensitive company information over public networks such as hotel or café Wi-Fi, the encryption is what matters most: it prevents others on the same network from reading or tampering with the traffic.

Common Threats in Encrypted Tunnels

A comprehensive understanding of potential threats is paramount for fortifying digital defenses in encrypted tunnels. While encryption is a robust shield against unauthorized access, various vulnerabilities within specific tunnel types necessitate a nuanced approach to cybersecurity.

Man-in-the-Middle (MitM) Attacks

Despite encryption, attackers can exploit weaknesses to insert themselves between the communicating parties. In a MitM attack, the perpetrator intercepts, and potentially alters, the data flowing through the tunnel. A notable example began in 2014, when Lenovo shipped consumer laptops preinstalled with Superfish adware. To inject ads into encrypted web pages, Superfish installed its own root certificate and re-signed the certificates of HTTPS sites on the fly. Because the same root key was present on every affected machine and could be extracted, attackers could use it to impersonate websites and view the web activity and login data of users browsing with Chrome or Internet Explorer. To mitigate this threat, implement strong authentication, validate certificates strictly, audit which root certificates your devices trust, and keep encryption protocols up to date.
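
Superfish worked by adding an unexpected root certificate to the trust store, so one practical check is to compare each device’s trusted roots against a known-good baseline. A minimal Python sketch, assuming you can already export the trusted certificates as DER bytes and maintain a set of approved SHA-256 fingerprints (both inputs are hypothetical here):

```python
import hashlib
from typing import Iterable, List, Set


def fingerprint_der(der_cert: bytes) -> str:
    """SHA-256 fingerprint of a DER-encoded certificate as uppercase hex."""
    return hashlib.sha256(der_cert).hexdigest().upper()


def unexpected_roots(trusted_certs: Iterable[bytes], approved: Set[str]) -> List[str]:
    """Return fingerprints of trusted roots that are missing from the approved baseline."""
    found = {fingerprint_der(der) for der in trusted_certs}
    return sorted(found - approved)
```

On Windows, Python’s `ssl.enum_certificates("ROOT")` returns the system root store as `(cert_bytes, encoding_type, trust)` tuples; the entries whose encoding is `"x509_asn"` could be fed to `unexpected_roots`. Any fingerprint it reports deserves investigation.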

Endpoint Vulnerabilities

The security of an encrypted tunnel is only as strong as its endpoints. If a system at either end of the tunnel is compromised, encryption does nothing to stop the attacker, who can use it as an entry point for further malicious activity. A well-known example is the 2017 Equifax breach, in which attackers exploited an unpatched Apache Struts vulnerability (CVE-2017-5638) in an internet-facing web application and went on to expose the sensitive data of millions of consumers. The incident underscored the importance of regular security audits, timely patch management, and endpoint protection.
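
Patch management starts with knowing which endpoints fall below a required version. The sketch below compares a hypothetical software inventory against minimum patched versions; the host names, the package name `example-web-framework`, and the version numbers are all illustrative.

```python
from typing import Dict, List, Tuple


def parse_version(version: str) -> Tuple[int, ...]:
    # "2.3.32" -> (2, 3, 32); assumes plain numeric dotted versions.
    return tuple(int(part) for part in version.split("."))


def unpatched(inventory: Dict[str, Dict[str, str]],
              minimum: Dict[str, str]) -> List[Tuple[str, str, str]]:
    """Return (host, package, installed version) for every package below its minimum."""
    findings = []
    for host, packages in sorted(inventory.items()):
        for package, installed in sorted(packages.items()):
            required = minimum.get(package)
            # Tuple comparison orders versions numerically, so 2.3.9 < 2.3.10.
            if required and parse_version(installed) < parse_version(required):
                findings.append((host, package, installed))
    return findings


inventory = {
    "web-01": {"example-web-framework": "2.3.31"},  # hypothetical hosts
    "web-02": {"example-web-framework": "2.3.32"},
}
print(unpatched(inventory, {"example-web-framework": "2.3.32"}))
# [('web-01', 'example-web-framework', '2.3.31')]
```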

Weak Encryption Algorithms

The strength of encryption directly affects a tunnel’s resilience. Outdated algorithms and short keys can fall to brute-force or downgrade attacks. The “Logjam” vulnerability of 2015 showed how: servers that still supported 512-bit “export-grade” Diffie-Hellman could be tricked into downgrading a TLS connection to that weak key exchange, which an attacker could then break to eavesdrop. At disclosure, a notable share of popular HTTPS websites were found to be vulnerable. To counter such threats, organizations should track current industry standards, disable legacy cipher suites, and adopt strong encryption methods.
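
Logjam was only exploitable against servers that still allowed export-grade cipher suites, so auditing what an endpoint is willing to negotiate is a cheap first defense. A hedged Python sketch: `WEAK_MARKERS` is an assumed policy of name fragments for export-grade, broken, or unauthenticated suites in OpenSSL naming, not an official list.

```python
import ssl
from typing import List

# Assumed policy: OpenSSL cipher-suite name fragments that signal export-grade
# (EXP), missing encryption or authentication (NULL, ADH), or broken algorithms.
WEAK_MARKERS = ("EXP", "NULL", "ADH", "RC4", "DES", "MD5")


def is_weak(cipher_name: str) -> bool:
    return any(marker in cipher_name for marker in WEAK_MARKERS)


def hardened_client_context() -> ssl.SSLContext:
    ctx = ssl.create_default_context()
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse SSL 3.0, TLS 1.0 and 1.1
    # For TLS 1.2, allow only forward-secret ECDHE key exchange with AEAD
    # ciphers (no DHE at all); TLS 1.3 suites are managed separately.
    ctx.set_ciphers("ECDHE+AESGCM:ECDHE+CHACHA20")
    return ctx


def weak_suites(ctx: ssl.SSLContext) -> List[str]:
    """Names of enabled cipher suites that match the weak-marker policy."""
    return [c["name"] for c in ctx.get_ciphers() if is_weak(c["name"])]


print(weak_suites(hardened_client_context()))  # []
```

The same `is_weak` check can be applied to the suite a live connection actually negotiated, which `SSLSocket.cipher()` reports as its first element.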

Insider Threats

Internal actors with malicious intent pose a significant risk: employees or contractors with access to encrypted tunnels can misuse their privileges. In 2018, a former Cisco employee accessed the company’s cloud infrastructure months after leaving and deployed malicious code that deleted virtual machines supporting its Webex Teams service. The infrastructure reportedly lacked robust access management and two-factor authentication, which made the intrusion possible. Stringent access controls, prompt revocation of departing users’ access, regular audits, and a security-aware culture are essential to mitigating insider threats.
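
The Cisco case shows why access should be revoked as soon as someone leaves. A minimal access-review sketch, assuming a hypothetical credential inventory (the `Credential` records) and a roster of current staff from HR:

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass
class Credential:
    owner: str
    kind: str          # hypothetical labels, e.g. "vpn-cert", "ssh-key", "cloud-api-key"
    mfa_enabled: bool


def access_review(creds: List[Credential], current_staff: Set[str]) -> List[str]:
    """Flag credentials held by departed users and active credentials without MFA."""
    issues = []
    for cred in creds:
        if cred.owner not in current_staff:
            issues.append(f"revoke {cred.kind} of departed user {cred.owner}")
        elif not cred.mfa_enabled:
            issues.append(f"enforce MFA on {cred.kind} of {cred.owner}")
    return issues
```

Running a check like this on a schedule, rather than only during offboarding, also catches credentials that were missed the first time.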

Denial of Service (DoS) Attacks

While encrypted tunnels focus on confidentiality, availability is equally crucial. Denial-of-service (DoS) attacks flood a system with traffic to exhaust its resources, and encrypted endpoints are attractive targets because each new TLS or VPN handshake can cost the server considerably more computation than it costs the attacker to request. In 2012, a series of powerful, coordinated distributed denial-of-service (DDoS) attacks against six major U.S. banks disrupted their online services and showed how exposed critical digital services can be. Traffic filtering, rate limiting, load balancing, and redundant infrastructure all improve resilience against DoS attacks.
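
Rate limiting is one form of the traffic filtering mentioned above: capping how many new connections each source can open per second blunts floods aimed at expensive TLS or VPN handshakes. A minimal per-source token bucket in Python; the rates are illustrative, and real deployments usually enforce this in a load balancer or firewall rather than in application code.

```python
import time
from typing import Callable, Dict, Tuple


class TokenBucketLimiter:
    """Per-source token bucket: refills `rate` tokens per second up to
    `capacity`; each new connection or handshake spends one token."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        # source address -> (tokens left, time of last update);
        # a real limiter would also evict idle sources.
        self.buckets: Dict[str, Tuple[float, float]] = {}

    def allow(self, source: str) -> bool:
        now = self.clock()
        tokens, last = self.buckets.get(source, (self.capacity, now))
        # Refill for the elapsed time, never beyond the burst capacity.
        tokens = min(self.capacity, tokens + (now - last) * self.rate)
        allowed = tokens >= 1
        if allowed:
            tokens -= 1
        self.buckets[source] = (tokens, now)
        return allowed
```

Per-source limits help against a single noisy client; against a large distributed attack they are one layer among several, alongside upstream filtering and redundant capacity.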

IPsec Tunnels: A Potential Gateway for Intrusion

IPsec, a cornerstone of many VPNs, can become an attractive target for intruders. During the reconnaissance and initial-intrusion phases of an attack, adversaries may probe or exploit IPsec tunnels, which makes hardening VPN endpoints essential. One reported 2018 attack on an organization’s IPsec tunnels showed that organizations need stronger security configurations and rigorous monitoring of these tunnels. Deploying intrusion detection systems (IDS) and regularly reviewing and updating IPsec configurations are crucial steps.
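
Reviewing IPsec configurations can be partly automated. The sketch below assumes strongSwan-style proposal strings such as `aes256-sha256-modp2048` and flags components that this example policy treats as weak (DES/3DES, MD5/SHA-1, and Diffie-Hellman groups smaller than 2048 bits); the policy is an assumption to adjust to your own standard.

```python
from typing import List

# Assumed policy: DES/3DES encryption, MD5/SHA-1 integrity, and
# Diffie-Hellman groups 1, 2 and 5 (768-, 1024- and 1536-bit MODP).
WEAK_ALGORITHMS = {"des", "3des", "md5", "sha1", "modp768", "modp1024", "modp1536"}


def weak_components(proposals: str) -> List[str]:
    """Return weak algorithms found in a comma-separated, strongSwan-style proposal list."""
    weak = []
    for proposal in proposals.split(","):
        # A trailing "!" marks a strict proposal list in strongSwan; it is not an algorithm.
        for algorithm in proposal.strip().rstrip("!").lower().split("-"):
            if algorithm in WEAK_ALGORITHMS:
                weak.append(algorithm)
    return weak


print(weak_components("aes256-sha256-modp2048,3des-sha1-modp1024!"))
# ['3des', 'sha1', 'modp1024']
```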

Site-to-Site VPN Tunnels

Site-to-site VPNs, which link the offices and data centers of large organizations, can give attackers concealed routes for reconnaissance: once one site is compromised, the tunnel carries the attacker’s probing traffic to every connected site. In one reported 2019 case, a multinational corporation suffered reconnaissance through its site-to-site VPNs because traffic inside the tunnels was not regularly inspected. To close these concealed routes, organizations should monitor and log tunnel traffic, analyze those logs regularly, and deploy intrusion prevention systems (IPS) to identify and block suspicious activity.
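
A simple signal in tunnel flow logs is one host touching an unusually large number of distinct destination and port pairs, which is typical of network scanning. A Python sketch, assuming a hypothetical flow-log schema of (source IP, destination IP, destination port) and an illustrative threshold:

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple

# Hypothetical flow-log record: (source IP, destination IP, destination port)
Flow = Tuple[str, str, int]


def likely_scanners(flows: Iterable[Flow], threshold: int = 100) -> List[str]:
    """Sources that contacted at least `threshold` distinct (destination, port) pairs."""
    targets: Dict[str, Set[Tuple[str, int]]] = defaultdict(set)
    for src, dst, port in flows:
        targets[src].add((dst, port))
    return sorted(src for src, seen in targets.items() if len(seen) >= threshold)


flows = [("10.1.0.5", "10.2.0.1", port) for port in range(1, 201)]  # sweeps 200 ports
flows += [("10.1.0.7", "10.2.0.9", 443)] * 50                      # repeated normal traffic
print(likely_scanners(flows))  # ['10.1.0.5']
```

In practice the count would be computed per time window (for example, per hour) so that long-lived, legitimate hosts do not slowly cross the threshold.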

SSH Tunnels: Covert Movements and Stealth Attacks

SSH is designed for secure remote access and data transfer, but its port-forwarding feature lets users with a login wrap arbitrary traffic inside an encrypted SSH session. Attackers who compromise SSH access use exactly this capability to move laterally and slip traffic past firewalls unnoticed. Organizations should enforce strong authentication, restrict or disable forwarding where it is not needed, keep SSH configurations up to date, and monitor SSH traffic in real time. Continuous scrutiny of SSH tunnels, including tracking user activity and auditing access logs, is essential for detecting and containing breaches.
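
One concrete hardening step is to check that servers which should not relay traffic have SSH forwarding disabled. A hedged Python sketch that reads the global section of an OpenSSH `sshd_config` and compares a few real keywords (`AllowTcpForwarding`, `PermitTunnel`, `GatewayPorts`, `PasswordAuthentication`, `PermitRootLogin`) against an assumed policy. It ignores `Match` blocks and `Include` files, so feeding it the effective configuration printed by `sshd -T` is more reliable in practice.

```python
from typing import Dict, List

# Assumed policy for hosts that should not relay traffic through SSH.
EXPECTED = {
    "allowtcpforwarding": "no",
    "permittunnel": "no",
    "gatewayports": "no",
    "passwordauthentication": "no",
    "permitrootlogin": "no",
}
# OpenSSH defaults for the same keywords when they are not set.
DEFAULTS = {
    "allowtcpforwarding": "yes",
    "permittunnel": "no",
    "gatewayports": "no",
    "passwordauthentication": "yes",
    "permitrootlogin": "prohibit-password",
}


def audit_sshd_config(text: str) -> List[str]:
    """Compare the global section of an sshd_config against EXPECTED."""
    effective: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()
        if not line:
            continue
        parts = line.split(None, 1)
        keyword = parts[0].lower()
        if keyword == "match":
            break  # conditional blocks are out of scope for this sketch
        value = parts[1].strip().lower() if len(parts) > 1 else ""
        # Recent OpenSSH versions keep the first value read for a keyword.
        effective.setdefault(keyword, value)
    findings = []
    for keyword, wanted in EXPECTED.items():
        actual = effective.get(keyword, DEFAULTS[keyword])
        if actual != wanted:
            findings.append(f"{keyword} is {actual!r}, expected {wanted!r}")
    return findings
```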

TLS/SSL Tunnels: Potential for Identity Manipulation

TLS/SSL tunnels, which secure most web transactions, can also become arenas for identity manipulation. Attackers may present forged or fraudulently obtained certificates to impersonate a legitimate site and position themselves as a man in the middle, so certificate handling needs continuous scrutiny. Robust certificate management practices, up-to-date TLS configurations, and web application firewalls (WAFs) are essential, along with regular security assessments to identify and address vulnerabilities.
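
For high-value connections, certificate pinning adds a check on top of normal validation: the client accepts only certificates whose fingerprint it already knows. A minimal Python sketch; the pin values are hypothetical and would come from your own certificates. Pinning a whole certificate means the pin set must be updated on every renewal; pinning the public key instead avoids that but requires parsing the certificate.

```python
import hashlib
from typing import Iterable


def normalize(fingerprint: str) -> str:
    # Accept both "AB:CD:..." and "abcd..." forms.
    return fingerprint.replace(":", "").lower()


def cert_matches_pins(der_cert: bytes, pins: Iterable[str]) -> bool:
    """True if the SHA-256 fingerprint of the DER certificate is in the pin set."""
    return hashlib.sha256(der_cert).hexdigest() in {normalize(p) for p in pins}
```

After a normally validated handshake, `SSLSocket.getpeercert(binary_form=True)` returns the server certificate in DER form; a client would close the connection if `cert_matches_pins` returns `False`.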

Guarding Against Encrypted Phishing

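Phishing campaigns abuse TLS/SSL as well. Attackers host deceptive lookalike websites behind valid certificates, whether obtained legitimately for domains they control or stolen, so that victims see the familiar padlock. Because the padlock proves only that the connection is encrypted to the named domain, not that the site is trustworthy, trust in HTTPS sessions must be backed by proactive measures and regular validation.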

Phishing that hides behind encryption has continued to evolve. The “DarkTequila” campaign, publicly disclosed in 2018 and aimed mainly at users in Latin America, reportedly used TLS-encrypted connections to mask its malicious activity and deceptive websites to steal users’ sensitive information. Cases like this underline the need for proactive defenses against encrypted phishing, such as advanced threat detection systems, regular security awareness training, and stronger SSL/TLS certificate validation processes.
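
One way to strengthen certificate validation against phishing is to watch for certificates issued to names that resemble your own domains, for example by monitoring Certificate Transparency logs. A simple Python sketch of the matching step using string similarity from `difflib`; the 0.8 threshold and the domain names are illustrative, and the check misses tricks such as `example.com.attacker.net` or Unicode homoglyphs, which need separate rules.

```python
from difflib import SequenceMatcher
from typing import Iterable, List, Tuple


def find_lookalikes(observed: Iterable[str], protected: Iterable[str],
                    threshold: float = 0.8) -> List[Tuple[str, str]]:
    """Return (observed, protected) pairs that are similar but not identical."""
    brands = [p.lower().rstrip(".") for p in protected]
    hits = []
    for name in observed:
        name = name.lower().rstrip(".")
        for brand in brands:
            # ratio() is 1.0 for identical strings and falls toward 0.0 as they diverge.
            if name != brand and SequenceMatcher(None, name, brand).ratio() >= threshold:
                hits.append((name, brand))
    return hits


print(find_lookalikes(["examp1e.com", "example.com", "unrelated.org"], ["example.com"]))
# [('examp1e.com', 'example.com')]
```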

Best Practices for Secure Encrypted Tunnels

To bolster the security of encrypted tunnels, consider implementing the following practices:

Comprehensive Visibility with TLS/SSL Inspection

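Understanding what flows through your tunnels is essential for spotting threats, and TLS/SSL inspection provides that visibility. Most inspection deployments terminate TLS at a trusted proxy, examine the decrypted traffic, and re-encrypt it before forwarding. Passive decryption with a server’s private key works only for legacy RSA key exchange; connections that use forward-secret (EC)DHE key exchange, including all TLS 1.3 traffic, cannot be read that way. With the right design, organizations can monitor traffic effectively and respond swiftly to emerging risks.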
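
Whether a session can be decrypted passively with the server’s certificate key depends on the key exchange, which is visible in the negotiated cipher suite. A Python sketch, assuming OpenSSL-style suite names such as those returned by `SSLSocket.cipher()`; it is a simplification that ignores rarer suite families such as PSK.

```python
def lacks_forward_secrecy(cipher_name: str) -> bool:
    """True if recorded traffic could later be decrypted with the server's certificate key."""
    if cipher_name.startswith("TLS_"):
        # TLS 1.3 suites: the certificate key only signs; it never decrypts session keys.
        return False
    # TLS 1.2 and earlier: OpenSSL prefixes ephemeral key exchange with ECDHE-,
    # DHE-, the older EDH-, or the anonymous ADH-/AECDH-; names such as
    # AES256-GCM-SHA384 use static RSA key exchange.
    return not cipher_name.startswith(("ECDHE-", "DHE-", "EDH-", "ADH-", "AECDH-"))
```

Sessions for which this returns `False` are the ones that need a terminating proxy (or endpoint-side logging) to be inspected at all.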

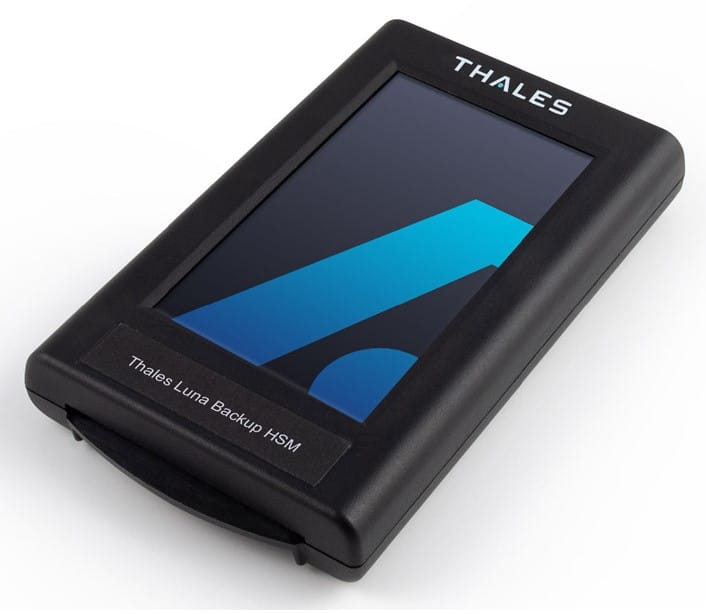

Oversight of SSH Key Entitlements

Any encrypted tunnel can be abused, and SSH keys are a common way in. Regularly reviewing who holds SSH keys and what each key can access adds a layer of protection against insider threats and unauthorized access.
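
Reviewing SSH key entitlements usually means checking each `authorized_keys` file against an inventory of approved keys. A Python sketch, assuming a hypothetical inventory of approved fingerprints in OpenSSH’s `SHA256:` format (the form `ssh-keygen -lf` prints); it flags unknown keys and the deprecated DSA (`ssh-dss`) type, and it does not fully parse option strings that contain spaces.

```python
import base64
import hashlib
from typing import List, Set, Tuple

KEY_TYPES = {
    "ssh-ed25519", "ssh-rsa", "ssh-dss",
    "ecdsa-sha2-nistp256", "ecdsa-sha2-nistp384", "ecdsa-sha2-nistp521",
    "sk-ssh-ed25519@openssh.com", "sk-ecdsa-sha2-nistp256@openssh.com",
}
DEPRECATED_TYPES = {"ssh-dss"}


def openssh_fingerprint(b64_blob: str) -> str:
    """SHA-256 fingerprint in OpenSSH's format: unpadded base64 after 'SHA256:'."""
    digest = hashlib.sha256(base64.b64decode(b64_blob)).digest()
    return "SHA256:" + base64.b64encode(digest).decode("ascii").rstrip("=")


def audit_authorized_keys(text: str, approved: Set[str]) -> List[Tuple[str, str]]:
    """Return (fingerprint, reason) for keys that are unapproved or of a deprecated type."""
    findings = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        tokens = line.split()
        # Options such as from="..." may precede the key type, so search for it.
        idx = next((i for i, tok in enumerate(tokens) if tok in KEY_TYPES), None)
        if idx is None or idx + 1 >= len(tokens):
            findings.append(("unparsed", line))
            continue
        key_type, blob = tokens[idx], tokens[idx + 1]
        fp = openssh_fingerprint(blob)
        if key_type in DEPRECATED_TYPES:
            findings.append((fp, "deprecated key type " + key_type))
        if fp not in approved:
            findings.append((fp, "not in approved inventory"))
    return findings
```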

Continuous Monitoring and Timely Key Management

Automated tools can continuously monitor machine identities such as TLS certificates and SSH keys, so that expired or unused keys are detected promptly. Rotating or revoking stale keys limits the window in which a stolen key can be used for man-in-the-middle attacks or malware injection.
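
The core of such monitoring is a periodic review of an identity inventory. A minimal Python sketch, assuming a hypothetical inventory of `MachineIdentity` records with expiry and last-use timestamps; the 30-day and 90-day limits are illustrative.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional, Tuple


@dataclass
class MachineIdentity:
    name: str                       # hypothetical inventory label
    not_after: datetime             # certificate or key expiry
    last_used: Optional[datetime]   # None if never observed in use


def review(identities: List[MachineIdentity], now: datetime,
           expiry_window: timedelta = timedelta(days=30),
           idle_limit: timedelta = timedelta(days=90)) -> List[Tuple[str, str]]:
    """Return (name, action) pairs for expired, soon-to-expire, and idle identities."""
    actions = []
    for ident in identities:
        if ident.not_after <= now:
            actions.append((ident.name, "expired: replace now"))
        elif ident.not_after - now <= expiry_window:
            actions.append((ident.name, "expiring soon: schedule rotation"))
        if ident.last_used is None or now - ident.last_used > idle_limit:
            actions.append((ident.name, "unused: candidate for revocation"))
    return actions
```

For live servers, the `notAfter` field from `SSLSocket.getpeercert()` can be converted with `ssl.cert_time_to_seconds` to populate `not_after`.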

Multi-Factor Authentication (MFA) Implementation

Multi-Factor Authentication (MFA) adds another layer of security to encrypted tunnels. Requiring more than one form of verification, such as a password plus a one-time code from an authenticator app or a hardware token, strengthens access controls and thwarts many unauthorized access attempts.
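
Time-based one-time passwords (TOTP, RFC 6238), the codes shown by most authenticator apps, are a common second factor. A minimal verification sketch using only Python’s standard library: it implements HOTP (RFC 4226) with HMAC-SHA1 and accepts codes from one time step on either side to tolerate clock drift. A production system would also need to reject reuse of a code that was already accepted.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HOTP (RFC 4226): HMAC-SHA1 over an 8-byte counter, then dynamic truncation."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, for_time: float, step: int = 30, digits: int = 6) -> str:
    """TOTP (RFC 6238): HOTP with the counter derived from Unix time."""
    return hotp(secret, int(for_time) // step, digits)


def verify_totp(secret: bytes, submitted: str, for_time: Optional[float] = None,
                step: int = 30, digits: int = 6, window: int = 1) -> bool:
    # Enforce the full code length so a short guess cannot match.
    if len(submitted) != digits or not (submitted.isascii() and submitted.isdigit()):
        return False
    now = time.time() if for_time is None else for_time
    counter = int(now) // step
    return any(
        hmac.compare_digest(hotp(secret, c, digits), submitted)  # constant-time compare
        for c in range(counter - window, counter + window + 1)
        if c >= 0
    )
```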

Regular Security Training for Employees

Invest in regular security training so employees recognize potential threats. Educated employees are better equipped to identify and respond to security risks such as phishing, which reduces the likelihood of accidental insider incidents.

Secure Configuration of VPNs and Encryption Protocols

Ensure that VPNs and encryption protocols are securely configured. This includes using strong encryption algorithms, regularly updating protocols, and adhering to industry best practices for secure configurations.

Incident Response Plan Development

Develop a robust incident response plan specific to encrypted tunnel breaches. A well-defined plan enables organizations to respond swiftly and effectively to security incidents, minimizing potential damage.

Regular Security Audits and Penetration Testing

Conduct regular security audits and penetration testing to proactively identify vulnerabilities in your network and encrypted tunnels. Regular assessments help in uncovering potential weaknesses, ensuring that security measures are robust and effective. Penetration testing simulates real-world attacks, providing valuable insights into the organization’s defensive capabilities and areas for improvement.

Conclusion

Demystifying threats in encrypted tunnels is the first step towards fortifying your cybersecurity defenses. By understanding the potential risks, you can implement proactive measures to safeguard your data and maintain the integrity of your encrypted connections.

Regularly updating security protocols, educating personnel on cybersecurity awareness, and leveraging advanced threat detection technologies are essential components of a robust cybersecurity strategy.

In an ever-evolving digital landscape, staying informed and vigilant is key. As you navigate the complexities of encrypted tunnels, remember that knowledge is your greatest asset in the ongoing battle against cyber threats. New threats will keep emerging, so anticipate and adapt to them to keep your defenses resilient. Stay secure, stay informed, and never assume your encrypted tunnels are impenetrable.