Introduction

In 2025, cybersecurity and regulatory compliance have become strategic priorities for organizations worldwide, transcending traditional check-the-box exercises to underpin business resilience and trust. Cyber threats, privacy concerns, and emerging technologies are prompting new laws and standards at a rapid pace. In parallel, stakeholders from investors to consumers expect organizations to not only secure data and systems but also demonstrate ethical governance and transparency.

This comprehensive overview explores key compliance trends shaping 2025, spanning data protection and privacy, encryption mandates, AI governance, breach disclosure, supply chain security, identity management, automation of compliance, and challenges in critical industries. The goal is to provide cybersecurity professionals, compliance experts, and technical stakeholders with a clear picture of the evolving global compliance landscape and practical insights for navigating the road ahead.

Global Data Protection and Privacy Regulations Evolve

Data protection regulations have continued to expand across the globe, with more countries and states enacting privacy laws. As of early 2025, 144 countries have established data protection or consumer privacy laws, covering roughly 79 to 82% of the world’s population. This represents a dramatic increase in just the last five years. In the United States, privacy regulation is shifting from a single-state phenomenon (California’s pioneering CCPA/CPRA) to a patchwork of state laws. As of 2025, 21 states (42% of U.S. states) have passed comprehensive consumer privacy statutes.

Critically, eight new state privacy laws take effect in 2025, including those of Delaware, Iowa, Nebraska, and New Hampshire (all January 1), followed by others such as Tennessee, Indiana, Montana, Oregon, Texas, and more rolling out later in the year. By year’s end, these laws will double the number of states with privacy frameworks from 16% to 32% of all states, covering an estimated 43% of the U.S. population. This rapid expansion signals a new era where data privacy is a baseline legal requirement across much of the U.S., not just a California-centric concern.

GDPR and international frameworks are maturing. In the EU, the landmark General Data Protection Regulation (GDPR) remains a global benchmark and is being refined and enforced rigorously. Regulators in the EU issued €1.2 billion in fines for GDPR violations in 2024 alone (a decrease of 33% from 2023), showing that enforcement has teeth. While GDPR’s core principles stay the same, there are discussions on simplifying compliance for SMEs and other refinements to ensure the law remains effective.

The UK, post-Brexit, is updating its own regime: the Data Protection and Digital Information Bill (often dubbed “UK GDPR”) is under review in 2025 to tweak requirements and reduce certain burdens while maintaining high standards. Outside Europe, many countries have introduced or bolstered privacy laws. For example, India’s Digital Personal Data Protection Act (enacted 2023) is coming into force, and countries from Brazil to South Korea and Kenya have new or updated data protection statutes by 2025.

According to the UN, over 70% of countries now have data privacy legislation and another 10% are drafting laws, which amounts to nearly global adoption. This means organizations operating internationally face a variety of requirements (consent, data localization, breach notification, individual rights, etc.) and must keep up to date with regional nuances.

U.S. federal action and global alignment pressures. Despite the flurry of state-level laws, the U.S. still lacks a single federal privacy law. However, public pressure is high: 72% of Americans believe there should be more government regulation of how companies handle personal data, and over half of U.S. voters (in surveys) support a unified national privacy law.

The now-stalled American Data Privacy and Protection Act (ADPPA) showed bipartisan interest in Congress; while it didn’t pass, it outlined measures widely supported by the public (e.g., banning the sale of data without consent, data minimization, private rights of action). This indicates that federal standards could eventually emerge to harmonize the patchwork.

Internationally, frameworks like the OECD’s privacy guidelines and the Global Cross-Border Privacy Rules (CBPR) system are gaining momentum, aiming to bridge differences and facilitate data flows between jurisdictions through mutual recognition. Notably, in 2023 the EU and U.S. agreed on a new Data Privacy Framework to permit transatlantic data transfers, replacing the invalidated Privacy Shield, a critical development for multinational companies.

In Asia, cross-border frameworks and regional agreements are also taking shape. Overall, the trend is toward greater global convergence in privacy principles, even as local compliance details proliferate.

Key implications for organizations: Data protection compliance in 2025 requires a truly global outlook. Companies must track and implement a myriad of requirements, from GDPR’s strict consent and data subject rights to state laws granting rights like opt-outs of sales or AI profiling. They should expect more frequent updates and new laws. For example, at least 264 regulatory changes in privacy were recorded globally in a single month (May 2025), underscoring how fast-moving this space is.

Enforcement is intensifying not just via fines but also via court rulings and cross-border cooperation among regulators. Businesses should invest in robust privacy programs: conduct data mapping, update privacy notices, enable consumer rights request workflows, and ensure a “privacy by design” approach for new products. The growing public awareness and concern about privacy (a majority in many countries are worried about how their data is used) means compliance is also key to maintaining customer trust and reputation. In short, protecting personal data is no longer just a legal checkbox; it’s part of core operations and brand integrity.

Encryption and Cryptographic Compliance

Encryption moves from best practice to baseline requirement. As cyber threats escalate, encryption of sensitive data has become a centerpiece of compliance and risk mitigation strategies. Organizations increasingly recognize that robust encryption, both for data at rest and in transit, can dramatically reduce the impact of breaches. The 2025 Global Encryption Trends Study found that 72% of organizations implementing an enterprise encryption strategy experienced reduced impacts from data breaches, highlighting how effective encryption is in safeguarding data.

Conversely, companies lacking encryption protections suffer more severe breaches; one analysis noted that organizations with comprehensive encryption in place are 70% less likely to experience a major data breach compared to those without full encryption coverage.

Regulators are taking note. Many data protection laws explicitly or implicitly require encryption of personal data. GDPR, for instance, cites encryption as a recommended safeguard (and breach notifications can be avoided if stolen data was encrypted). In the U.S., state laws and sector regulations (like finance and healthcare) increasingly mandate encryption for certain data.

Even historically lenient regimes are stiffening: a proposed 2025 update to the U.S. HIPAA Security Rule would make encryption of electronic protected health information a mandatory requirement (where previously it was an “addressable” implementation). Similarly, the EU’s NIS2 Directive lists “policies for the use of cryptography and encryption” as a required security measure for essential services. In short, what was once optional is now expected: encrypt your data or face compliance consequences.

Tailored Advisory Services

We assess, strategize & implement encryption strategies and solutions customized to your requirements.

Key Findings from the 2025 Encryption Report

This year’s Global Encryption Trends report (and related studies) also reveals several emerging themes in cryptographic compliance:

- Encryption adoption is at all-time highs: Enterprise adoption of encryption has surged, with more organizations applying encryption consistently across databases, applications, and cloud services. A Ponemon Institute survey noted that the past year saw the largest increase in encryption deployment in over a decade, reflecting both regulatory pressure and board-level attention on data security. However, challenges remain in managing encryption keys, discovering all sensitive data to encrypt, and integrating encryption across hybrid cloud environments.

- Automation and AI in key management: About 58% of large enterprises now leverage AI or advanced automation for encryption key management and compliance tasks. This includes using machine learning to rotate keys, detect anomalous access to cryptographic modules, and streamline encryption deployment. Automation helps address the complexity of managing thousands of keys and certificates, an area highlighted in audits as a common weakness. The trend also ties into crypto-agility: organizations are investing in tools to automatically update or swap out encryption algorithms and keys when required (for example, in response to a compromise or an algorithm being deprecated).

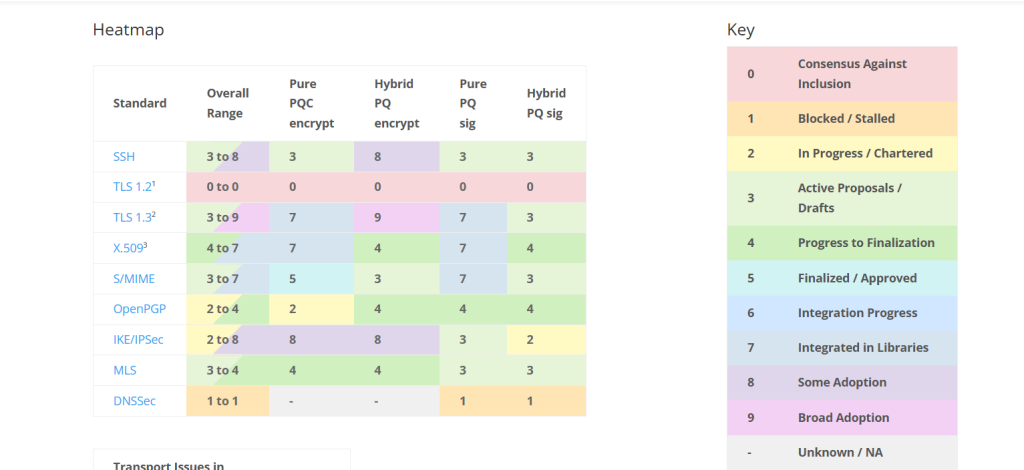

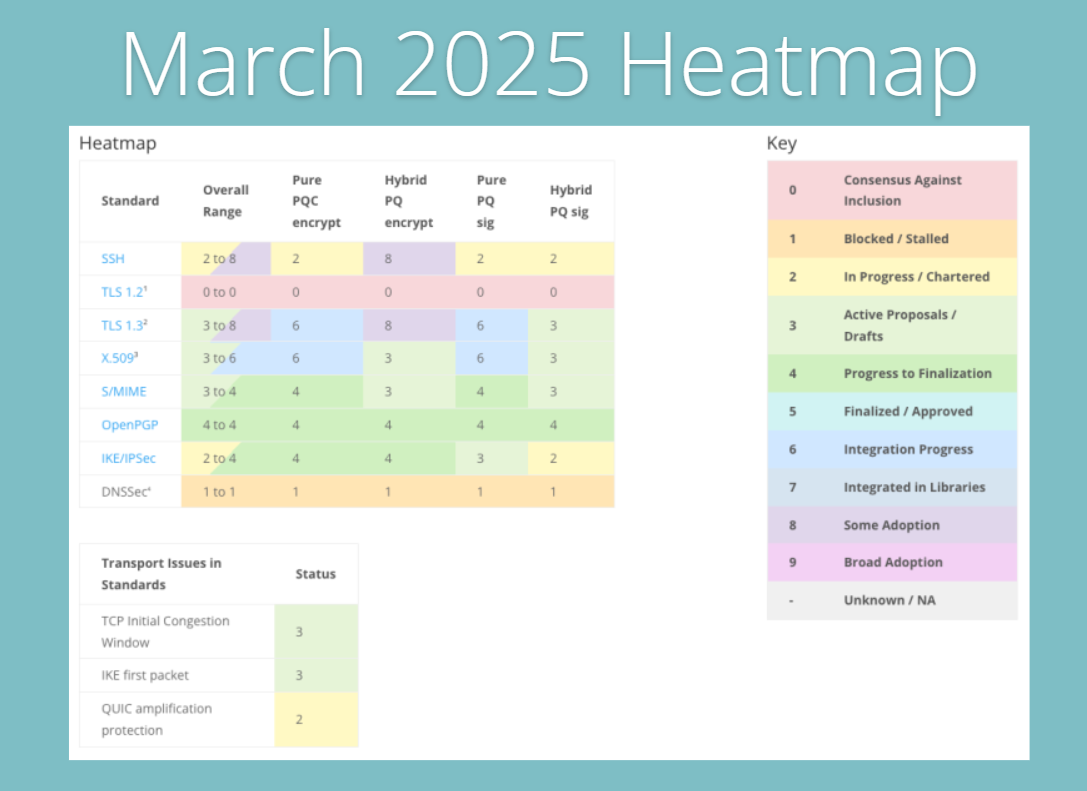

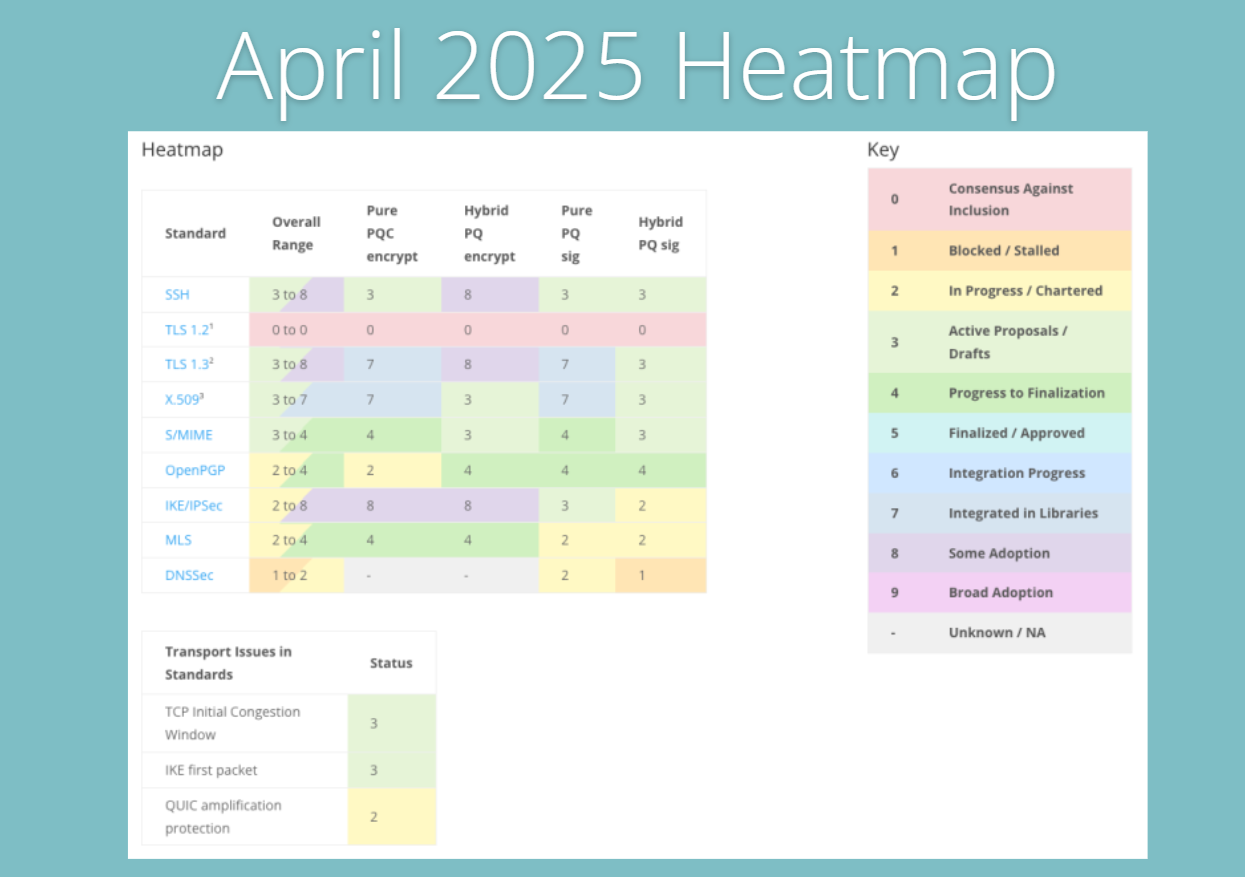

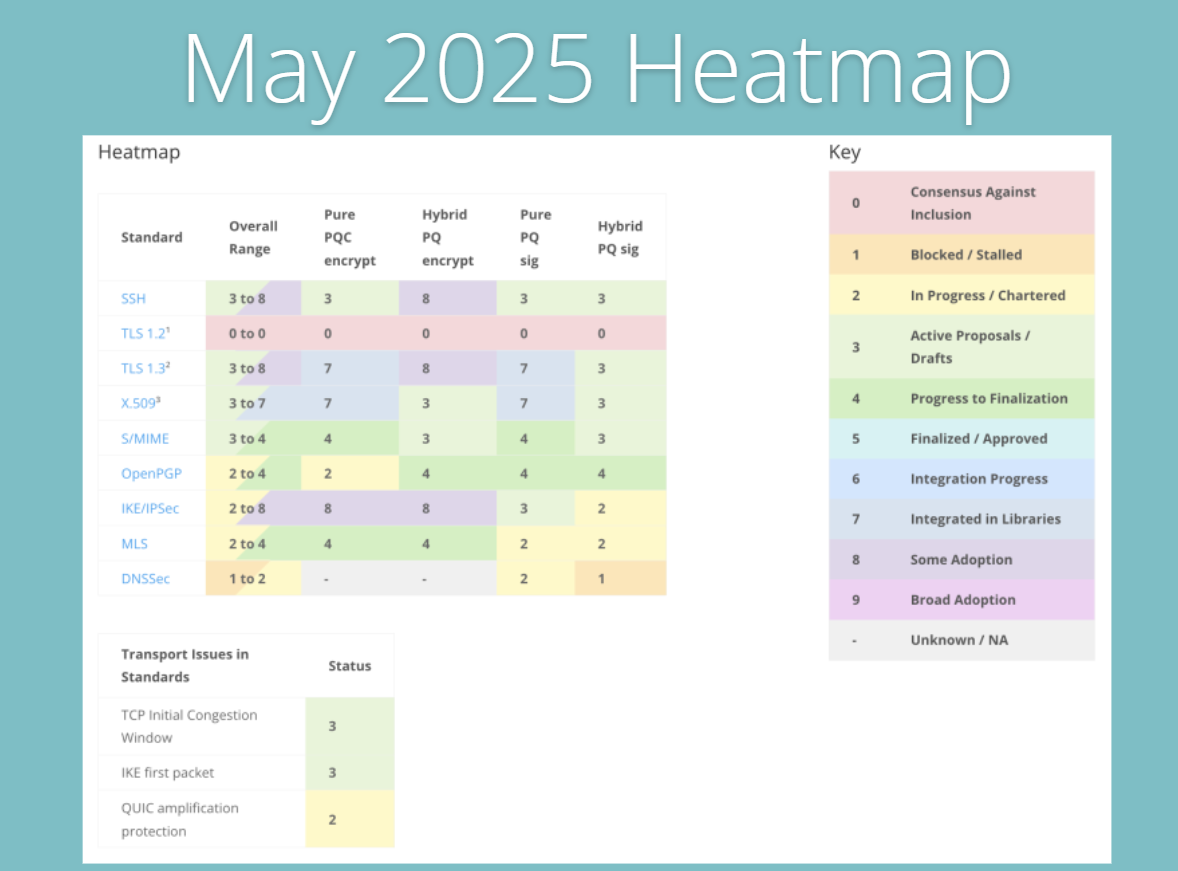

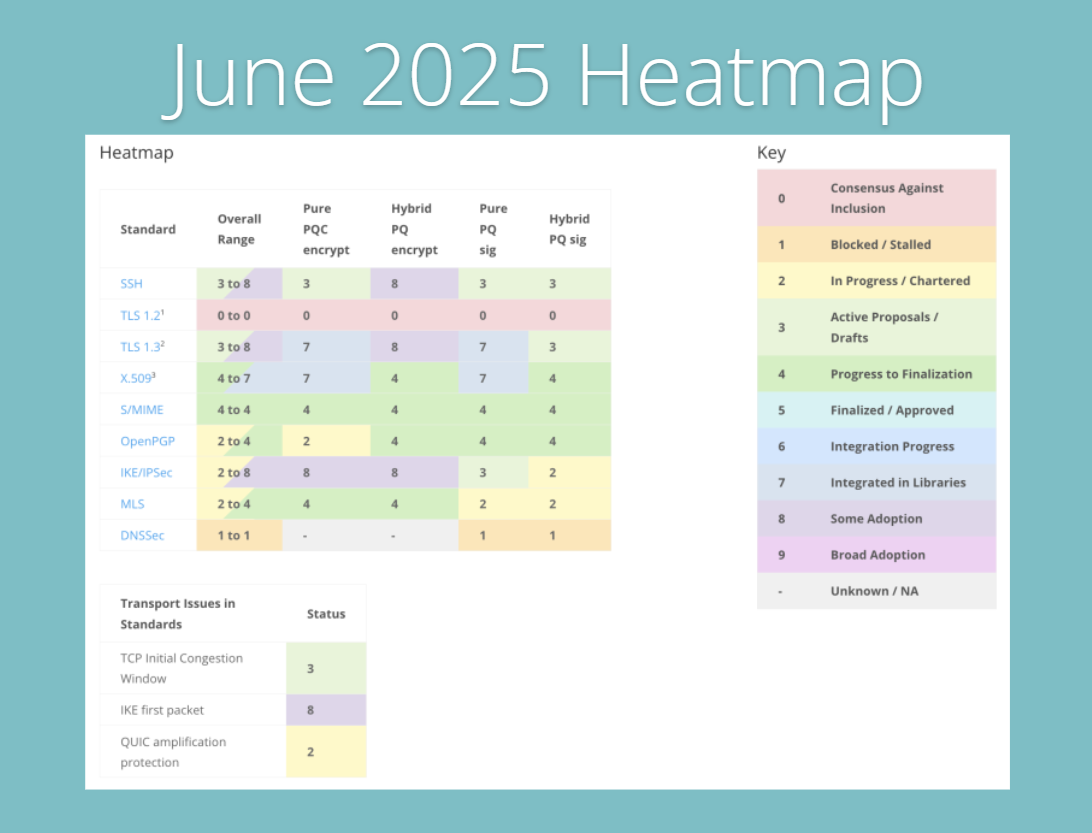

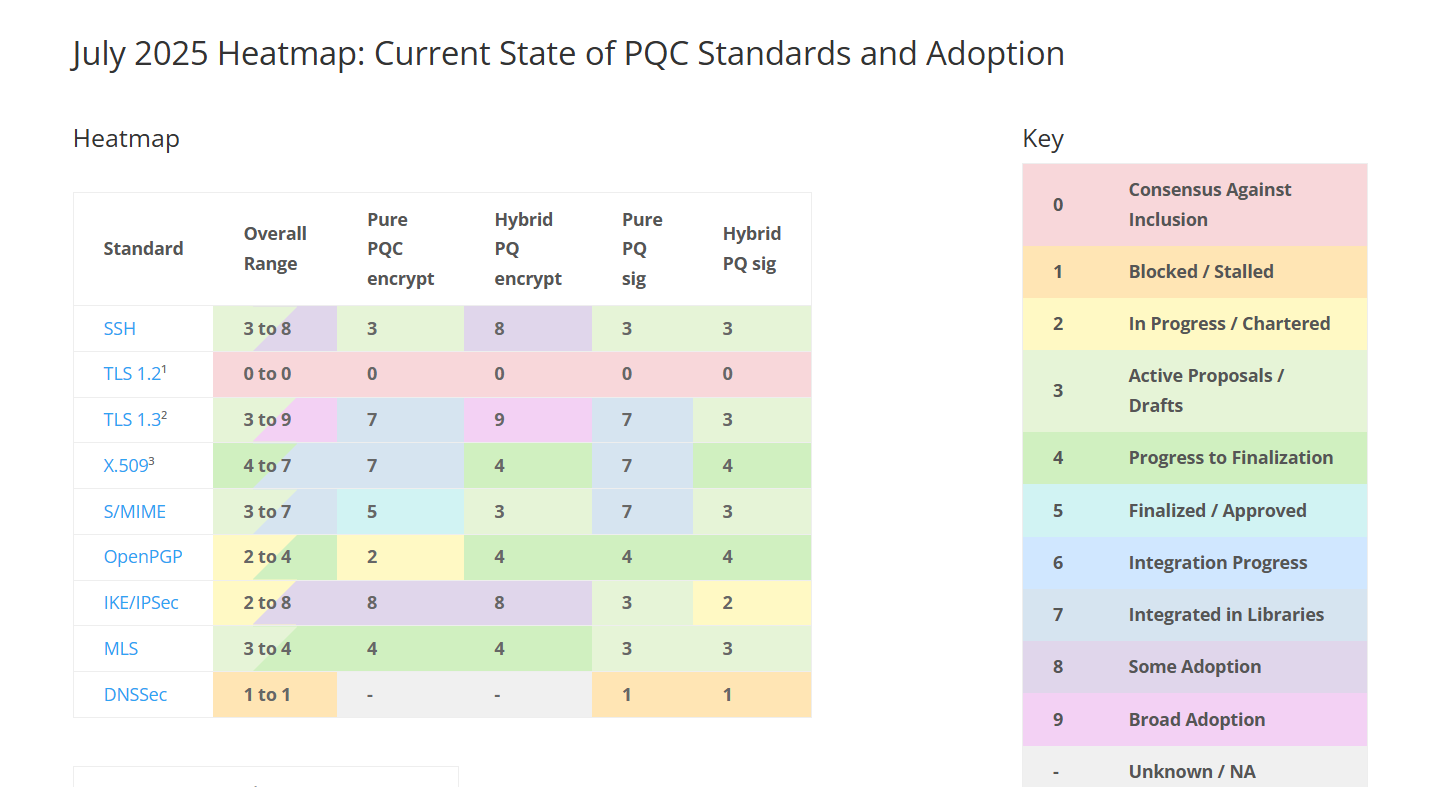

- Post-quantum cryptography (PQC) readiness: A prominent theme in 2025 is preparation for the quantum computing era. While powerful quantum computers capable of cracking today’s encryption (like RSA or ECC) are not here yet, the “harvest now, decrypt later” threat looms large. In the Thales 2025 Data Threat survey, 63% of security professionals flagged “future encryption compromise” by quantum attacks as a major concern, and 58% are worried about adversaries harvesting encrypted data now to decrypt once quantum capabilities arrive.

- Standards bodies are responding: NIST released new post-quantum encryption standards (e.g. CRYSTALS-Kyber) and a transition roadmap in 2024, recommending phasing out RSA/ECC by 2030 and ceasing their use entirely by 2035. Businesses are beginning to act. Over half of organizations (around 57–60%) report they are prototyping or evaluating PQC algorithms in 2025, and nearly half are assessing their current cryptographic inventory to identify where upgrades will be needed. Additionally, 45% say they are focusing on improving crypto-agility, ensuring they can swap algorithms easily when required. Regulators may soon ask about quantum readiness in risk assessments, so early movers aim to be prepared. In some jurisdictions, government agencies have mandates to inventory and transition crypto by set deadlines, signaling what may eventually trickle down to the private sector.

- Encryption and digital sovereignty: As data protection laws proliferate, encryption is seen as a tool to enable compliance with data residency and sovereignty requirements. The Thales report highlights a growing emphasis on “who controls data and encryption keys.” With 76% of enterprises using multiple public cloud providers, organizations are asserting control via techniques like BYOK (Bring Your Own Key) and HYOK (Hold Your Own Key) encryption, where the enterprise retains custody of encryption keys rather than the cloud provider. This ensures that even if data is stored overseas or in the cloud, the company can prevent unauthorized access (including from cloud admins or governments) by withholding keys.

In 2025, 42% of organizations identified strong encryption and key management as key enablers of digital sovereignty goals (e.g., ensuring compliance with EU data transfer rules). Expect to see more solutions that allow companies to localize keys, use hardware security modules (HSMs), or employ techniques like homomorphic encryption to comply with jurisdictional requirements while still leveraging global services.
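
The cryptographic inventory and crypto-agility steps described in the list above can be sketched roughly as follows. This is a minimal illustration rather than a definitive tool: the `CryptoAsset` record, the sample inventory, and the `QUANTUM_VULNERABLE` set are hypothetical, and the 2030 and 2035 cutoffs simply mirror the NIST transition timeline cited above.

```python
from dataclasses import dataclass
from datetime import date

# Assumption: public-key algorithms treated as quantum-vulnerable in this sketch.
QUANTUM_VULNERABLE = {"RSA", "ECDSA", "ECDH"}

# Cutoffs mirror the timeline cited in the text (phase out by 2030, cease by 2035).
DEPRECATE_AFTER = date(2030, 12, 31)
DISALLOW_AFTER = date(2035, 12, 31)


@dataclass
class CryptoAsset:
    """One hypothetical inventory entry: a system and the algorithm it relies on."""
    system: str
    algorithm: str
    planned_retirement: date  # when the system expects to stop using the algorithm


def migration_status(asset: CryptoAsset) -> str:
    """Classify an inventory entry against the transition timeline."""
    if asset.algorithm not in QUANTUM_VULNERABLE:
        return "ok"
    if asset.planned_retirement > DISALLOW_AFTER:
        return "blocker"   # still in use after the final cutoff
    if asset.planned_retirement > DEPRECATE_AFTER:
        return "at-risk"   # still in use during the deprecation window
    return "on-track"


# Hypothetical inventory for illustration only.
inventory = [
    CryptoAsset("vpn-gateway", "RSA", date(2036, 6, 1)),
    CryptoAsset("payments-api", "ECDSA", date(2032, 1, 1)),
    CryptoAsset("hr-portal", "RSA", date(2029, 3, 1)),
    CryptoAsset("backup-store", "AES-256", date(2040, 1, 1)),
]

report = {asset.system: migration_status(asset) for asset in inventory}
```

Keeping the algorithm set and cutoff dates as data rather than logic is the point of the sketch: when guidance changes, only the constants need updating.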

Regulatory Compliance for Cryptography

Regulations are increasingly prescriptive about cryptographic standards. For example, many laws and industry standards now specify using strong encryption algorithms (e.g., AES-256, TLS 1.3) and deprecating outdated protocols. The U.S. Federal Trade Commission’s Safeguards Rule and various state laws require encryption for personal information either by law or effectively as the standard of care. The Payment Card Industry Data Security Standard (PCI DSS) 4.0 (effective March 2025) mandates encryption of cardholder data both in transit and at rest with specific technical requirements.
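
As one illustration of deprecating outdated protocols, a client can be configured to refuse anything older than TLS 1.3. The sketch below uses Python's standard `ssl` module; the helper name `strict_client_context` is hypothetical, and it assumes the underlying OpenSSL build supports TLS 1.3. Whether a given regulation actually requires TLS 1.3 (as opposed to permitting TLS 1.2) depends on the applicable standard.

```python
import ssl


def strict_client_context() -> ssl.SSLContext:
    """Build a client-side TLS context that rejects anything below TLS 1.3."""
    # create_default_context() enables certificate and hostname verification.
    ctx = ssl.create_default_context()
    # Refuse TLS 1.2 and older during the handshake.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_3
    return ctx


ctx = strict_client_context()
```

A context built this way can be passed to standard-library clients that accept an `SSLContext`, so the protocol floor is set in one place instead of per connection.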

In healthcare, as noted, upcoming rules would remove flexibility and demand encryption and MFA outright for all systems handling patient data. Governments are also setting expectations: the U.S. White House issued directives for federal agencies to adopt quantum-resistant cryptography in the coming years, and the EU’s Cybersecurity Agency (ENISA) has guidance for state-of-the-art cryptographic controls under the NIS2 directive.

Organizations should track these developments closely. Noncompliance can be costly: beyond breach risk, failing to meet encryption requirements can draw fines or legal liability. On the upside, implementing strong encryption not only meets compliance obligations but can also reduce breach notification obligations (if data is properly encrypted, many laws exempt the incident from public disclosure).

For 2025 and beyond, encryption is both a compliance imperative and a business differentiator, demonstrating to customers that their data is safe. Firms should conduct crypto audits, ensure proper key management (with separation of duties and backups), and stay updated on cryptographic policy (for instance, any government restrictions on encryption use or algorithms allowed for certain data exports).

AI Governance and Algorithmic Transparency Regulations

Regulators tackle the AI revolution. Artificial Intelligence (AI) has seen explosive adoption, from machine learning in enterprise analytics to generative AI models transforming business processes. This wave has prompted urgent calls for governance to ensure AI is used responsibly, fairly, and safely. In 2025, we are witnessing the rollout of the world’s first comprehensive AI regulations.

The European Union’s AI Act was finalized as Regulation (EU) 2024/1689 and is being phased in over the next few years. The EU AI Act takes a risk-based approach: it bans certain “unacceptable risk” AI uses outright (e.g., social scoring, exploitative techniques) starting February 2025 for some provisions, and imposes strict obligations on “high-risk” AI systems (such as those used in critical infrastructure, employment decisions, credit scoring, medical devices, etc.).

High-risk AI providers and deployers will need to implement conformity assessments, transparency, human oversight, and robust risk management for their systems. For example, AI systems in recruitment or loan approvals must be tested for bias and their outcomes must be traceable. The Act also mandates transparency for AI in general: users must be informed when they are interacting with an AI (rather than a human), and AI-generated content (like deepfakes) should be labeled as such in many cases. Some requirements start in 2025 (e.g. certain transparency rules and voluntary codes of conduct), while full compliance for high-risk systems will be required by 2026 or 2027 after implementation periods.

The EU AI Act is groundbreaking: it is the first major AI rulebook and is expected to influence regulations globally, similar to how GDPR influenced privacy laws.

In the United States, there isn’t a single AI law yet, but regulators are using existing powers and guidelines to rein in AI. The FTC (Federal Trade Commission) has warned that it will treat biased or deceptive AI outputs as potential violations of consumer protection law (for instance, if an AI decision algorithm unfairly discriminates in lending, that could be an “unfair practice”). The U.S. Equal Employment Opportunity Commission (EEOC) has similarly cautioned employers that using AI hiring tools that have a disparate impact could violate discrimination laws.

We also see targeted legislation cropping up in the U.S. For example, the “TAKE IT DOWN” Act and other bills in Congress seek to criminalize certain malicious deepfakes (particularly sexually explicit deepfakes or those intended to incite violence). Another proposed bill would mandate that AI-generated content be watermarked or carry a disclosure. While these haven’t passed as of 2025, they indicate bipartisan concern about AI misuse.

At the state and local level, New York City implemented a first-of-its-kind law (effective 2023–2024) requiring companies to conduct bias audits on AI hiring tools and to notify candidates when AI or algorithms are used in hiring decisions. Other jurisdictions are considering similar algorithmic accountability measures, especially in employment and credit contexts.
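
To make the idea of a bias audit concrete, one common metric is the impact ratio: each group's selection rate divided by the highest group's selection rate. The sketch below is illustrative only. The group names and counts are invented, and the 0.8 threshold is the "four-fifths" rule of thumb from U.S. employment guidance, used here as an assumption rather than as the methodology any particular law prescribes.

```python
def selection_rates(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Map each group to its selection rate: selected / applicants."""
    return {group: selected / total for group, (selected, total) in outcomes.items()}


def impact_ratios(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Divide each group's selection rate by the highest group's rate."""
    rates = selection_rates(outcomes)
    best = max(rates.values())
    if best == 0:
        raise ValueError("no candidates were selected in any group")
    return {group: rate / best for group, rate in rates.items()}


# Hypothetical screening results: group -> (selected, applicants).
outcomes = {
    "group_a": (50, 100),
    "group_b": (30, 100),
    "group_c": (45, 100),
}

THRESHOLD = 0.8  # assumption: four-fifths rule of thumb

ratios = impact_ratios(outcomes)
flagged = sorted(group for group, ratio in ratios.items() if ratio < THRESHOLD)
```

In this made-up data, `group_b` has an impact ratio of about 0.6 and would be flagged for closer review; a real audit would also need to account for sample sizes and intersectional categories.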

Cybersecurity Disclosure and Incident Reporting Mandates

Mandated breach disclosure on the rise. One of the clearest compliance trends in cybersecurity is the move toward mandatory disclosure of cyber incidents. Gone are the days when companies could quietly handle breaches; regulators now demand timely reporting to authorities, investors, and affected individuals. In the United States, the Securities and Exchange Commission (SEC) implemented a landmark rule in 2023 (phased into 2024) that requires publicly traded companies to disclose material cybersecurity incidents to the market within 4 business days of determining an incident is material.

Starting in late 2023, companies must file an 8-K report detailing the nature and impact of a major cyber incident, such as a significant data breach or system outage that investors would consider important. The only allowed delay is if the U.S. Attorney General certifies that immediate disclosure would pose a grave risk to national security or public safety. By late 2025, even smaller reporting companies will be coming into compliance with these SEC requirements.

In addition, the SEC now requires periodic disclosures about a company’s cyber risk management, governance, and board oversight in annual 10-K reports. This includes identifying which board committee is responsible for cybersecurity. In fact, the percentage of S&P 500 boards that lacked a designated cybersecurity committee dropped from 15% in 2021 to just 5% by 2024 after these rules. In other words, 95% now explicitly assign cyber oversight at the board level, partly due to the SEC’s emphasis on governance accountability.

Fast incident reporting to regulators and stakeholders: Beyond the SEC’s investor-focused rule, governments are imposing breach reporting in critical sectors. The U.S. enacted the Cyber Incident Reporting for Critical Infrastructure Act (CIRCIA) in 2022, and by October 2025 the implementing regulations are expected to take effect. CIRCIA will require companies in designated critical infrastructure sectors (e.g. energy, healthcare, finance, transportation, and others vital to national security) to report substantial cyber incidents to the Department of Homeland Security’s Cybersecurity and Infrastructure Security Agency (CISA) within 72 hours, and ransomware payments within 24 hours.
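
The reporting windows described above (four business days for the SEC filing, 72 hours for a CIRCIA incident report, 24 hours for a ransomware payment report) lend themselves to a simple deadline calculator. The sketch below is a rough illustration: the function and key names are hypothetical, business days skip only weekends (public holidays are ignored), and the exact trigger for each clock should be confirmed against the final rule text.

```python
from datetime import datetime, timedelta
from typing import Optional


def add_business_days(start: datetime, days: int) -> datetime:
    """Advance by whole business days, skipping Saturdays and Sundays.

    Assumption: public holidays are ignored in this sketch.
    """
    current = start
    remaining = days
    while remaining > 0:
        current += timedelta(days=1)
        if current.weekday() < 5:  # Monday=0 ... Friday=4
            remaining -= 1
    return current


def reporting_deadlines(
    material_at: datetime,
    detected_at: datetime,
    ransom_paid_at: Optional[datetime] = None,
) -> dict[str, datetime]:
    """Compute illustrative deadlines from the three trigger timestamps."""
    deadlines = {
        # SEC: four business days after the materiality determination.
        "sec_form_8k": add_business_days(material_at, 4),
        # CIRCIA: 72 hours for a substantial incident.
        "circia_incident_report": detected_at + timedelta(hours=72),
    }
    if ransom_paid_at is not None:
        # CIRCIA: 24 hours after a ransomware payment.
        deadlines["circia_ransom_report"] = ransom_paid_at + timedelta(hours=24)
    return deadlines


# Hypothetical timeline: detected Monday, ransom paid Tuesday, deemed material Thursday.
deadlines = reporting_deadlines(
    material_at=datetime(2025, 3, 6, 9, 0),
    detected_at=datetime(2025, 3, 3, 8, 0),
    ransom_paid_at=datetime(2025, 3, 4, 12, 0),
)
```

Tracking the three triggers separately matters because the clocks start at different moments, so the earliest deadline is not always the one tied to the earliest event.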

New industry-specific disclosure rules are also emerging. The U.S. Department of Defense now requires defense contractors to promptly report cyber incidents that affect DoD information, or risk losing contracts. State regulators are joining in. For example, the New York Department of Financial Services (NYDFS) Cybersecurity Regulation requires regulated financial institutions to notify NYDFS of certain cyber events within 72 hours. In healthcare, HIPAA has long required breaches of health data affecting 500+ individuals to be reported to HHS and the public within 60 days; now, the trend is toward even faster reporting and greater transparency (some proposed federal legislation would tighten health breach notice timelines).

Public disclosure and communication: Another aspect is disclosure to the public and affected individuals. Privacy laws like GDPR mandate that if personal data is breached and there’s a high risk to individuals, they must be notified “without undue delay.” Many of the new state privacy laws in the U.S. also contain breach notification provisions or rely on existing state breach laws (which typically require notice to residents within 30 to 60 days of discovery, with some variations). The result is that companies suffer reputational damage if they don’t handle breach communications well, and delay or obfuscation can lead to regulatory fines on top of the incident itself.

For instance, in 2023, several companies were fined by European regulators for failing to notify customers of breaches in a timely manner. In 2025, with the SEC requiring disclosure of material incidents, we will see more companies making public statements via filings, which in turn could trigger investor lawsuits or drops in stock price if the incident is serious. This creates a new incentive to ensure strong cyber defenses: the market will directly penalize companies that get breached, in addition to regulatory penalties.

Cybersecurity as a governance and compliance issue: These disclosure requirements are forcing executive teams and boards to be directly involved in cybersecurity oversight. Since a major incident must be reported and will become public quickly, boards are asking: are we prepared to handle a breach? Compliance now means having not just technical safeguards but also clear incident response processes, internal escalation paths, and decision frameworks for what is “material”.

Interestingly, the SEC rule’s requirement to disclose how the board oversees cyber risk has led many companies to improve their governance structure (e.g., establishing a cyber risk committee or adding cyber to the audit/risk committee charter). Nearly 77% of large U.S. companies now say cybersecurity is explicitly an audit committee responsibility, up from just 25% in 2019, a dramatic shift in a few years. This means more directors are getting educated on cyber, and companies are conducting board-level cyber reviews and tabletop exercises.

Preparing for the new normal: To comply with these mandates, organizations should ensure they can detect incidents quickly (you can’t report what you don’t know about). This means robust monitoring and threat detection capabilities. They should establish criteria for what constitutes a reportable incident (material impact, certain compromised data, etc.) aligned with the laws that apply to them.

Having an incident response plan that includes notification steps is crucial: who contacts regulators, who drafts the public statements, and how to get accurate information while under pressure. Many companies are also securing cyber insurance, which often requires notification within strict timeframes to the insurer as well. The emphasis should be on transparency and accuracy; several regulators have indicated that while initial reports can be sparse, they expect timely follow-ups as more is learned.

Integrating Compliance with Broader Governance

Cybersecurity joins the ESG agenda. In 2025, cybersecurity and data protection are no longer seen as purely technical issues; they are now squarely part of the Environmental, Social, and Governance (ESG) considerations for organizations. ESG compliance traditionally focuses on environmental sustainability, social responsibility, and corporate governance ethics. Cybersecurity has forced its way into this conversation under the “Governance” pillar (and arguably the “Social” pillar, when considering privacy as a consumer right). Investors, rating agencies, and regulators are evaluating how companies manage cyber risks as an indicator of good governance.

In a recent survey, nearly 4 out of 5 investors (79%) said boards of directors should demonstrate expertise in cybersecurity (as well as climate and other emerging risks) and detail their efforts to mitigate those risks. In other words, stakeholders expect top leadership to treat cyber risk on par with financial or strategic risks. This is a big shift: a decade ago, cybersecurity rarely made it into annual reports or investor discussions, whereas now investors recognize that a major breach can destroy stock value and stakeholder confidence overnight.

Regulatory drivers on the ESG side: New regulations in the ESG realm implicitly incorporate cyber and privacy. The EU’s Corporate Sustainability Reporting Directive (CSRD), effective 2025 for large companies, requires extensive disclosures on governance and risk management, which would include how companies handle risks like cybersecurity to ensure business continuity and resilience. The European Sustainability Reporting Standards (ESRS) under CSRD explicitly ask companies to report on “business conduct” matters; data security and privacy practices can fall here as part of social and governance factors.

Furthermore, regulations such as the EU Digital Operational Resilience Act (DORA) for financial entities, while primarily about cyber/operational risk, also connect to ESG by emphasizing operational resilience and stakeholder impacts of disruptions (resilience is increasingly viewed as a sustainability issue: a business can’t be sustainable if it’s constantly breached or disrupted).

Meanwhile, SEC climate disclosure rules (though currently on hold) have made boards aware that comprehensive risk disclosure is becoming the norm; many believe cyber risk disclosure could be next on the SEC’s agenda beyond the incident reporting rule. Even without explicit rulemaking, the SEC’s existing guidance calls for material cyber risks to be disclosed in annual reports if they could impact investors.

From a social responsibility perspective, safeguarding customer data is an ESG issue. Large data breaches can harm customers (identity theft, privacy invasion), so companies are being evaluated on how well they protect data, much like they’d be evaluated on product safety. Rating agencies that provide ESG scores often include data privacy and security as a subfactor in the “Social” or “Governance” score.

For instance, MSCI and Sustainalytics ESG ratings incorporate whether a company has had recent data breaches or fines for privacy violations, and what policies it has in place for information security. Thus, good cybersecurity is rewarded with better ESG scores, and conversely, a breach or compliance failure can hurt an ESG rating.

In summary, the trend for 2025 is that cybersecurity compliance is not siloed; it is part of the larger ESG narrative. Organizations that excel in this area treat cybersecurity as a core element of corporate governance, openly report on their security posture and improvements, and frame data protection as central to their social responsibility. This not only meets emerging compliance demands but also appeals to investors and customers who are increasingly valuing digital trust as an asset.

Supply Chain and Third-Party Risk Management Compliance

Third-party cyber risk under scrutiny: High-profile incidents in recent years (from the SolarWinds backdoor to breaches via HVAC contractors) have taught regulators that a company is only as secure as its weakest link, often a supplier or service provider. In 2025, compliance requirements are zeroing in on supply chain cybersecurity. Organizations are expected to manage risks not just within their walls, but across a web of vendors, cloud providers, software suppliers, and partners.

According to a global survey of CISOs, a whopping 88% of organizations are worried about cyber risks stemming from their supply chain, and with good reason: over 70% had experienced a significant cybersecurity incident originating from a third party in the past year. These can include breaches caused by compromised software updates, vendor credentials being stolen, or data being stolen from a less secure partner.

Despite the concern, the same survey revealed a dangerous gap: fewer than half of organizations monitor even 50% of their suppliers for cybersecurity issues. In other words, visibility into third-party risk is poor. Regulators see this gap and are responding by mandating more rigorous third-party risk management (TPRM) practices.

Regulations mandating supply chain security: The EU’s NIS2 Directive is a prime example of codifying supply chain security obligations. NIS2, which as discussed applies to a broad range of critical and important entities, makes comprehensive supply chain risk management mandatory (no longer just guidance). Companies under NIS2 must identify and assess cyber risks associated with each supplier and digital service provider, implement appropriate security controls based on those assessments, and continuously monitor supplier risks. This effectively forces organizations to have a vendor security assessment program.

Additionally, NIS2 emphasizes supplier accountability: it expects organizations to flow down cybersecurity requirements to suppliers via contracts, set clear security expectations, and conduct regular audits of suppliers. In fact, Recital 85 of NIS2 suggests that major suppliers could be held jointly responsible if their negligence leads to incidents. This is a significant development. The era of finger-pointing is ending, and both customers and suppliers may share liability for security lapses. NIS2 also requires coordination with suppliers during incident response, meaning you must have communication channels ready for when an incident involves a third party.

In the financial sector, the EU’s Digital Operational Resilience Act (DORA), effective January 2025, similarly requires banks and financial entities to manage ICT third-party risk. DORA obliges firms to inventory their critical ICT providers, assess risks of outsourcing, and ensure contractual provisions for security and incident reporting. It also gives regulators power to oversee critical tech providers (e.g., cloud providers serving banks might be designated and directly supervised). The UK and other jurisdictions are considering similar rules, where cloud and technology providers for banks could be subject to regulation due to systemic risk concerns.

Software and hardware supply chain rules: Another dimension is product security. Laws like the EU Cyber Resilience Act (CRA) (adopted in 2024, with enforcement by 2027) will require that manufacturers of digital products (software, IoT devices, etc.) build in cybersecurity and provide vulnerability disclosure mechanisms. While CRA’s full effect is a couple of years out, its presence influences compliance strategy now, especially for any company that sells tech in Europe.

In essence, it treats insecure products as noncompliant products. In the U.S., new IoT cybersecurity labeling (“Cyber Trust Mark”) is being launched so consumers can tell which devices meet certain security criteria. Governments have also banned or restricted certain high-risk vendors from supply chains for security reasons (for example, bans on Chinese telecom equipment like Huawei in critical networks). Compliance now entails ensuring none of your suppliers are on prohibited lists and that you aren’t using components with known security issues.

Third-party risk management (TPRM) best practices are becoming mandatory. Many organizations have implemented TPRM programs involving questionnaires, audits, and contract standards. Now these are being cemented into compliance obligations. As noted, NIS2 and others expect to see security requirements in contracts, meaning procurement and legal teams must include clauses for things like data handling, incident notice (e.g., requiring a vendor to notify you within X hours if they have a breach), the right to audit, and possibly minimum security certifications (like ISO 27001 or SOC 2).

Government and industry standards increasingly call for continuous monitoring of vendor security, not just an annual check-the-box exercise. Given that only 26% of organizations currently integrate vendor incident response, this is a growth area. Automated tools that scan suppliers’ external cyber posture (using ratings services, etc.) are being adopted to meet the continuous monitoring expectation.

Implications for compliance programs: Companies should enhance their third-party risk assessments before regulators force the issue. This means cataloging all critical vendors and partners, classifying them by risk tier (e.g., who has access to sensitive data or systems), and performing due diligence on each. Due diligence can range from sending a detailed security questionnaire, to reviewing their audit reports, to onsite assessments for the most critical ones.
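The tiering step described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a prescribed methodology: the `Vendor` fields, the additive scoring in `risk_tier`, and the mapping in `DUE_DILIGENCE` are all hypothetical choices made for the example, and a real program would likely weigh many more factors.

```python
from dataclasses import dataclass


@dataclass
class Vendor:
    """Hypothetical vendor record; the three flags are illustrative risk factors."""
    name: str
    handles_sensitive_data: bool
    has_system_access: bool
    business_critical: bool


# Assumed mapping from tier to due-diligence depth, following the range
# described in the text (questionnaire -> audit report review -> onsite).
DUE_DILIGENCE = {
    "high": "onsite assessment plus audit report review",
    "medium": "audit report review",
    "low": "security questionnaire",
}


def risk_tier(vendor: Vendor) -> str:
    """Classify a vendor by counting how many risk factors apply."""
    score = sum([
        vendor.handles_sensitive_data,
        vendor.has_system_access,
        vendor.business_critical,
    ])
    if score >= 2:
        return "high"
    if score == 1:
        return "medium"
    return "low"


def plan_due_diligence(vendors):
    """Return {vendor name: (tier, due-diligence activity)}."""
    plan = {}
    for vendor in vendors:
        tier = risk_tier(vendor)
        plan[vendor.name] = (tier, DUE_DILIGENCE[tier])
    return plan


if __name__ == "__main__":
    inventory = [
        Vendor("CloudHost", True, True, True),
        Vendor("PayrollCo", True, False, False),
        Vendor("PrintShop", False, False, False),
    ]
    for name, (tier, activity) in plan_due_diligence(inventory).items():
        print(f"{name}: {tier} -> {activity}")
```

Keeping the tier logic in one small function makes it easy to show an auditor exactly how a vendor ended up in a given tier.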

Many firms are now requiring vendors to have security certifications or assessments, e.g., a cloud provider with a SOC 2 Type II report or an ISO 27001 certification, to provide assurance. It’s also prudent to monitor news and threat intel for breaches at your suppliers, since sometimes you learn of an issue from the media before the vendor notifies you.

Another key step is updating contracts: ensure new and renewal contracts include cybersecurity clauses. For instance, a clause might state that the vendor maintains a minimum security program, complies with relevant standards and laws, notifies you of incidents within a set window (e.g., 48 hours), cooperates in investigations, and perhaps provides indemnity for security incidents. These contractual measures not only move you toward compliance (and align with laws like NIS2) but also protect you if something goes wrong.

Identity and Access Management and Zero Trust Mandates

Identity and access management (IAM) as a compliance cornerstone. Many cybersecurity frameworks and regulations now prioritize IAM controls like never before. The reasoning is simple: most breaches involve compromised credentials or abuse of excessive access. In 2025, virtually every major cyber regulation or standard includes requirements for strong authentication and access governance.

For example, the EU NIS2 Directive explicitly mandates the use of multifactor authentication (MFA) “where appropriate” as a baseline control for affected entities. It also calls for strict access controls and periodic review of accounts.

Likewise, proposed HIPAA Security Rule changes in the U.S. health sector will make MFA mandatory for any access to patient data systems, and require formal identity proofing and authorization procedures for healthcare workforce members.

These moves mirror what has already been best practice: Multifactor authentication is now effectively required in many industries, e.g., the Payment Card Industry (PCI) standards require MFA for administrators and remote access, the NYDFS bank cyber rule requires MFA for any access to sensitive data, and cyber insurers often insist on MFA as a condition of coverage. Regulators have explicitly stated that single-factor (password-only) logins for privileged or sensitive access are no longer acceptable.

Specific mandates for IAM controls

A clear trend is turning what used to be recommendations into requirements:

- Multifactor Authentication (MFA): As noted, MFA is required or strongly implied by many regulations now. For instance, NIS2’s 10th baseline measure specifically lists using multifactor or continuous authentication solutions for access to sensitive systems. The proposed HIPAA update would require MFA for administrators and remote access to health data systems. The U.S. President’s executive order in 2021 required MFA across all federal systems, and many state laws (like recent insurance data security laws based on the NAIC model) require MFA for access to nonpublic information. In practice, regulators expect MFA to be everywhere: one widely cited industry analysis found that MFA can prevent 99.9% of account compromise attacks, a statistic often repeated by security agencies. So, compliance auditors now frequently ask, “Do you have MFA enabled for all users, especially privileged and remote access?”

- Least privilege and access reviews: Regulations also demand strict access governance. NIS2, for example, requires policies for access controls and that companies maintain an overview of all assets and ensure proper use and handling of sensitive data with role-based controls. Many standards require regular user access reviews, e.g., checking quarterly that ex-employees are removed and current users have appropriate rights. In the financial industry, regulators like FFIEC emphasize role-based access controls and immediate removal of access when no longer needed. We also see requirements for privileged access management (PAM), ensuring that powerful accounts are closely managed (unique credentials, MFA, and monitoring of admin sessions).

- Network segmentation and device trust: Zero Trust is not just about user identity but also device and network. Compliance is reflecting this by asking for segmentation of networks to limit lateral movement. For instance, the updated HIPAA proposal explicitly calls for network segmentation and periodic network testing as requirements, effectively urging healthcare entities to implement Zero Trust-style network controls (splitting clinical devices, corporate IT, etc., and controlling communications between them). Other critical infrastructure guidelines, such as U.S. electric power NERC CIP standards, require segmentation between control systems and business networks. Additionally, ensuring only trusted devices connect (through device certificates or verification) is becoming part of the compliance checklist in higher-security environments.

- Government and industry frameworks pushing Zero Trust: Even if not codified in law, many organizations are pursuing Zero Trust under the influence of government frameworks. For example, CISA’s Zero Trust Maturity Model provides a roadmap that some sectors are adopting as a de facto standard. The U.S. Department of Defense released a Zero Trust Strategy in 2022, aiming for its networks to meet advanced Zero Trust capabilities by 2027; this trickles down to defense contractors, who will need to align with those practices. Industry groups like the Cloud Security Alliance have Zero Trust guidelines that can shape compliance audits for cloud services. The general expectation emerging is “default deny”: assume every access request could be malicious until proven otherwise.

- Identity governance and compliance: Regulators also care about how organizations manage identities over their lifecycle. For example, ensuring onboarding and offboarding processes are in place (so accounts are created with correct roles and promptly deactivated upon employee exit) is often audited. Some privacy regulations intersect with IAM too, e.g., GDPR’s data minimization and security principles mean users should only access data they need, and logs or monitoring of access might be needed to prove compliance. Identity is at the center of both security and compliance: with 80% of data breaches involving compromised or stolen credentials, addressing identity issues mitigates compliance risk across the board.
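The access-governance checks in the list above (removing departed users, MFA on privileged accounts, periodic reviews) lend themselves to simple automation. The following is a rough sketch, assuming a hypothetical `Account` record exported from an identity system; the field names and the 90-day review interval are illustrative assumptions rather than values taken from any regulation.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Account:
    """Hypothetical export row from an identity system."""
    username: str
    privileged: bool
    mfa_enabled: bool
    last_reviewed: date
    termination_date: Optional[date] = None  # set once the owner has left


def review_findings(accounts, today, max_review_age_days=90):
    """Return (username, issue) pairs an access review should follow up on.

    max_review_age_days=90 approximates a quarterly review cycle (assumption).
    """
    findings = []
    for account in accounts:
        # Offboarding check: the account outlived its owner's employment.
        if account.termination_date is not None and account.termination_date <= today:
            findings.append((account.username, "active account for departed user"))
        # Privileged access without a second factor.
        if account.privileged and not account.mfa_enabled:
            findings.append((account.username, "privileged account without MFA"))
        # Periodic review has lapsed.
        if (today - account.last_reviewed).days > max_review_age_days:
            findings.append((account.username, "access review overdue"))
    return findings


if __name__ == "__main__":
    sample = [
        Account("alice", True, True, date(2025, 5, 1)),
        Account("bob", True, False, date(2025, 6, 1)),
        Account("carol", False, True, date(2025, 1, 15), date(2025, 3, 1)),
    ]
    for username, issue in review_findings(sample, today=date(2025, 6, 30)):
        print(f"{username}: {issue}")
```

The list of findings doubles as review evidence: storing each run's output gives auditors a dated record that the review took place.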

Impacts and actions

For compliance officers and CISOs, aligning with these IAM and Zero Trust mandates means:

- Implement MFA wherever feasible: This is step one and likely the highest-ROI security control. If there are legacy systems that can’t support MFA, plan to phase them out or put compensating controls in place. Document your MFA coverage, because auditors will ask.

- Enforce least privilege rigorously: Implement role-based access control (RBAC) where possible and keep a tight process for privilege elevation (grant only what’s needed, temporarily if possible). Use identity governance tools to automate access reviews and certifications; this both improves security and generates evidence for compliance. Data shows organizations with robust RBAC are 50% less likely to experience a major incident due to misuse of credentials.

- Adopt elements of Zero Trust Architecture: This includes segmenting networks (don’t have flat networks where an intruder can reach everything), verifying device health (through endpoint security enforcement), and deploying continuous monitoring of user behavior (UEBA) to detect if a legitimate account is acting suspiciously. While not all regulations explicitly name Zero Trust, implementing it will inherently satisfy many specific controls that are required.

- User training and culture: People are part of IAM too. Train users on good password hygiene and phishing awareness, since MFA isn’t foolproof (e.g., MFA fatigue attacks). Many regulations (like NIS2 and others) require cybersecurity awareness training for staff, and emphasizing identity-related threats (phishing, social engineering) in those trainings helps meet compliance and reduce incidents.
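Since the first action item above asks you to document MFA coverage, here is one way such a report might be produced. It is a sketch only: the `System` record and the three buckets (covered, compensated, gaps) are assumptions for illustration, and the input would come from whatever asset inventory you actually maintain.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class System:
    """Hypothetical inventory entry for one application or platform."""
    name: str
    mfa_enforced: bool
    compensating_control: Optional[str] = None  # e.g. an MFA-protected jump host


def mfa_coverage(systems):
    """Summarize MFA coverage and separate documented exceptions from true gaps."""
    covered = [s.name for s in systems if s.mfa_enforced]
    compensated = [
        s.name for s in systems if not s.mfa_enforced and s.compensating_control
    ]
    gaps = [
        s.name for s in systems if not s.mfa_enforced and not s.compensating_control
    ]
    total = len(systems)
    coverage_pct = round(100 * len(covered) / total, 1) if total else 0.0
    return {
        "coverage_pct": coverage_pct,
        "covered": covered,
        "compensated": compensated,
        "gaps": gaps,
    }


if __name__ == "__main__":
    inventory = [
        System("vpn", True),
        System("email", True),
        System("legacy_erp", False, "access only via MFA-protected jump host"),
        System("lab_hmi", False),
    ]
    print(mfa_coverage(inventory))
```

Separating compensated systems from uncovered ones mirrors the guidance above: legacy systems are acceptable only while a compensating control is documented, and anything in `gaps` needs a remediation plan.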

In summary, 2025’s compliance environment essentially demands that organizations prove they know who is accessing what, when, why, and how at all times. This identity-centric approach is the crux of Zero Trust. Forward-looking organizations are not treating Zero Trust as just a buzzword, but translating it into concrete policies and controls that auditors can verify, from MFA dashboards to access review records and microsegmentation diagrams.

In doing so, they not only comply with current rules but are better prepared for the future, where even more stringent access control requirements are likely to appear (for instance, we might see insurance regulators or others codify Zero Trust explicitly).

Tailored Advisory Services

We assess, strategize & implement encryption strategies and solutions customized to your requirements.

Industry-Specific Compliance Challenges

While many compliance trends are broad-based, certain challenges are unique to specific industries. In 2025, industries like financial services, healthcare, and critical infrastructure face tailored regulations and threats that require specialized attention. Below, we examine a few industry-specific landscapes:

Financial Services

Financial institutions have long been heavily regulated, but now cybersecurity and technology risk are at the forefront of banking compliance. Banks, insurers, and investment firms must not only protect sensitive customer data (to comply with privacy laws and GLBA in the U.S.) but also ensure the resilience of critical financial systems against cyberattacks.

Digital Operational Resilience in the EU: The Digital Operational Resilience Act (DORA), taking full effect in the EU in January 2025, is a game changer for banks and finance companies. DORA requires firms to implement a comprehensive ICT risk management framework, conduct regular stress tests and scenario testing of their cyber resilience, and maintain business continuity plans that account for cyber incidents. It also mandates incident reporting to regulators within tight deadlines and formalizes oversight of third-party tech providers (like cloud services that many banks rely on).

Essentially, DORA bundles many best practices (which banks may have followed under guidance) into law, complete with penalties for non-compliance. A bank in Europe will need to show regulators evidence of things like annual penetration tests, network recovery drills, and governance where the board reviews cyber risks regularly. This raises the bar significantly and is likely a model that could be copied by regulators in other jurisdictions.

Cyber disclosure and governance: Financial firms globally are under pressure to be transparent about cyber risks. In the U.S., beyond the SEC rules for public companies, banking regulators expect notification of major incidents (as noted, within 36 hours). If a bank’s ATM network goes down due to a hack, regulators want to know right away.
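Tight reporting windows like the 36-hour one above are easy to miscount under pressure, so some teams compute deadlines programmatically in their incident tooling. The sketch below is illustrative only: the dictionary keys are made-up labels, the 48-hour entry is the example vendor clause used earlier in this article, and which event starts the clock depends on the specific rule or contract.

```python
from datetime import datetime, timedelta, timezone

# Illustrative windows in hours; the keys are hypothetical labels.
NOTIFICATION_WINDOWS_HOURS = {
    "banking_regulator": 36,  # window cited in the text above
    "vendor_contract": 48,    # example contractual window from earlier in the article
}


def notification_deadline(clock_start: datetime, obligation: str) -> datetime:
    """Return the latest time a notification can be sent for an obligation.

    clock_start is whatever event starts the clock under the applicable rule
    (an assumption to confirm with counsel); it must be timezone-aware.
    """
    if clock_start.tzinfo is None:
        raise ValueError("clock_start must be timezone-aware")
    window = timedelta(hours=NOTIFICATION_WINDOWS_HOURS[obligation])
    return clock_start + window


def hours_remaining(clock_start: datetime, obligation: str, now: datetime) -> float:
    """Hours left before the deadline (negative once the deadline has passed)."""
    remaining = notification_deadline(clock_start, obligation) - now
    return remaining.total_seconds() / 3600


if __name__ == "__main__":
    start = datetime(2025, 3, 3, 22, 0, tzinfo=timezone.utc)
    now = datetime(2025, 3, 4, 10, 0, tzinfo=timezone.utc)
    print(notification_deadline(start, "banking_regulator").isoformat())
    print(hours_remaining(start, "banking_regulator", now))
```

Requiring timezone-aware timestamps is a deliberate choice here: incident timelines often span regions, and naive datetimes are a common source of off-by-hours errors.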

The financial sector has also pioneered cyber info sharing (through FS-ISAC, etc.), and now compliance frameworks encourage participation in such information sharing as part of a strong security posture. Boards of financial institutions are expected to be particularly engaged; the New York Fed has even run workshops on cyber for bank directors.

Antifraud and data security: Financial services face unique threats like account takeovers and payment fraud, so compliance intersects with consumer protection. Regulations like the EU’s PSD2 (Second Payment Services Directive) require Strong Customer Authentication for online payments, which is basically MFA for banking customers.

In the U.S., financial institutions must meet GLBA safeguards requirements: non-bank firms fall under the FTC Safeguards Rule (with tightened requirements taking effect in 2023), while banks follow parallel guidelines from their banking regulators. These rules dictate specific security controls for customer data, including encryption and access controls. There’s also an expectation to monitor transactions for fraud (AML/KYC laws), which now often involves cybersecurity teams because cyber fraud (phishing leading to wire fraud, etc.) is rampant.

Payments and PCI DSS 4.0: Any entity that handles card payments, whether a financial firm or a retailer, must comply with the Payment Card Industry Data Security Standard version 4.0 by March 2025. PCI DSS 4.0 introduces new requirements, like more frequent phishing training, stricter MFA, more robust access logging, and an explicit focus on continuous compliance rather than a one-time assessment. This is not a law but a contractual requirement heavily enforced by payment networks. For banks issuing credit cards or merchants processing them, failure to comply can mean fines or even loss of the ability to process card payments.

Financial firms should ensure they meet the highest common denominator of requirements. This often means adopting frameworks like NIST or ISO 27001 companywide, then overlaying specific regs. Strong encryption of financial data, continuous monitoring (many banks have 24/7 SOCs), third-party audits, and board reporting are must-haves. Given the personal liability in some cases (e.g., NIS2 could hold management liable, and in the UK, the senior managers’ regime could arguably be extended to tech risks), senior management involvement is key.

Ultimately, regulators in finance care about the stability of the financial system: a major cyber incident could cause loss of confidence or economic disruption, so they are treating cyber risk on par with financial solvency. Compliance teams need to treat cyber controls with the same rigor as capital adequacy controls.

Healthcare

Healthcare organizations face the dual challenge of protecting highly sensitive personal health information and ensuring patient safety in an increasingly digital, connected care environment. Compliance requirements in this sector are tightening after years in which healthcare lagged other industries in security maturity.

In the U.S., the Health Insurance Portability and Accountability Act (HIPAA) has long set the baseline for health data privacy and security. However, its Security Rule (dating back to 2005) gave covered entities some flexibility with “addressable” controls. Now regulators are moving to eliminate that flexibility in light of current threats.

As mentioned, HHS proposed in January 2025 a rule that would make all previously addressable specifications required, making encryption, multifactor authentication, risk analysis, and incident response explicitly mandatory, among other changes. The proposal also introduces modern requirements: annual technical security assessments, asset inventory maintenance, network mapping, and documented recovery plans.

This is a big change: many smaller clinics or business associates who were doing minimal compliance will have to step up significantly (e.g., if a clinic hadn’t encrypted its databases or used MFA for EHR access, that would no longer fly). HHS is also pushing for adoption of cybersecurity practices under a 2021 law (HR 7898) that gives breach investigation leniency to entities that follow recognized security practices (like the NIST Cybersecurity Framework). So effectively, healthcare providers are incentivized to adopt robust frameworks or face harsher penalties after incidents.

Medical device and IoT security: Hospitals are filled with connected devices (imaging machines, IV pumps, etc.). Recognizing the risk, the U.S. FDA now requires cybersecurity disclosures for new medical devices in the approval process (as of 2023, under provisions that originated in the proposed PATCH Act and were enacted in that year’s omnibus appropriations law). Device makers must provide an SBOM and commit to patches.

For hospital compliance, this means maintaining an inventory of devices and their software, applying patches quickly, and segmenting devices on networks. The Joint Commission (a body that accredits hospitals) also introduced new standards in 2022-2023 around technology risk management, which hospitals must meet to stay accredited. In the EU, the MDR (Medical Device Regulation) includes essential requirements for cybersecurity of devices as well. Healthcare delivery organizations thus need to include device security in their overall compliance.

Privacy and patient rights: Privacy compliance remains a major concern. GDPR applies to EU patient data, which affects any multinational pharma or research organization. Interoperability initiatives (like the U.S. Cures Act, which gives patients more access to data via APIs) introduce new surface area for breaches, so compliance now also means vetting the third-party apps that connect through those APIs.

Healthcare entities often must navigate not just HIPAA but 42 CFR Part 2 (for substance abuse records confidentiality), state laws like California’s Confidentiality of Medical Information Act (CMIA), and now the new state privacy laws which often don’t exempt all health data (if an entity isn’t fully covered by HIPAA, they might have state obligations). Thus, healthcare compliance officers are dealing with a complex matrix of privacy rules.

Ensuring ePHI security in practice: Common compliance gaps in healthcare have been basic: unpatched systems, old Windows machines, shared passwords. Regulators are done being lenient. The Office for Civil Rights (OCR) has levied multimillion-dollar fines for breaches and will likely increase enforcement as new rules come in. One trend is the enforcement of risk analysis. OCR often fines entities not because a breach occurred, but because their risk assessment, which is a HIPAA requirement, was insufficient or not updated. Going forward, a thorough annual (or continuous) risk assessment is a must-do.

Healthcare organizations should update their compliance programs to be much more prescriptive. If the HIPAA rule gets finalized, they’ll need to tick every box (encryption of all PHI at rest and in transit, MFA on all accounts, unique IDs for users, emergency mode ops plans tested annually, etc.). In preparation, many are aligning with the NIST Cybersecurity Framework or adopting HITRUST certification (a common framework in healthcare that combines multiple standards).

Employee training is key: phishing is rampant, healthcare workers are busy and not always cyber-conscious, and breaches via insiders or stolen credentials are common. Compliance therefore includes robust training regimens (often required by law too).

Overall, healthcare’s main challenge is catching up to other sectors in security maturity under heavy regulatory prodding, all while juggling life-and-death service delivery and often tight budgets. The trend is that compliance frameworks in health will increasingly resemble those in finance in strictness, given the criticality of the service.

Critical Infrastructure

Critical infrastructure sectors, such as energy (electricity, oil & gas), water utilities, transportation (airlines, rail, ports), telecommunications, and others, are facing perhaps the most intense regulatory focus in cybersecurity. These are the industries where a cyber incident could not only cause data loss but potentially large-scale physical or economic harm. Governments in 2025 are aggressively moving to fortify critical infrastructure through compliance mandates.

Broader coverage under NIS2 (EU): The EU’s NIS2 directive expands the scope of regulated sectors compared to its predecessor. It now encompasses a wide range of sectors, including energy, transport, banking, healthcare, public infrastructure, digital infrastructure (such as DNS and data centers), space, and more. It also adds the manufacturing of critical products. Essentially, many organizations that never had to report security status to a regulator before will now fall under NIS2 if they meet size criteria in these sectors.

Compliance with NIS2 means implementing all the baseline security measures (risk assessments, incident response plan, supply chain security, cryptography, access control, etc.), and instituting management accountability and governance oversight of cyber risks. If a power grid operator in the EU fails to patch known vulnerabilities, for instance, it could face significant fines under NIS2 just as it would for violating safety rules. Moreover, the directive’s personal liability clause for managers is a big stick to ensure cyber is taken seriously at the top.

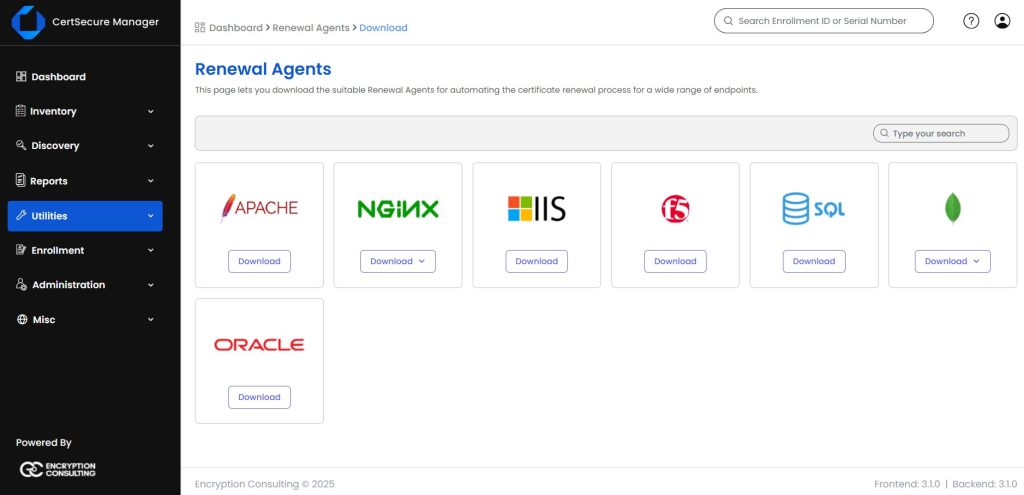

How can Encryption Consulting help?

Encryption Consulting offers a structured Compliance Advisory Service designed to align your organization with global regulatory frameworks such as GDPR, PCI DSS, HIPAA, NIS2, and DORA. As part of our service, we provide:

- Current State Assessment: We review your existing encryption, key management, and security policies, assess technical environments, and gather compliance documentation to establish a clear baseline.

- Gap Analysis: We evaluate policies and controls against industry standards, identify misalignments, and conduct workshops and questionnaires to uncover weaknesses in encryption and compliance practices.

- Findings and Recommendations: We deliver a detailed report with actionable recommendations, prioritized by risk, compliance impact, and business needs, to strengthen your overall security environment.

- Roadmap Development: We create a step-by-step strategy mapped to compliance goals, industry standards, and milestones, ensuring sustainable compliance and efficient remediation.

- Ongoing Advisory: We provide continuous support through periodic reassessments, regulatory updates, team training, and strategic guidance during audits and incident responses.

With this end-to-end approach, we help organizations not only meet compliance requirements but also build resilience, reduce risk, and stay prepared for future regulatory demands.

Conclusion

The year 2025 marks an inflection point in compliance, where cybersecurity, privacy, and governance obligations reach new heights of rigor and breadth. The trends we’ve explored, from global data privacy expansion and encryption mandates to AI governance, disclosure requirements, ESG integration, supply chain security, zero trust, automation, and industry-specific rules, together paint a picture of a future where organizations must be proactive, transparent, and resilient. Compliance is no longer a static checklist but a living, strategic function that must adapt continuously to emerging risks and rules.

So, how can organizations prepare for the compliance landscape of the next few years?

- Build a holistic compliance strategy: Silos between privacy compliance, cybersecurity, and corporate governance need to be broken down. An integrated approach (perhaps using a common controls framework and unified GRC platform) will ensure nothing falls through the cracks and reduce redundant efforts. For example, a unified compliance committee or working group can oversee data protection, cyber risk, and ESG disclosures collectively, recognizing their interdependencies.

- Stay ahead of regulations: Given the accelerating pace of regulatory change, organizations should invest in horizon-scanning capabilities. This could mean dedicating personnel or using regulatory watch services (and AI tools) to monitor proposed laws and emerging standards in all regions where you operate. Being involved in industry associations or public comment periods can provide valuable insights and influence. The goal is to avoid surprises: if you know, for instance, that an AI Act or a new state law is coming in 18 months, you can start aligning policies now rather than scrambling later.

- Embrace technology and automation: The complexity of compliance in 2025 and beyond simply cannot be managed manually. Organizations should leverage compliance automation to manage control monitoring, evidence collection, and reporting. Not only does this make compliance more efficient, it also often improves security posture by providing real-time feedback. Additionally, consider how emerging tech like AI can assist, perhaps in automating code reviews for security (helping with software supply chain compliance) or analyzing user behavior for insider threat (tying into zero trust). An important note: technology should augment a skilled compliance team, not replace it. Talented compliance professionals who understand the business and technology will remain indispensable.

- Cultivate a compliance culture: Regulations can impose requirements, but it’s ultimately people who implement them. Leading organizations foster a culture where employees at all levels understand the importance of compliance and feel personally invested in it. This involves regular training (with engaging content, not just dull checkboxes), leadership messaging about integrity and security, and integrating compliance objectives into performance goals. For instance, making security and compliance a part of everyone’s job description, developers writing code with security in mind, salespeople being careful with customer data, etc., creates an environment where complying is the norm, not an afterthought.

- Enhance incident readiness and transparency: With mandatory disclosures and fast reporting timelines, companies must be ready to respond to incidents before they occur. This includes having detailed incident response playbooks, communication plans (including draft templates for regulator, customer, and investor notifications), and even media handling strategies. Conduct regular breach simulations that involve not just IT but also legal, PR, and executive leadership so that if the worst happens, the organization can respond in a coordinated, compliant manner. Moreover, given the focus on transparency, it’s wise to assume that significant incidents will become public, so acting with honesty and responsibility during incidents is part of maintaining trust (and regulators do consider cooperation and candor when determining penalties).

- Measure and report on compliance internally: Boards and executives should receive meaningful metrics about the company’s compliance posture. This might include KPIs such as percentage of staff who completed security training, number of high-risk findings from audits that were remediated, time to patch critical vulnerabilities, or third-party risk ratings. By quantifying and tracking these, leadership can better oversee compliance (which ties to ESG expectations too) and allocate resources where needed. In many industries, regulators now expect board minutes to reflect discussions of cybersecurity and compliance; having regular reports helps fulfill that duty and can be shown as evidence that management is engaged.

- Plan for future trends: Looking ahead, we can foresee some areas that may become tomorrow’s compliance challenges. For example, quantum computing was discussed earlier: organizations might develop a roadmap for crypto agility now, so they aren’t caught off guard in the 2030s.

In essence, organizations should strive to transform compliance from a burdensome cost center into a business enabler and trust builder. Those that manage compliance well gain credibility with customers (who know their data is protected), with partners (who see them as secure links in the chain), and with regulators (who may then grant certifications or faster approvals). For example, demonstrating robust compliance with security standards can open doors to working in sensitive sectors or handling government data, which can be a market differentiator.
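The internal metrics suggested in the list above (training completion, remediation of high-risk findings, time to patch) are straightforward to compute once the raw counts are available. The sketch below assumes hypothetical inputs and KPI names; where the numbers come from (an LMS, an audit tracker, a vulnerability scanner) is left open.

```python
from statistics import mean


def pct(part: int, whole: int) -> float:
    """Percentage rounded to one decimal; 0.0 when there is nothing to measure."""
    return round(100 * part / whole, 1) if whole else 0.0


def compliance_kpis(staff_total, staff_trained,
                    high_findings, high_findings_remediated,
                    critical_patch_days):
    """Build a small board-level KPI summary.

    critical_patch_days: days taken to patch each critical vulnerability
    in the reporting period (hypothetical input format).
    """
    return {
        "training_completion_pct": pct(staff_trained, staff_total),
        "high_risk_remediation_pct": pct(high_findings_remediated, high_findings),
        "mean_days_to_patch_critical": (
            round(mean(critical_patch_days), 1) if critical_patch_days else None
        ),
    }


if __name__ == "__main__":
    summary = compliance_kpis(
        staff_total=200, staff_trained=184,
        high_findings=25, high_findings_remediated=20,
        critical_patch_days=[5, 12, 7],
    )
    for name, value in summary.items():
        print(f"{name}: {value}")
```

Tracking the same few numbers each quarter tends to be more useful to a board than a long dashboard, because trends become visible.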

Compliance in 2025 and beyond is undoubtedly challenging; the bar is higher than ever. But by taking a strategic, proactive approach and leveraging the trends outlined above, organizations can not only avoid penalties and incidents but truly turn strong compliance into a competitive advantage.

Those who prepare today for the regulations and risks of tomorrow will be the ones best positioned to thrive in an environment where trust and accountability are paramount. As the saying goes, “Compliance is a journey, not a destination”, and that journey is accelerating. Now is the time to tighten your seatbelts, map your route, and drive your compliance program forward confidently into the future.