The use of network-connected Internet of Things (IoT) devices is growing rapidly, and with it the number of cybersecurity and supply chain attacks targeting these endpoints. Real-world attacks have shown how firmware can be exploited to create botnets, steal data, or even take control of critical infrastructure. The infamous Mirai botnet, for example, leveraged insecure IoT devices to launch massive DDoS attacks. Many of those devices had hardcoded credentials and no way to update their firmware.

For this reason, keeping the firmware of your IoT devices up to date is a crucial aspect of your cybersecurity posture. Firmware updates fix software bugs, patch vulnerabilities, and add new security features. Equally important is ensuring that these updates are secure and trusted.

Key challenges with IoT firmware security

Securing firmware is not as straightforward as securing a web application. IoT devices are often resource-constrained, meaning they don’t have the processing power or memory to support traditional security protocols. They’re also incredibly diverse—different chipsets, different operating systems, different update mechanisms. This lack of standardization makes it hard to apply a one-size-fits-all solution.

Supply chain vulnerability is another key challenge for firmware security, because firmware is often developed by third-party vendors or assembled from open-source components. This opens the door to tampering long before the device reaches the end user. And once it’s out in the field, updating that firmware becomes a logistical nightmare, unless you’ve planned for it from the start.

Additionally, unauthorized access to code-signing keys or firmware signing mechanisms allows attackers to impersonate the vendor and deliver malicious updates that devices accept as trusted.

Finally, insecure coding practices can leave flaws such as buffer overflows, which attackers exploit to gain remote access to devices and then launch DDoS or malware-injection attacks.

Consequences of insecure IoT firmware

Insecure IoT firmware exposes devices to attacks that can be launched remotely and without warning. Let’s look at some examples:

- In September 2016, the creators of Mirai launched a DDoS attack on the website of a well-known security expert. Shortly after, they released the source code, which was quickly adopted by other cybercriminals. This led to a massive attack on the domain registration services provider, Dyn, in October 2016, causing widespread disruption.

- The RIFT botnet emerged in December 2018, using a variety of exploits to infect IoT devices. According to online sources, the botnet used 17 exploits. On December 11, 2018, a remote code execution vulnerability was reported in the ThinkPHP framework, in which OS commands were injected through the query parameter “vars”. This follows a typical exploitation sequence observed in RIFT attacks.

- In another incident, D-Link accidentally leaked private code-signing keys used to sign software. While it’s not known whether the keys were used by malicious third parties, the possibility exists that they could have been used by a hacker to sign malware, making it much easier to execute attacks.

- In one attack on connected cars, researchers at the Chinese firm Tencent revealed that they could burrow through the Wi-Fi connection of a Tesla Model S all the way to its driving systems and remotely activate the moving vehicle’s brakes.

Securing IoT firmware updates – Vendors’ perspective

Over-the-air (OTA) updates allow you to push firmware patches, security fixes, and even new features to devices remotely without the need for any physical access. OTA updates are usually delivered through cellular data (4G or 5G) or through internet connections.

OTA not only makes your firmware update process more convenient but also adds resiliency, giving your organization the crypto agility to replace vulnerable algorithms, keys, or code when a new threat emerges. Without OTA, you’re stuck with whatever firmware was on the device at launch. And if that firmware has a flaw, you’re in trouble.

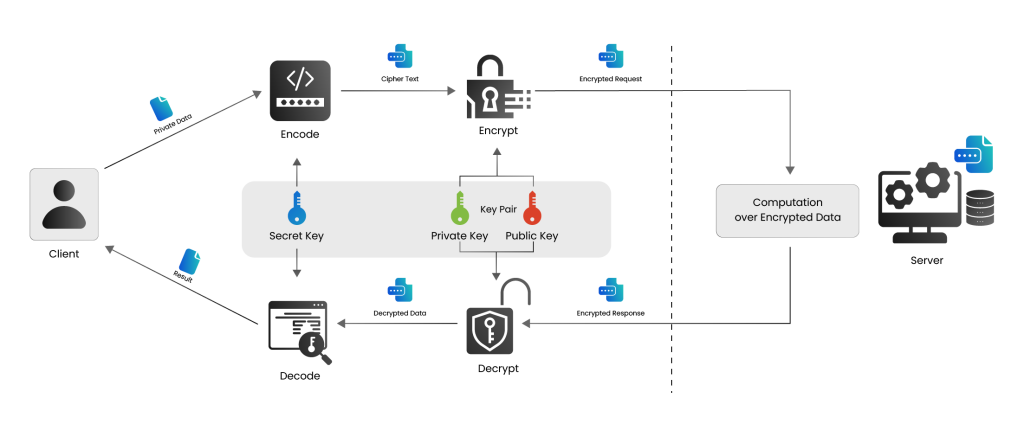

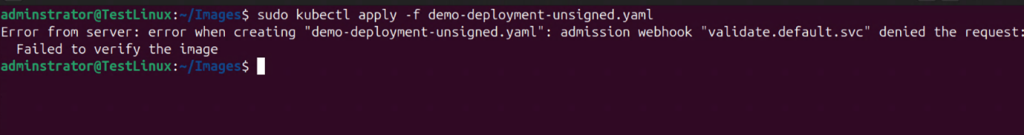

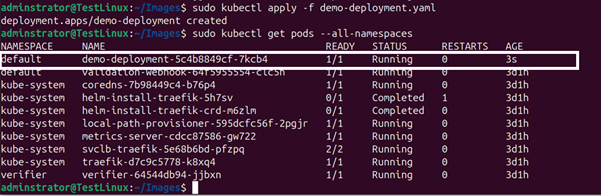

Designing a secure OTA system is crucial to ensure firmware security. Every update should be signed with a private key and verified on the device using the corresponding public key. This ensures that only trusted updates are installed. Devices should reject any update that fails this verification. The update process should also include integrity checks to detect tampering during transmission. Rollback protection is equally important, so that attackers cannot downgrade firmware to a vulnerable version.
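The three device-side checks described above (signature, integrity, rollback protection) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: the `UpdatePackage` layout, the `version:digest` signed format, and all function names are hypothetical. The signature check is passed in as a callable, which on a real device would wrap a public-key verification from a vetted crypto library. The `demo_sign` and `demo_verify` helpers use HMAC purely as a stand-in so the sketch runs with the standard library alone; HMAC is a symmetric scheme and is not a substitute for a private/public key signature.

```python
import hashlib
import hmac
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass(frozen=True)
class UpdatePackage:
    """Hypothetical update layout; real manifests differ per vendor."""
    version: int        # assumed: versions increase monotonically
    image: bytes        # firmware payload
    sha256_hex: str     # image digest declared in the manifest
    signature: bytes    # vendor signature over signed_bytes()


def signed_bytes(version: int, sha256_hex: str) -> bytes:
    """The bytes the vendor signs: version and image digest together."""
    return f"{version}:{sha256_hex}".encode()


def accept_update(
    pkg: UpdatePackage,
    installed_version: int,
    verify_signature: Callable[[bytes, bytes], bool],
) -> Tuple[bool, str]:
    """Return (accepted, reason) for a candidate update."""
    # 1. Authenticity: the manifest must carry a valid vendor signature.
    if not verify_signature(signed_bytes(pkg.version, pkg.sha256_hex), pkg.signature):
        return False, "bad signature"
    # 2. Integrity: the received image must match the signed digest.
    actual = hashlib.sha256(pkg.image).hexdigest()
    if not hmac.compare_digest(actual.encode(), pkg.sha256_hex.encode()):
        return False, "digest mismatch"
    # 3. Rollback protection: only strictly newer versions are allowed.
    if pkg.version <= installed_version:
        return False, "rollback rejected"
    return True, "ok"


# --- Demo stand-in only: HMAC replaces a real public-key signature here ---
_DEMO_KEY = b"demo-key-not-for-production"


def demo_sign(message: bytes) -> bytes:
    return hmac.new(_DEMO_KEY, message, hashlib.sha256).digest()


def demo_verify(message: bytes, signature: bytes) -> bool:
    return hmac.compare_digest(demo_sign(message), signature)


def build_demo_package(version: int, image: bytes) -> UpdatePackage:
    digest = hashlib.sha256(image).hexdigest()
    return UpdatePackage(version, image, digest, demo_sign(signed_bytes(version, digest)))
```

Because the signature covers both the version and the digest, an attacker cannot relabel an old, vulnerable image with a higher version number without invalidating the signature. For example, `accept_update(build_demo_package(5, b"firmware-v5"), 4, demo_verify)` accepts the update, while the same package is rejected on a device that already runs version 5.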

Equally important is the infrastructure behind the updates. You need a secure server environment to host the firmware, a reliable delivery mechanism, and a monitoring system to track update success rates and failures. User transparency matters as well: keeping your users informed about when updates are happening and why builds trust and reduces resistance.

Securing IoT firmware updates – End User’s perspective

Insecure IoT firmware updates can have serious consequences for the users of these devices, especially because attacks can be carried out remotely, without any physical access to the device.

To safeguard against insecure firmware updates, avoid automatic updates, especially on untrusted networks, and only download updates from the vendor’s secure website using HTTPS. Also, prioritize firmware support in your purchase decisions.
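For users who script their own downloads, the "vendor site over HTTPS only" rule can be enforced before anything is fetched. The sketch below is a minimal example using Python’s standard `urllib.parse`; the function name and the `firmware.example.com` host are hypothetical placeholders for the real vendor domain.

```python
from urllib.parse import urlsplit


def is_trusted_update_url(url: str, vendor_host: str) -> bool:
    """Accept only HTTPS URLs whose host is exactly the vendor's host."""
    try:
        parts = urlsplit(url)
    except ValueError:
        # Malformed URLs are never trusted.
        return False
    # urlsplit lowercases the scheme and hostname, so compare in lowercase.
    return parts.scheme == "https" and parts.hostname == vendor_host.lower()
```

Comparing the parsed hostname exactly, rather than searching the URL string for the vendor name, rejects lookalikes such as `https://firmware.example.com.evil.net/fw.bin` and `https://firmware.example.com@evil.net/fw.bin`.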

Real-World Applications, Standards and Innovations in firmware security

Industries that rely heavily on IoT, such as automotive, healthcare, and manufacturing, are already seeing the benefits of secure OTA updates. In the automotive sector, for example, OTA updates are used not just for infotainment systems but for critical safety features. Tesla famously uses OTA to roll out everything from performance improvements to bug fixes, often overnight.

In healthcare, where devices like insulin pumps and heart monitors are increasingly connected, OTA updates can be a matter of life and death. A vulnerability in a medical device isn’t just a security issue, it’s a patient safety issue. Secure OTA mechanisms ensure that these devices can be updated quickly and safely, without requiring a hospital visit.

Standards like NIST’s IoT cybersecurity framework and ETSI EN 303 645 are pushing IoT manufacturers towards adopting secure development practices, rigorous testing, and a robust OTA infrastructure.

Recent research has proposed advanced cryptographic techniques to secure firmware updates against supply chain attacks. For example, post-quantum cryptography (PQC) signature schemes such as Dilithium and SPHINCS+ (standardized by NIST as ML-DSA and SLH-DSA) are being explored to ensure firmware authenticity and integrity in a quantum-resilient manner.
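To give a feel for why hash-based schemes are considered quantum-resilient, the sketch below implements a Lamport one-time signature, the simplest ancestor of the hash-based family that SPHINCS+ belongs to. It is not SPHINCS+ and not suitable for production: each key pair may sign only one message, and keys and signatures are large. The point is that its security rests only on the hash function, with no reliance on factoring or discrete logarithms. All function names here are illustrative.

```python
import hashlib
import secrets


def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def keygen():
    """Private key: 256 pairs of random secrets. Public key: their hashes."""
    sk = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
    pk = [(_h(a), _h(b)) for a, b in sk]
    return sk, pk


def _bits(message: bytes):
    """The 256 bits of SHA-256(message), most significant bit first."""
    digest = _h(message)
    return [(digest[i // 8] >> (7 - i % 8)) & 1 for i in range(256)]


def sign(sk, message: bytes):
    """Reveal one secret per digest bit. The key must never be reused."""
    return [sk[i][bit] for i, bit in enumerate(_bits(message))]


def verify(pk, message: bytes, signature) -> bool:
    """Hash each revealed secret and compare with the public key entry."""
    if len(signature) != 256:
        return False
    return all(
        _h(revealed) == pk[i][bit]
        for i, (revealed, bit) in enumerate(zip(signature, _bits(message)))
    )
```

A firmware image signed with `sign(sk, image)` verifies with `verify(pk, image, signature)`, while any modified image fails, because forging a signature would require finding preimages of the public key hashes.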

How could Encryption Consulting help?

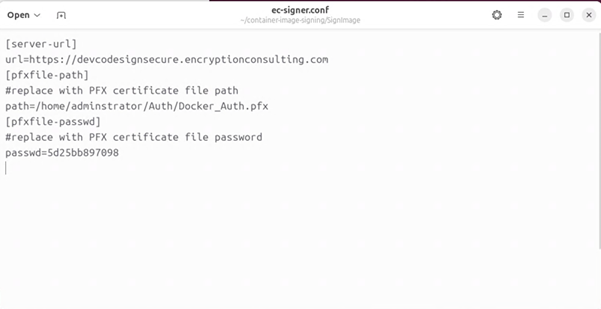

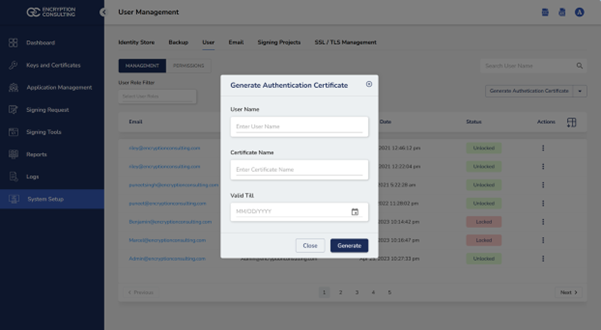

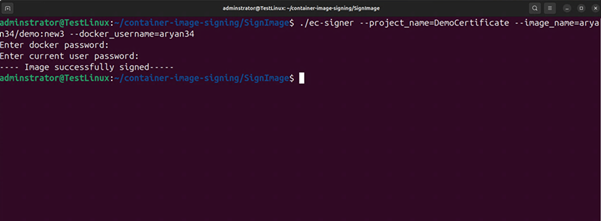

Encryption Consulting’s CodeSign Secure protects your software with a powerful, policy-driven code-signing solution that streamlines security.

Encryption Consulting’s advisory services could help your organization discover enterprise-grade data protection with end-to-end encryption strategies that enhance compliance, eliminate risk blind spots, and align security with your business objectives across cloud, on-prem, and hybrid environments.

Additionally, Encryption Consulting’s PQC assessment service could help your organization by conducting a detailed assessment of your on-premises, cloud, and SaaS environments, identifying vulnerabilities and recommending the best strategies to mitigate the quantum risks.

For more information about our products and services, please visit:

Code Signing Solution | Get Fast, Easy & Secure Code Signing with CodeSign Secure

Post Quantum Cryptographic Services | Encryption Consulting

Conclusion

Firmware may be invisible to most users, but it’s the backbone of every IoT device. Investing in secure firmware practices and robust OTA update systems is the key to safeguarding devices and mitigating the risks associated with firmware vulnerabilities and cryptographic attacks.